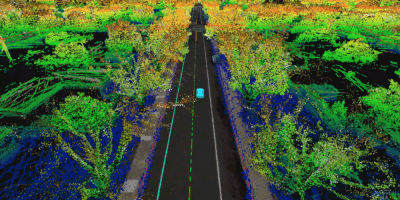

Self-driving cars must learn how to play nice with human road users before they're safe on the streets. Source: Shutterstock

Selfish autonomous cars must learn courtesy

AUTONOMOUS cars are designed to deliver a passenger from one point to another quickly and safely, without ever needing human intervention.

The algorithms are supposed to effectively calculate the optimum route and avoid collisions by predicting the movements of other objects.

Sounds good on paper. However, the AIs powering self-driving cars still have much to learn before they're ready for real-world use. One of those lessons is how to deal with human drivers.

Researchers from the Department of Mechanical Engineering at the University of California, Berkeley are training AIs to be courteous drivers.

“This is of crucial importance since humans are not perfectly rational, and their behavior will be influenced by the aggressiveness of the robot cars,” the researchers wrote in a report.

Currently, most autonomous driving algorithms are designed to prioritize the safety and driving quality of the vehicle itself. The systems are trained to anticipate human actions and then plan the car's own actions to be as efficient as possible for the car.

In simpler terms, the car is motivated by selfish reasons. The researchers found that AI behaviors trained under these models can range from overly conservative to extremely aggressive, both of which pose dangers on the road.

A simple solution would be to code a rule so that the robot always gives humans the right of way. However, that defeats the purpose of having autonomous cars in the first place: a more efficient transport system.

Therein lies a catch-22: existing AI algorithms cannot be coded to be both efficient and nice. As the researchers found, the purportedly "efficient" algorithms have cars cutting people off or inching forward at intersections to go first.

“While this behavior is good sometimes, we would not want to see it all the time,” noted the report.

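The researchers attempted to quantify courtesy by comparing how the human driver fares with the robot car against how they would fare in an alternative scenario. They considered three such scenarios: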

- What would the human do if the robot car weren't present?

- What would the human do if the robot car actively helped them?

- What would the human do if the robot car didn't change its maneuver?
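One way to picture this comparison is as a penalty added to the robot car's own cost: the robot is charged for however much worse off it leaves the human compared with the chosen alternative scenario. The sketch below is a simplified illustration under that assumption, not the Berkeley team's exact formulation. The names `Outcome`, `courtesy_penalty`, `courteous_cost`, `choose_plan`, the weight `lam`, and all the cost numbers are hypothetical; the human's cost in the alternative scenario (one of the three above) is simply taken as a given number rather than predicted.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Hypothetical summary of one candidate plan for the robot car."""
    robot_cost: float  # lower is better for the robot car
    human_cost: float  # lower is better for the human driver


def courtesy_penalty(actual_human_cost: float, alternative_human_cost: float) -> float:
    """How much worse off the human is than in the alternative scenario.

    Only increases are penalized; if the robot leaves the human better off
    than the alternative would, the penalty is zero.
    """
    return max(0.0, actual_human_cost - alternative_human_cost)


def courteous_cost(outcome: Outcome, alternative_human_cost: float, lam: float) -> float:
    """Robot's own cost plus a weighted courtesy penalty (lam is a hypothetical weight)."""
    return outcome.robot_cost + lam * courtesy_penalty(outcome.human_cost, alternative_human_cost)


def choose_plan(plans: dict, alternative_human_cost: float, lam: float) -> str:
    """Return the name of the plan with the lowest courteous cost."""
    return min(plans, key=lambda name: courteous_cost(plans[name], alternative_human_cost, lam))


if __name__ == "__main__":
    # Toy numbers: cutting in is cheap for the robot but costly for the human.
    plans = {
        "cut_in": Outcome(robot_cost=1.0, human_cost=5.0),
        "yield": Outcome(robot_cost=2.0, human_cost=1.0),
    }
    # Assumed human cost in the alternative scenario (e.g. robot car not present).
    alt = 1.0
    print(choose_plan(plans, alt, lam=0.0))  # purely selfish: prints cut_in
    print(choose_plan(plans, alt, lam=1.0))  # courtesy weighted in: prints yield
```

With `lam=0` the robot ignores the human and cuts in; raising `lam` makes the penalty for inconveniencing the human outweigh the robot's small efficiency gain, so it yields. Choosing among the three scenarios only changes which human cost serves as the baseline.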

Simulations run during the research showed that the cars behaved less aggressively with the courtesy terms included. The researchers also argue that the courtesy terms can help explain human driving behavior.

This means that not only can robots learn to drive more like humans, but the model can also help other algorithms better predict human actions.

As we inch closer to 2020, the year the automotive industry generally expects the first commercial self-driving cars to be available, algorithms need to cater to current road conditions, where humans will still largely be behind the wheel.

Until we reach a future where every vehicle on the road is self-driving and connected to the others, AIs that understand human reactions will still be needed.
