The next generation of data management and business intelligence, explored

While the technology and business media’s claims that technology is “the new gold” is probably hyperbole, it’s undeniable that there are staggering amounts of data being accrued by every organization, every day.

That’s thanks in part to most areas of commerce (and indeed, of life) being based on technology, and also due to technology’s consumerization — that is, the fact that everyone now carries a smartphone with them at all times.

The opportunities presented by data repositories are significant, and vary wildly according to industry vertical. Manufacturers, for instance, can use IIoT data to predict machinery failures better, financial institutions can track fraud better, retailers connect online shopping sites with brick-and-mortar stores.

Regardless, there are advantages of using data to any organization or company, as well as freely available information, and specially collected data, too. There are greater efficiencies to be gained, better strategizing, the giving of higher value — and all with the resources that most organizations already have, but have yet to exploit fully.

As part of the technology press, it would be easy to say that it’s just a case of plugging in appliance A or deploying software B. Unfortunately, the realities are slightly more involved! While data exists in quantities that need terms like “data lakes” to describe them, the devil is in the detail. Common problems are:

– Unstructured data, or disparate data that’s differently formatted.

– Data silos that are difficult to connect with, or several silos containing replica data.

– Information that’s not properly stored and backed up — it’s an easy commodity to lose.

– Being aware of where data is held and what it describes or pertains to.

– The very quantities of information mean insights are challenging to surface.

– Data governance & strictures, the law, and cybersecurity.

– Data’s age — is it up to date? The issue of real-time data is of paramount concern.

In enterprise-scale organizations, there are four discrete business functions whose lifeblood is data — although arguably, the modern business is entirely dependent on the zeros and ones stored in silicon.

And each of these specific functions is starting to use solutions in their daily work that can be summarized as “DataOps”. DataOps is analogous to DevOps, in that it’s dependent on continuous development and continuous integration. As information changes, the data professionals need to know where data is, what it is, what has treated or altered the data, and finally (and certainly not leastly) what value can be drawn from the data. Those roles can be broadly defined as:

# 1 | The data engineers. These are the people and tools that source the data, manage the lakes of information, ensure that there’s no extraneous data duplication, and administer that databases that hold the “new gold.”

# 2 | Data scientists create the models that mould the information, mine the repositories and process the data, often using tools that are everyday items in the DevOps function’s toolbox. Among these tools are low-code interfaces that present real-time data that can quickly surface values, like lowered costs and risks.

# 3 | Self-service data processors. Working from information with excellent provenance (clean data, up to date, accurate data) and information shaped by data scientists in many cases, the roles that serve themselves can be anyone in the business. That ability requires the type of next-generation data tools, two of which we feature below, and it’s here that the first outward signs of surfaced, empirical business results begin to appear.

# 4 | Data governance. The people and systems that ensure information is safe, held and processed in ways fitting to the industry type, geography and local rules & guidelines. Governance’s remit extends into all the above roles, too (or it should), with safety and legality baked into every process.

Here at Tech Wire Asia, we’re showcasing two major players in the DataOps vertical, solutions capable of managing and processing the torrents of data that are the daily reality at enterprise scale.

By better maintenance, governance and intelligent use of data tools, businesses can create the type of agility and scalability that DataOps methodology can produce.

Without an appropriate data platform on which to build an organization’s processes, decisions and strategies, there’s little hope of determining the most efficient, timely and agile strategies.

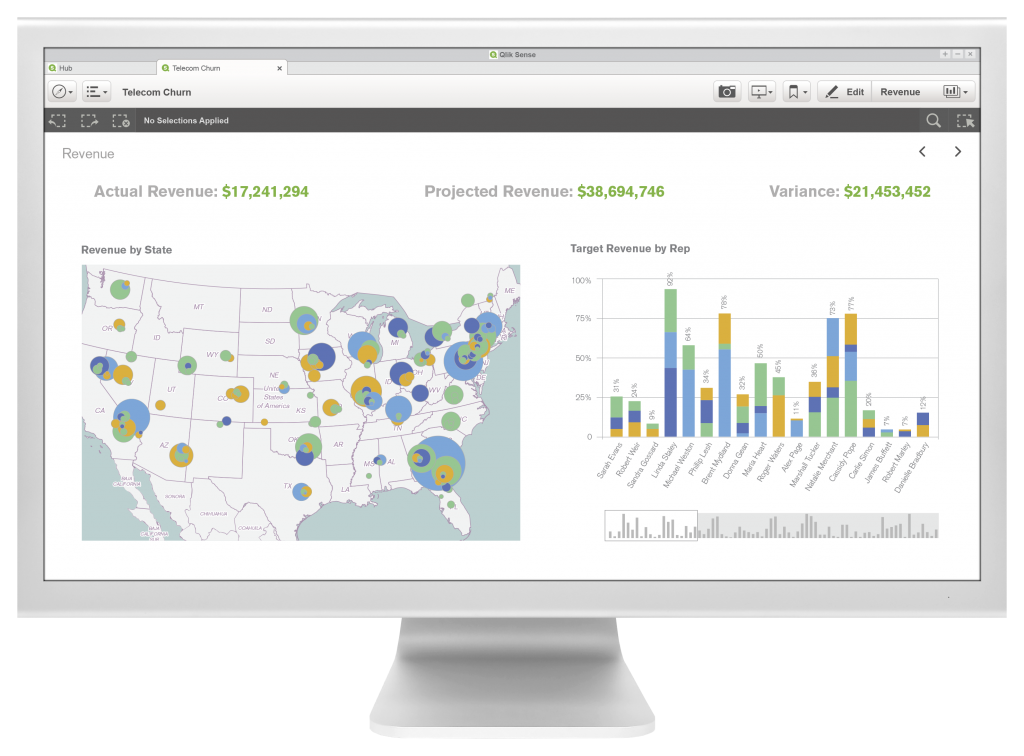

QLIK

It’s probably a gross oversimplification, but the Qlik Agile Data Warehouse platform can be distilled down into three — outwardly simple — steps.

Source: Qlik

Firstly, there’s the arrival of data into the enterprise in real-time. As most people aware of technology know, the stream of data that we recognize in 2020 will soon be a torrent, deluge… or some other hyperbole. Scalability, therefore, is paramount in any data platform, and Qlik’s technology ensures that it can assimilate information in real-time, from hundreds, if not thousands of sources.

Step two is the refinement of data, using automated processes. With Qlik, you can design and build warehouses of information, clean and de-duped, normalized and ready for use.

The third step is the delivery of data collaboratively and safely. The data catalogue is continuously up-to-date, and presented according to business needs, regardless of where it’s hosted or how it’s been processed (although that information is transparent and available, if needed).

Whether it’s for use by data-parsing specialists, or available via intuitive self-service, the information locked away in a business is now ready to provide significant value.

Qlik’s Data Warehouse solutions form a coherent picture that’s of practical business value for enterprises, many of whom are struggling to get more than a glimpse of the resources they have.

Read more about Qlik’s game-changing offerings here on the pages of Tech Wire Asia.

IBM

As you might expect from IBM, a great deal of the marketing collateral over DataOps and data management in general points to the company’s open, Watson-derived AI engines. It’s to AI that businesses are turning, increasingly, to mine petabytes of information in real-time to surface insights.

IBM’s DataOps capabilities create an analytics foundation that’s designed from the ground up to be business-focused: this is a long way from the academic research (partly funded by IBM, back in the day) that lead to Watson and the mainstreaming of machine learning and cognitive programming.

Master data management creates trust-able data, allows self-service access without qualms, and data governance and security are assured. That means Big Blue’s customers can begin their “big data journey” in a great deal less time than finding and equipping data scientists, database specialists and data governance experts. Its off-the-shelf modular approach combines nicely with the company’s collaborative approach, meaning as an enterprise partner, there will always be expert guidance and the technical tools to underpin any offering.

While it’s difficult to pin down a single web landing page to continue your investigations, here is a good starting point.

*Some of the companies featured on this article are commercial partners of Tech Wire Asia

READ MORE

- Ethical AI: The renewed importance of safeguarding data and customer privacy in Generative AI applications

- How Japan balances AI-driven opportunities with cybersecurity needs

- Deploying SASE: Benchmarking your approach

- Insurance everywhere all at once: the digital transformation of the APAC insurance industry

- Google parent Alphabet eyes HubSpot: A potential acquisition shaping the future of CRM