Exploiting ChatGPT: How Russian cybercriminals are using the OpenAI API in dark web operations

- CPR has observed an increase in dark web activity involving ChatGPT, even in countries where OpenAI does not provide support.

- Russian cybercriminals have reportedly found ways to bypass the limitations placed on unsupported countries.

As the use of AI and machine learning technology continues to grow across industries, it is not surprising that cybercriminals have also started to take advantage of these tools.

Recently, Tech Wire Asia reported a warning from Check Point Research (CPR) about cybercriminals using ChatGPT to create malware. According to CPR, cybercriminals are using the language model's capabilities to develop an automatic encryption tool and scripts for a dark web marketplace. One of the main concerns with ChatGPT is how easily it can be accessed through the OpenAI API.

CPR has observed an increase in dark web activity involving ChatGPT, even in countries where OpenAI does not provide support. Russian cybercriminals have found ways to use ChatGPT for their illegal activities, and CPR expects them to use it to streamline malware development and lower the initial investment needed for cybercrime.

To access ChatGPT, Russian cybercriminals have reportedly found ways to bypass the restrictions placed on unsupported countries, which include IP address, phone number, and payment card checks.

Note: All the screenshots below were originally in Russian.

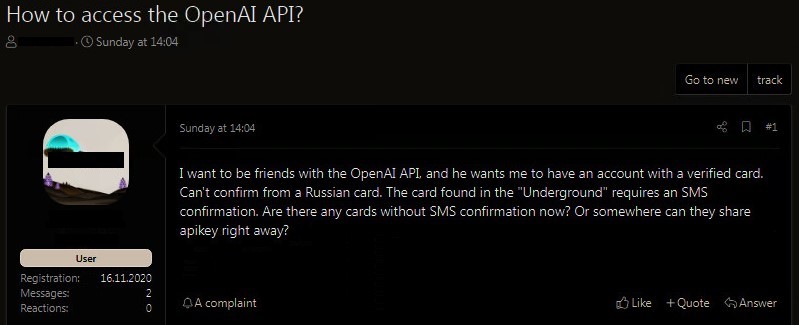

CPR discovered that Russian cybercriminals were attempting to gain access to OpenAI's API through illegal means. They were seeking advice on how to use stolen payment cards to purchase access, since they were having trouble paying with Russian payment cards.

How to access OpenAI API? (Source – Check Point)

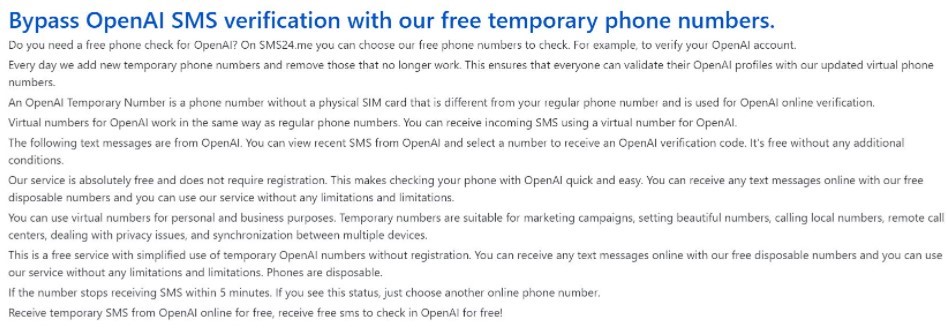

CPR also found discussions on a Russian underground forum about using ChatGPT to develop malware and ways to bypass OpenAI’s geographical controls. The cybercriminals also stated that they could bypass phone verification using various online SMS services for a small fee (around US$0.09).

Additionally, CPR found tutorials in Russian on semi-legal SMS text messaging services and confirmed that cybercriminals were actively using these services to bypass registration limitations on various online services.

Bypass OpenAI SMS verification with our free temporary phone numbers. (Source – Check Point)

Risks and potential uses of the ChatGPT API in cybersecurity

Since its release in November 2022, ChatGPT has gained a significant number of users globally and has inspired a wave of guides to help users make the most of its capabilities. As such tools grow in popularity, some experts predict that AI will generate 90% of online content by 2025. The sudden rise of OpenAI's chatbot has raised concerns across industries, and the cybersecurity industry is particularly worried.

Steve Povolny, Principal Engineer and Director at Trellix, said that ChatGPT has recently become a topic of conversation among the general public. While earlier language models generated interest within the data science community, few had practical uses for the average consumer.

“That can be put to rest now, as the smartest text bot ever made has inspired thousands of innovative use cases, applicable across nearly every industry,” said Povolny. “In the cyber realm, examples range from email generation to code creation and auditing, vulnerability discovery and much more.”

However, technological breakthroughs like this always bring security concerns. Povolny said that despite ChatGPT's safeguards against malicious input and output, cybercriminals are already finding ways to use the tool for illegal activities. These include crafting hyper-realistic phishing emails and producing exploit code by rephrasing their input or modifying the output.

“While text-based attacks such as phishing continue to dominate social engineering, the evolution of data science-based tools will inevitably lead to other mediums, including audio, video and other forms of media that could be equally effective. Furthermore, threat actors may look to refine data processing engines to emulate ChatGPT, while removing restrictions and even enhancing these tools' abilities to create malicious output,” he explained.

While the security concerns surrounding ChatGPT's potential for malicious use cannot be ignored, it is important to recognize the benefits it can bring to the cybersecurity industry. Detecting critical coding errors, simplifying complex technical concepts, and writing more resilient code are all examples of how ChatGPT can be used for good. Researchers, practitioners, academics, and businesses in the cybersecurity field can harness ChatGPT to drive innovation and collaboration.

In fact, ChatGPT can help improve the overall security of systems and networks by identifying vulnerabilities and potential threats that might otherwise have been missed. The key is to use the technology responsibly and cautiously while remaining mindful of the risks.

“It will be interesting to follow this emerging battleground for computer-generated content as it enhances capabilities for both benign and malicious intent,” Povolny concluded.