How Telegram bots are bypassing ChatGPT restrictions for malicious purposes

- According to Check Point Research (CPR), cybercriminals are using Telegram bots and scripts to get around restrictions imposed by ChatGPT.

- Cybercriminals are exploiting the OpenAI platform, specifically ChatGPT, to produce malicious content such as phishing emails and malware.

Is Telegram still relevant and influential these days? Apparently it is, but for all the wrong reasons. According to Check Point Research (CPR), cybercriminals are using Telegram bots, advertised on underground forums, to bypass the restrictions imposed by ChatGPT. The bots use OpenAI’s API to generate malicious emails or code.

Cybercriminals are using the OpenAI platform, particularly ChatGPT, to produce harmful content such as phishing emails and malware. A recent CPR blog post described how ChatGPT carried out an entire malicious flow, from crafting a convincing spear-phishing email to running a reverse shell that accepts commands in English.

CPR has also found cybercriminals using ChatGPT to improve the code of an outdated Infostealer malware from 2019. The code was not difficult to create, but ChatGPT made it more efficient and sophisticated.

Currently, there are two options for using OpenAI models:

- The web user interface provides access to ChatGPT, DALL-E 2, and the OpenAI Playground.

- The API allows the building of custom applications with OpenAI models and data running in the background.

Many reputable companies have integrated OpenAI’s API into their applications, as demonstrated by these examples.

Brands using OpenAI’s API model (Source – Check Point Research)

Sergey Shykevich, the Threat Group Manager at Check Point Software, explained that OpenAI has implemented measures such as barriers and restrictions as part of its content policy to prevent the creation of malicious content on its platform.

“However, we’re seeing cybercriminals find ways around ChatGPT’s restrictions, and there’s active discussion in underground forums about how to use the OpenAI API to bypass ChatGPT’s barriers and limitations,” said Shykevich. “This is mostly done by creating Telegram bots that use the API and are advertised in hacking forums to increase their exposure. The current version of the OpenAI API is used by external applications and has very few anti-abuse measures, allowing malicious content creation, such as phishing emails and malware code, without the limitations or barriers that ChatGPT has set in its user interface.”

He also noted that cybercriminals are continuously attempting to bypass the restrictions set in place by ChatGPT.

Bypassing limitations to create malicious content

OpenAI has implemented various measures to prevent the creation of harmful content on its platform, including restrictions within the ChatGPT user interface. Despite these efforts, cybercriminals are finding ways to bypass ChatGPT’s restrictions by creating Telegram bots that use the OpenAI API.

These bots are then advertised in underground forums, allowing for the creation of malicious content, such as phishing emails and malware code, without the limitations set by ChatGPT. The current version of the OpenAI API is used by external applications (for example, the integration of OpenAI’s GPT-3 model into Telegram channels). It lacks adequate anti-abuse measures, making it vulnerable to exploitation by malicious actors.

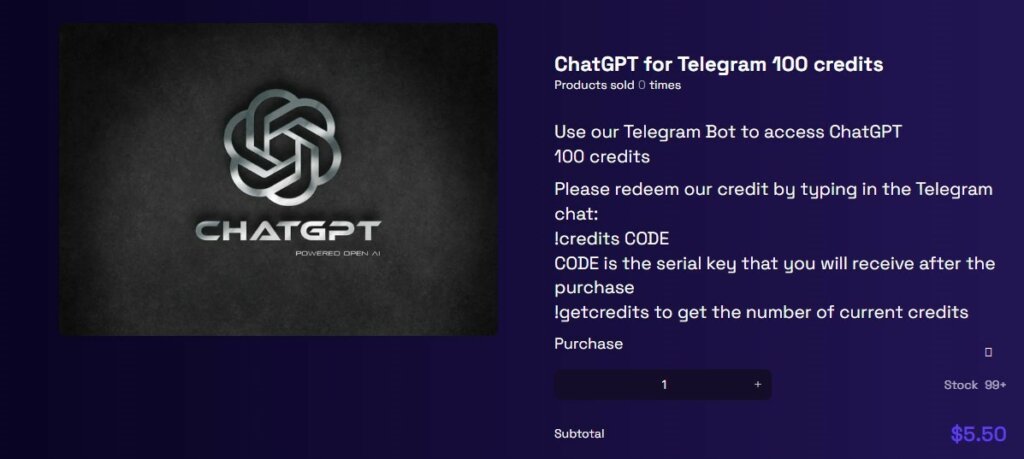

Example 1 – Telegram OpenAI Bot as a Service

CPR recently found a cybercriminal promoting a new service in an underground forum: a Telegram bot that uses the OpenAI API without any imposed restrictions. Under the bot’s business model, users get 20 free queries through ChatGPT, after which they are charged US$5.50 for every 100 queries.

Business model of OpenAI API based Telegram channel (Source – Check Point Research)
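To make the advertised pricing concrete, here is a minimal sketch of the arithmetic in Python. It uses only the figures quoted above (20 free queries, then US$5.50 per 100 queries). The function name and the assumption that paid queries are sold in whole blocks of 100 are illustrative; the forum post quoted by CPR does not say whether partial blocks are prorated.

```python
from decimal import Decimal

FREE_QUERIES = 20                  # free allowance quoted in the forum ad
BLOCK_SIZE = 100                   # paid queries are priced per 100
PRICE_PER_BLOCK = Decimal("5.50")  # US$ per block of 100 queries


def advertised_cost(total_queries: int) -> Decimal:
    """Return the US$ cost of a given number of queries.

    Assumption (not stated in the source): paid queries are billed
    in whole blocks of 100, rounded up.
    """
    if total_queries < 0:
        raise ValueError("total_queries must be non-negative")
    paid = max(0, total_queries - FREE_QUERIES)
    blocks = -(-paid // BLOCK_SIZE)  # ceiling division
    return blocks * PRICE_PER_BLOCK


if __name__ == "__main__":
    for n in (20, 120, 520):
        print(n, "queries:", advertised_cost(n))
```

Under that assumption, a buyer's first 100 paid queries work out to 5.5 US cents each.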

Not the first time Telegram bots have been used

Last year, cybercriminals exploited bots in popular messaging apps like Telegram and Discord to steal users’ credentials. Security vendor Intel471 reports that these attacks target users of the gaming platforms Roblox and Minecraft.

The gangs use Trojan malware designed to swipe information, which they attach to legitimate bots in the apps. These bots can steal sensitive information, including autofill data, bookmarks, browser cookies, card information, and passwords.

Researchers discovered that the X-files Trojan downloads information stored in multiple browsers through commands from a Telegram bot. Meanwhile, on Discord, the Blitzed Grabber info stealer is taking advantage of the app’s “webhooks” feature to store stolen data.

According to Check Point, the rising popularity of these messaging platforms means they’re becoming more attractive targets for hackers, leading to a 140% year-on-year increase in malware on Discord servers.

To summarize, cybercriminals constantly search for ways to exploit ChatGPT for malicious purposes, such as developing malware and crafting phishing emails. Despite efforts to enhance the controls on ChatGPT, cybercriminals persist in finding new, abusive uses for OpenAI models, including the abuse of their API.
