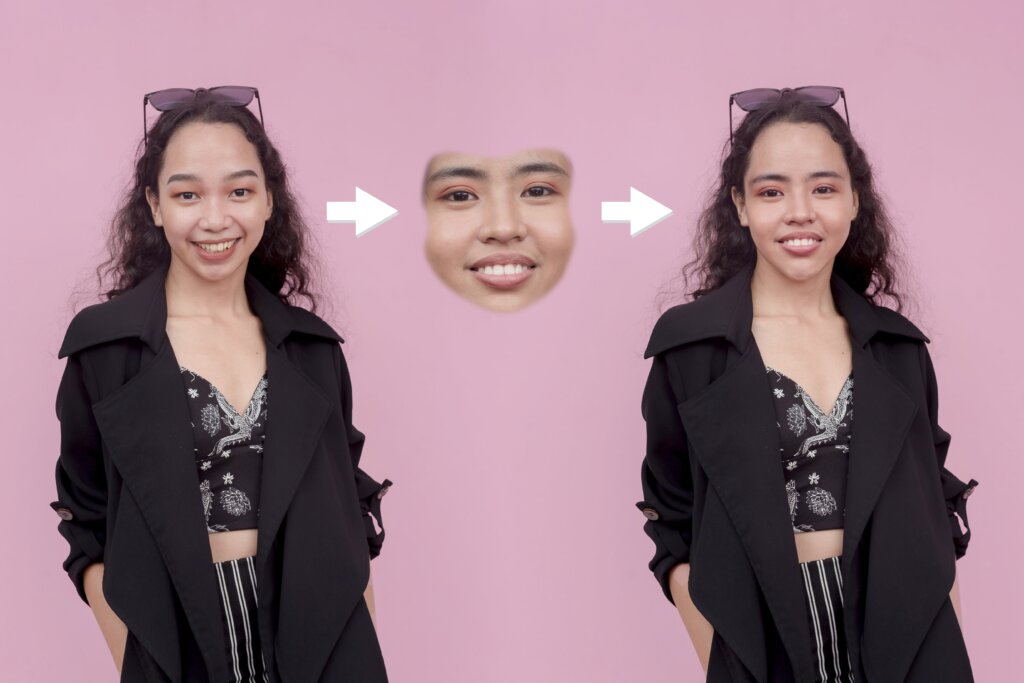

Beware of deepfake images and videos. (Image by Shutterstock).

The growth of deepfake content in Singapore

- Singapore PM warns of deepfake content featuring himself and other prominent individuals in Singapore.

- According to Sumsub’s Identity Fraud Report, there has been a 1550% surge in deepfake attacks in Asia Pacific.

- While deepfake content is becoming harder to detect, there are still a few ways users can spot it.

While generative AI in content creation has been revolutionary, it has also led to an increase in complex problems. In the past, editing videos and images would take hours or even days. But today, editing images takes just seconds, producing excellent results.

With the number of apps capable of editing and creating new images growing exponentially, there are concerns that some of these apps could be used for the wrong reasons. And true enough, there are such apps. These image generators are capable of creating deepfake images and even videos, which are becoming an increasingly large problem around the world.

Deepfakes are a big problem because they can be used to create fake or misleading videos, images, or audio that can harm people’s reputation, privacy, or security. Deepfakes can also spread misinformation, propaganda, or disinformation that can influence public opinion, undermine trust, or incite violence. Some of the potential risks of deepfakes are:

- Identity theft and fraud: Deepfakes can be used to impersonate someone’s voice, face, or biometric data, and use it for malicious purposes, such as accessing their accounts, stealing their money, or blackmailing them.

- Political manipulation and sabotage: Deepfakes can be used to create fake or altered speeches, interviews, or events involving political figures, and use them to sway voters, discredit opponents, or disrupt elections.

- Social and moral harm: Deepfakes can be used to create pornographic or abusive content involving celebrities, politicians, or ordinary people, and use it to humiliate, harass, or defame them.

- Legal and ethical challenges: Deepfakes can pose difficulties in verifying the authenticity, credibility, or source of digital content, and raise questions about the rights, responsibilities, and liabilities of the creators, distributors, and consumers of deepfakes.

Identity theft is fueling increasing deepfake content. (Image generated by AI).

The rise of deepfake content

There’s been a tenfold increase in the number of deepfakes detected globally across all industries from 2022 to 2023. Southeast Asia has witnessed increasing deepfake attacks in 2023. In fact, according to Sumsub’s Identity Fraud Report, there has been a 1550% surge in deepfake attacks in Asia Pacific. And no, that number’s not a misprint.
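To put the two figures side by side, it helps to convert between a percentage increase and a plain multiplier. The short sketch below does only that arithmetic; the function names are illustrative and the underlying case counts are not given in the report excerpt quoted here.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier of the original value."""
    return 1 + percent_increase / 100


def percent_increase(multiplier: float) -> float:
    """Convert a multiplier of the original value into a percentage increase."""
    return (multiplier - 1) * 100


if __name__ == "__main__":
    # A 1550% surge means the new figure is 16.5 times the old one.
    print(growth_multiplier(1550))
    # A tenfold increase is the same as a 900% increase.
    print(percent_increase(10))
```

In other words, a 1550% surge means roughly sixteen and a half times as many attacks as before, which is a steeper rise than the tenfold global figure.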

The country attacked by deepfakes the most is Spain, while the UAE passport is the most forged document worldwide. Latin America is the region where fraud increased in every country. When it comes to identity fraud, ID cards remain the most frequently exploited, accounting for nearly 75% of all fraudulent activities involving identity documents.

While many would assume pornographic content to be driving the growth of deepfake images, crypto is the main target sector. Sumsub’s report stated that 88% of all deepfake cases detected in 2023 involved crypto fraud.

“The rise of artificial intelligence is reshaping how fraud is perpetrated and prevented. AI serves as a powerful tool both for anti-fraud solution providers and those committing identity fraud. Our internal statistics show an alarming tenfold increase in the number of AI-generated deepfakes across industries from 2022 to 2023. Deepfakes pave the way for identity theft, scams, and misinformation campaigns on an unprecedented scale,” commented Pavel Goldman-Kalaydin, Head of AI/ML at Sumsub.

Singapore’s Prime Minister has been a victim of deepfake content.

Deepfakes in Singapore

While Singaporeans are enjoying the benefits of modern technology, the country is also seeing a rise in fraud driven by deepfake content. Prominent Singaporeans, including the country’s Prime Minister and Deputy Prime Minister, have had their images and voices used to create deepfake videos that promote scams.

In recent posts on Facebook, X and Instagram, Singapore Prime Minister Lee Hsien Loong said that he was aware of several videos of him circulating on social media platforms. The videos purport to show him promoting cryptocurrency.

“The scammers use AI technology to mimic our voices and images. They transform real footage of us taken from official events into very convincing but completely bogus videos of us purporting to say things that we have never said.”

According to a report by the South China Morning Post, one such altered video depicts Lee in an interview with a presenter from the Chinese news network CGTN in a segment titled Leaders Talk. The video has been altered to make it seem like he is talking about a government-sanctioned “transformative investment platform envisioned by Elon Musk.”

The Prime Minister also advised Singaporeans to report scams and fake news via the government’s official ScamShield Bot on WhatsApp.

Deepfake content is becoming harder to detect. (Image by Shutterstock).

How to spot deepfake content?

While deepfake content is becoming harder to detect, there are still a few ways users can spot it. Users should be wary of content in which prominent personalities, such as celebrities or politicians, promote products. Most politicians would not endorse any product, and even if they did, there would be more publicity about it.

Deepfake videos also tend to be blurry, with the image not as sharp as in the original footage. Users should look out for lip movements and how well they sync with the audio. Unfortunately, the technology is improving and it is getting harder to tell fake from real.

Apart from visual inspection, users can also conduct a metadata analysis. While this can be a bit more challenging, metadata analysis can reveal inconsistencies or anomalies in a file, such as in its format, compression, resolution and timestamps. More advanced techniques involve forensic analysis, such as facial recognition and eye tracking, to measure the naturalness and consistency of human expressions and emotions.
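As a rough illustration of what a metadata check might look like, the sketch below inspects a dictionary of metadata fields for missing values, implausible timestamps and mismatches against a known original. The field names, the `find_metadata_anomalies` function and the idea of a reference profile are all assumptions made for this example; in practice the metadata would first have to be extracted with a separate tool, and an anomaly is only a reason for closer scrutiny, not proof of a deepfake.

```python
from __future__ import annotations

from datetime import datetime

# Hypothetical field names; real extraction tools use their own keys.
REQUIRED_FIELDS = ("format", "codec", "width", "height", "created", "modified")


def find_metadata_anomalies(meta: dict, reference: dict | None = None) -> list[str]:
    """Return human-readable warnings for a file's metadata.

    `meta` is assumed to hold already-extracted metadata.
    `reference` optionally holds values known from the original footage.
    """
    issues = []

    # 1. Missing or empty fields can indicate that metadata was stripped.
    for field in REQUIRED_FIELDS:
        if meta.get(field) in (None, ""):
            issues.append(f"missing field: {field}")

    # 2. A file should not be modified before it was created.
    created, modified = meta.get("created"), meta.get("modified")
    if created and modified:
        if datetime.fromisoformat(modified) < datetime.fromisoformat(created):
            issues.append("modified timestamp precedes created timestamp")

    # 3. Compare against what is known about the original, if anything.
    for field, expected in (reference or {}).items():
        actual = meta.get(field)
        if actual is not None and actual != expected:
            issues.append(f"{field} is {actual!r}, expected {expected!r}")

    return issues


if __name__ == "__main__":
    suspect = {
        "format": "mp4", "codec": "h264", "width": 1280, "height": 720,
        "created": "2023-12-29T10:00:00", "modified": "2023-12-28T09:00:00",
    }
    for warning in find_metadata_anomalies(suspect, reference={"width": 1920}):
        print(warning)
```

A clean result does not mean the file is genuine, since metadata can be edited or copied from a real file, so this kind of check works best alongside visual inspection and source verification.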

Lastly, it comes down to source verification. If the content is posted on a website by a relatively unknown publisher, it is more likely to be deepfake content and deserves extra scrutiny. Users need to check the credibility and reputation of the publisher. This can be done by comparing the content with other sources or looking for corroborating evidence.
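One simple, partial way to automate the first step of source verification is to check whether a link's domain belongs to a publisher the reader already trusts. The sketch below assumes a hand-maintained allowlist; the `TRUSTED_DOMAINS` set and the `is_trusted_source` function are hypothetical names for this example, and the entries are placeholders rather than a recommended list.

```python
from urllib.parse import urlparse

# Illustrative allowlist only; each reader would maintain their own.
TRUSTED_DOMAINS = {"gov.sg", "example-news.com"}


def is_trusted_source(url: str, trusted=TRUSTED_DOMAINS) -> bool:
    """Return True if the URL's host is a trusted domain or one of its subdomains."""
    host = urlparse(url).hostname
    if not host:
        return False
    host = host.lower()
    # Match the domain exactly or as a suffix preceded by a dot, so that
    # "gov.sg.evil.example" does not pass as "gov.sg".
    return any(host == d or host.endswith("." + d) for d in trusted)


if __name__ == "__main__":
    print(is_trusted_source("https://www.pmo.gov.sg/newsroom"))
    print(is_trusted_source("https://gov.sg.evil.example/video"))
```

A domain check like this only confirms where a link points. It does not replace comparing the claim against other reputable sources.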

At the end of the day, it is important to be critical and cautious when consuming media and to seek reliable and trustworthy sources of information.
