Amazon Web Services (AWS) CEO Adam Selipsky speaks with Anthropic CEO and co-founder Dario Amodei during AWS re:Invent 2023. (Image from AFP).

Competition heats up for OpenAI as rivals raise the bar

- OpenAI continues to face challenges from rivals.

- Anthropic is looking to challenge ChatGPT.

- Chinese tech companies are also hoping to give OpenAI a run for its money.

Ever since OpenAI launched ChatGPT, rivals have been eager to compete. While many have achieved significant milestones in their development, ChatGPT still dominates the market, with most organizations opting for the enterprise versions that cater to their business needs.

Despite this, rival AI chatbot developers around the world have continued to improve their products, offering features that match – or sometimes outperform – ChatGPT.

One of OpenAI’s biggest rivals is Anthropic. Founded in 2021 and now a major player in AI, Anthropic is backed by both Amazon and Google. Valued at around US$18 billion, the AI company is hoping to secure more funding as it looks to be a major challenger to OpenAI.

The company recently announced that its three new AI models – called Claude 3 Opus, Sonnet and Haiku – were its most high-performing tools yet and were industry-leading in terms of their ability to “match human intelligence.”

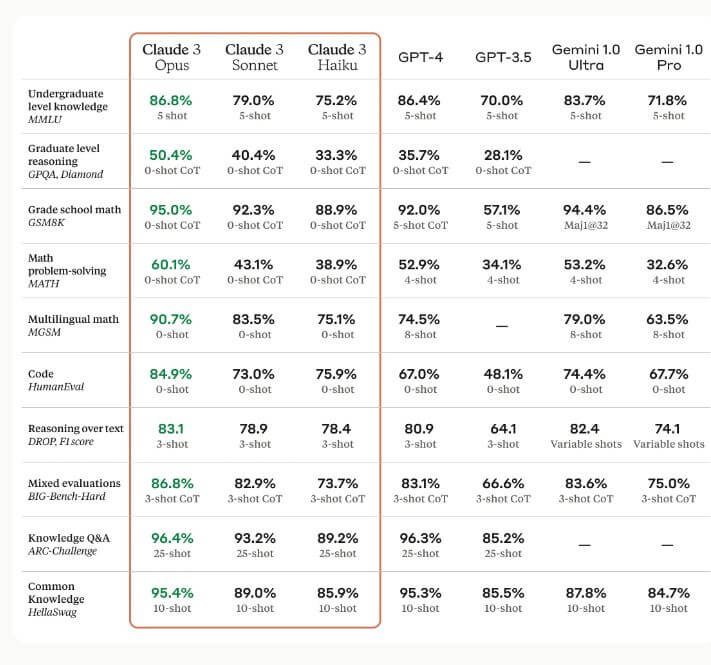

“Opus, our most intelligent model, outperforms its peers on most of the common evaluation benchmarks for AI systems, including undergraduate level expert knowledge (MMLU), graduate-level expert reasoning (GPQA), basic mathematics (GSM8K), and more. It exhibits near-human levels of comprehension and fluency on complex tasks, leading the frontier of general intelligence,” Anthropic announced in a statement.

The company also said that all Claude 3 models show increased capabilities in analysis and forecasting, nuanced content creation, code generation, and conversing in non-English languages like Spanish, Japanese, and French.

The chart shows how the Claude 3 models perform against models from OpenAI and Google's Gemini.

A comparison of the Claude 3 models to those of peers on multiple benchmarks. (Source – Anthropic).

“Businesses of all sizes rely on our models to serve their customers, making it imperative for our model outputs to maintain high accuracy at scale. To assess this, we use a large set of complex, factual questions that target known weaknesses in current models.

“We categorize the responses into correct answers, incorrect answers (or hallucinations), and admissions of uncertainty, where the model says it doesn’t know the answer instead of providing incorrect information. Compared to Claude 2.1, Opus demonstrates a twofold improvement in accuracy (or correct answers) on these challenging open-ended questions while also exhibiting reduced levels of incorrect answers,” explained Anthropic.
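To make the three-way split concrete, here is a minimal Python sketch of how such a tally could work. It is not Anthropic's evaluation code: the category names, the `summarize` helper, and the sample labels are all hypothetical, chosen only so that the arithmetic behind a "twofold improvement in accuracy" is easy to follow.

```python
from collections import Counter

# Hypothetical category names for one graded response each.
CATEGORIES = ("correct", "incorrect", "unsure")


def summarize(labels):
    """Return the share of responses that fall into each category."""
    if not labels:
        raise ValueError("need at least one graded response")
    counts = Counter(labels)
    return {c: counts[c] / len(labels) for c in CATEGORIES}


# Made-up grades for illustration only, not Anthropic's results.
older_model = ["correct"] * 3 + ["incorrect"] * 4 + ["unsure"] * 3
newer_model = ["correct"] * 6 + ["incorrect"] * 2 + ["unsure"] * 2

old_rates = summarize(older_model)
new_rates = summarize(newer_model)

# A "twofold improvement in accuracy" means this ratio is about 2.
accuracy_ratio = new_rates["correct"] / old_rates["correct"]
print(old_rates, new_rates, accuracy_ratio)
```

In this toy data the newer model doubles its share of correct answers while its share of incorrect answers falls, which is the pattern the quote describes.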

On concerns about the model’s accuracy and privacy, Anthropic pointed out that it has several dedicated teams that track and mitigate a broad spectrum of risks, ranging from misinformation and child sexual abuse material (CSAM) to biological misuse, election interference, and autonomous replication skills.

“We continue to develop methods such as Constitutional AI that improve the safety and transparency of our models and have tuned our models to mitigate against privacy issues that could be raised by new modalities. Addressing biases in increasingly sophisticated models is an ongoing effort and we’ve made strides with this new release. We remain committed to advancing techniques that reduce biases and promote greater neutrality in our models, ensuring they are not skewed towards any particular partisan stance,” the company said.


OpenAI: rivals range from ChatGPT to Sora

Another OpenAI product that could soon face challengers is Sora. The text-to-video generator was unveiled recently and has proven to be a hit, given its ability to generate videos from text prompts. While most text-to-video generators normally generate content based on a library of footage they have access to, Sora actually creates new content based on the prompt.

Given the potential of this tool, there are concerns about how it would impact the livelihoods of graphic artists and designers. However, that may not be the only problem as rivals are now also looking to develop their own version of this concept.

According to a report by SCMP, a team of researchers is making a fresh push to develop China’s answer to Sora. Professors from China’s Peking University and Shenzhen-based AI company Rabbitpre jointly launched an Open-Sora plan with a page on GitHub, with a mission to “reproduce OpenAI’s video generation model”.

The Open-Sora plan aims to reproduce a “simple and scalable” version of OpenAI’s video generation model with help from the open source community. OpenAI started a global AI frenzy in late 2022 with the launch of its ChatGPT generative chatbot.

Several other Chinese tech companies have also been developing their own text-to-video AI generators. In January 2024, Tencent AI released an open source video generation and editing toolbox called VideoCrafter2, which is capable of generating videos from text.

Apart from Tencent, TikTok owner ByteDance also released the MagicVideo-V2 text-to-video model. According to the project’s GitHub page, it combines a “text-to-image model, video motion generator, reference image embedding module and frame interpolation module into an end-to-end video generation pipeline.”

Among the roster of OpenAI rivals, Alibaba is also getting in on the action. The tech giant’s Damo Vision Intelligence Lab released ModelScope, also a text-to-video generation model. The model, however, currently supports only English input, and video output is limited to two seconds.
