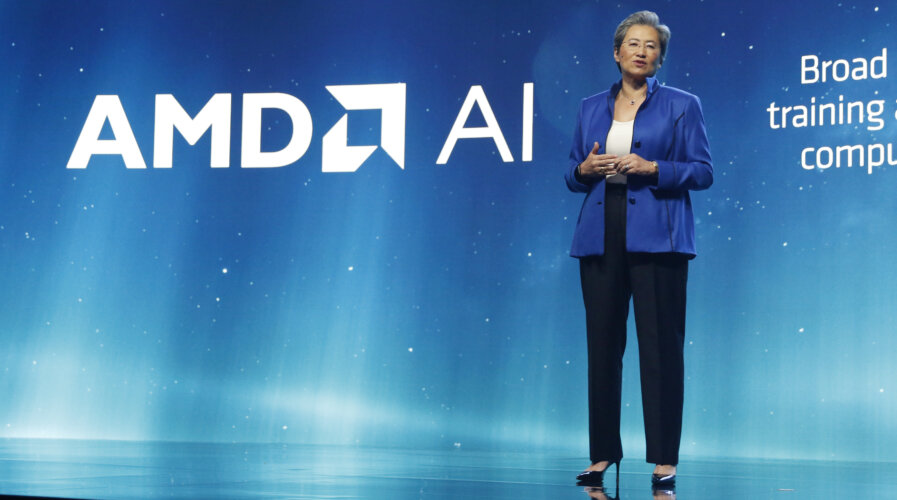

Lisa Su opens up the AMD Data Center Premier event in San Francisco, California, Tuesday, June 13, 2023. (Photo by PaulSakuma.com Photography)

AMD vs Nvidia: Clashing titans in the AI market


- AMD debuts what it calls the world’s most advanced accelerator for generative AI.

- Nvidia still dominates the AI computing market that AMD is targeting.

AMD is gearing up to challenge Nvidia’s dominance of the AI market with a new AI chip, and it plans to ramp up production in the fourth quarter.

During an event in San Francisco, AMD’s CEO Lisa Su unveiled a series of announcements that showcased AMD’s AI Platform strategy. The company aims to provide customers with a comprehensive portfolio of hardware products, ranging from cloud to edge to endpoint, supported by deep collaboration with industry software partners, enabling the development of scalable and pervasive AI solutions.

One of the highlights was the AMD Instinct MI300 Series accelerator family, which AMD touts as the world’s most advanced accelerator for generative AI. The MI300X, built on the AMD CDNA 3 accelerator architecture, supports up to 192 GB of HBM3 memory, giving it the compute and memory efficiency that AMD says makes it well suited to large language model training and inference in generative AI workloads.

With that much memory, a single MI300X can run large language models such as Falcon-40B, a 40-billion-parameter model, on its own. AMD also introduced the AMD Instinct Platform, an industry-standard design that combines eight MI300X accelerators into one scalable, high-performance system for AI inference and training. Su said customers can expect sample chips in the third quarter, with production scaling up by the end of the year. AMD also unveiled the AMD Instinct MI300A, which it describes as the world’s first APU accelerator designed specifically for HPC and AI workloads.
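As a rough check on the single-accelerator claim, the sketch below estimates how much memory a model’s weights alone occupy at a given numeric precision. It assumes 2 bytes per parameter for FP16/BF16 (4 for FP32, 1 for INT8) and counts only the weights, ignoring the KV cache, activations, and framework overhead that real inference also needs. The function and constant names are illustrative, not anything published by AMD.

```python
# Rough, weights-only memory estimate.
# Assumption: ignores KV cache, activations and runtime overhead,
# all of which add to the real memory footprint.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

MI300X_HBM3_GB = 192   # per-accelerator capacity quoted by AMD
GPUS_PER_PLATFORM = 8  # MI300X count in the AMD Instinct Platform


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed to hold the model weights, in decimal GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_mi300x(num_params: float, dtype: str = "fp16") -> bool:
    """True if the weights alone fit in a single MI300X's HBM3."""
    return weight_memory_gb(num_params, dtype) <= MI300X_HBM3_GB


if __name__ == "__main__":
    falcon_40b = 40e9  # parameter count as stated in the article
    for dtype in ("fp32", "fp16", "int8"):
        need = weight_memory_gb(falcon_40b, dtype)
        fits = fits_on_one_mi300x(falcon_40b, dtype)
        print(f"{dtype}: {need:.0f} GB of weights, fits on one MI300X: {fits}")
    total = MI300X_HBM3_GB * GPUS_PER_PLATFORM
    print(f"Instinct Platform total HBM3: {total} GB")
```

At FP16 the 40B weights come to about 80 GB, well under 192 GB, which leaves headroom for the KV cache; eight accelerators together offer 1,536 GB. By the same estimate, a 100-billion-parameter model at FP16 (about 200 GB of weights) would not fit on one card.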

The MI300A is already sampling with customers. Su emphasized that AI will be a lasting driver of demand for silicon, underscoring AMD’s commitment to the field. The eight-MI300X system AMD is building poses a direct challenge to Nvidia’s comparable offerings.

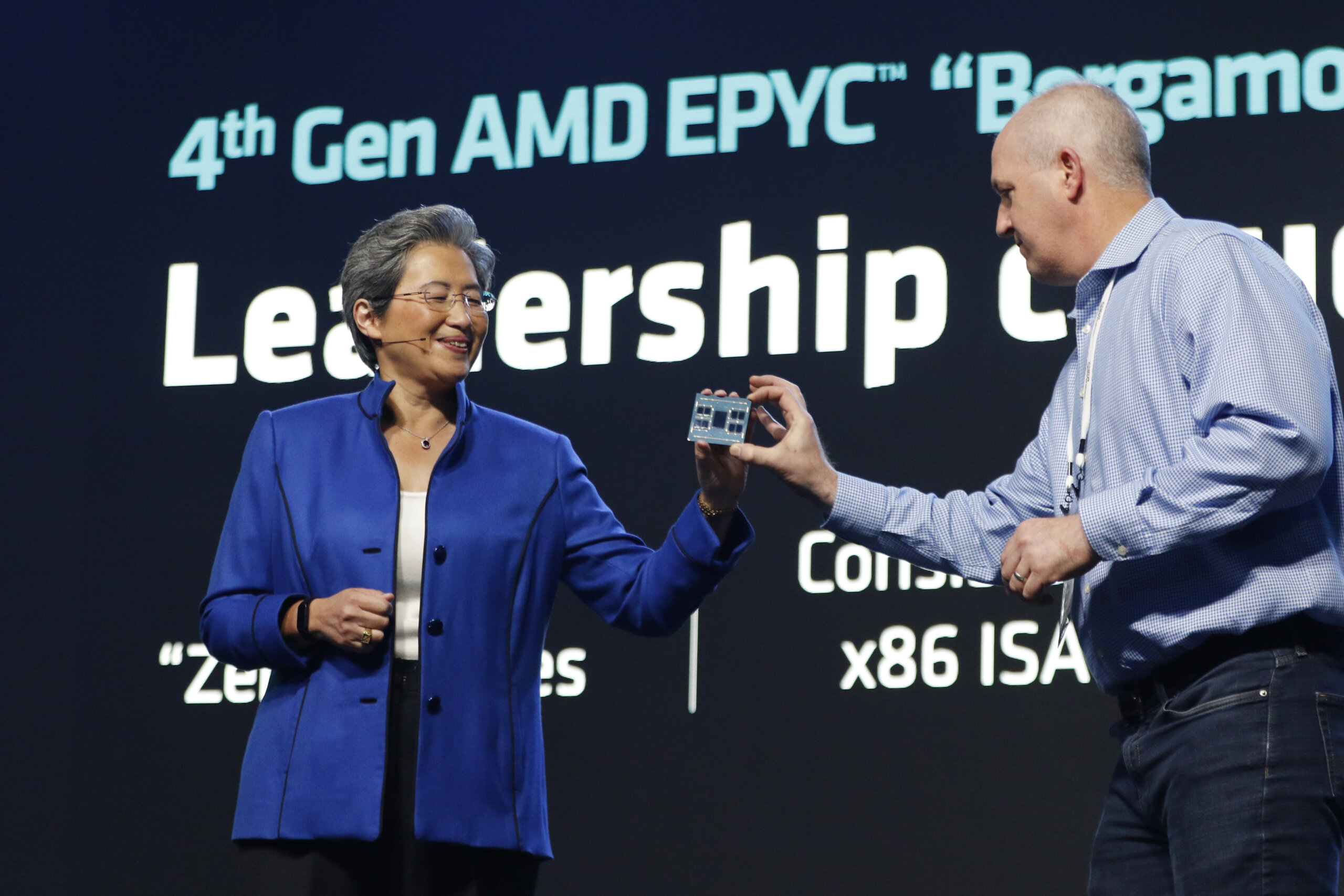

AMD Data Center Premier event in San Francisco, California, Tuesday, June 13, 2023. (Photo by PaulSakuma.com Photography)

The company also announced that it has begun shipping high volumes of its “Bergamo” general-purpose central processor to companies such as Meta Platforms. Bergamo serves a different segment of AMD’s data center business from the MI300: cloud computing providers and other large chip buyers, including Facebook parent Meta, which has adopted the chip.

AMD vs Nvidia – Nvidia is leading the AI market

Nvidia currently holds a dominant position in the AI computing market and recently announced impressive sales, surpassing Wall Street’s estimates. This success is attributed to the surge in demand for generative AI, a technology capable of reshaping industries and driving significant productivity gains, although concerns about job displacement persist.

Nvidia’s position in the market is expected to be further solidified by the H100 chip, based on the new “Hopper” chip architecture. The H100 has generated significant buzz and is highly sought-after in Silicon Valley.

NVIDIA is leading the AI market (Source – Financial Times)

While Nvidia faces limited competition at scale, Intel Corp and startups such as Cerebras Systems (an AI company that builds computer systems for complex deep learning applications) and SambaNova Systems (a leading provider of AI hardware and integrated systems) offer competing products. The bigger threat to Nvidia’s sales, however, comes from Alphabet Inc’s Google and Amazon.com’s cloud unit, both of which rent custom chips to outside developers.

Speaking of Amazon, recent reports suggest the company is considering adopting AMD’s new AI chips. No final decision has been made, but Amazon’s interest fits AMD’s strategy for gaining ground in an AI market that Nvidia currently dominates.

AMD working closely with AWS

AMD aims to win major cloud computing customers with a flexible approach, offering a menu of the components needed to build customized systems for AI-driven services. David Brown, Vice President of Amazon EC2 at AWS, noted that AWS and AMD have collaborated since 2018 and said AWS is open to considering the new MI300 chips for its cloud services.

“We’re still working on where exactly that will land between AWS and AMD, but it’s something that our teams are working together on,” Brown said. “That’s where we’ve benefited from some of the work that they’ve done around the design that plugs into existing systems.”


AWS has its own server design preferences and declined to partner with Nvidia on the DGX Cloud offering, although it did start selling Nvidia’s H100 chip in March. AWS is also exploring how to combine 4th Gen AMD EPYC processors with the AWS Nitro System, with the goal of giving customers better performance across a broader range of Amazon EC2 instances.

AMD disclosed technical specifications for its upcoming AI chip, which could outperform some of Nvidia’s current offerings in certain respects, but investors had been hoping for a flagship customer announcement, and AMD’s stock fell after the event.

Su outlined a strategy to win over major cloud computing customers by offering a menu of the components needed to build systems like those that power ChatGPT. Customers can pick and customize the components they need while still relying on industry-standard connections.


Competition between AMD and Nvidia in the fast-growing AI market is heating up, and as demand for AI solutions rises, the fight for market share is likely to intensify and push both companies to innovate faster.

In summary, AMD is positioning its upcoming MI300 chips and its broader AI Platform strategy, built on scalable hardware and deep collaboration with software partners, as a challenge to Nvidia’s market leadership. Whichever company gains ground, customers stand to benefit from more choice and faster progress in AI computing.
