US President Joe Biden (L) speaks about artificial intelligence (AI), in the Roosevelt Room of the White House in Washington, DC, on July 21, 2023. Reflecting the sense of urgency as AI rips ever deeper into personal and business life, Biden was meeting at the White House with (2nd L to R) Adam Selipsky, CEO of Amazon Web Services; Greg Brockman, President of OpenAI; Nick Clegg, President of Meta; Mustafa Suleyman, CEO of Inflection AI; and Dario Amodei, CEO of Anthropic. (Photo by ANDREW CABALLERO-REYNOLDS / AFP)

Are watermarks enough for responsible AI usage?

- To promote responsible AI usage, seven tech companies agreed to develop “robust technical mechanisms,” such as watermarking systems, so that users know when content is AI-generated rather than human-made.

- Anthropic, Google, Microsoft and OpenAI are launching the Frontier Model Forum, an industry body focused on ensuring safe and responsible development of frontier AI models.

- Authors, writers and actors are protesting the use of AI for generating content based on their original work.

Watermarks in images, text and other content generated by AI may soon be mandatory as tech companies look to ensure responsible AI usage by all. In a meeting with US President Joe Biden, representatives from Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI committed to the safer use of AI.

“These commitments, which the companies have chosen to undertake immediately, underscore three principles that must be fundamental to the future of AI — safety, security, and trust — and mark a critical step toward developing responsible AI,” the White House said in a release.

Concerns about the use of AI have grown in recent months, especially over how content is sourced and generated. Some AI experts have already warned about society becoming too dependent on the technology, while others worry that it could take over their jobs.

“Artificial intelligence promises an enormous promise of both risk to our society and our economy and our national security, but also incredible opportunities — incredible opportunities,” commented the President after meeting the tech leaders last week.

The President also mentioned that the seven tech companies have agreed to voluntary commitments for responsible innovation.

“These commitments, which the companies will implement immediately, underscore three fundamental principles: safety, security, and trust,” said Biden.

The four commitments made by the tech companies include:

- The companies have an obligation to make sure their technology is safe before releasing it to the public. This means testing the capabilities of their systems, assessing their potential risk, and making the results of these assessments public.

- Companies must prioritize the security of their systems by safeguarding their models against cyber threats, managing the risks to national security, and sharing the necessary best practices and industry standards.

- The companies have a duty to earn people’s trust and empower users to make informed decisions by labeling content that has been altered or AI-generated, rooting out bias and discrimination, strengthening privacy protections, and shielding children from harm.

- Companies have agreed to find ways for AI to help meet society’s greatest challenges, from cancer to climate change, and to invest in education and new jobs so that students and workers can prosper from the opportunities AI creates.

“These commitments are real, and they’re concrete. They’re going to help fulfill — the industry fulfill its fundamental obligation to Americans to develop safe, secure, and trustworthy technologies that benefit society and uphold our values and our shared values,” added Biden.

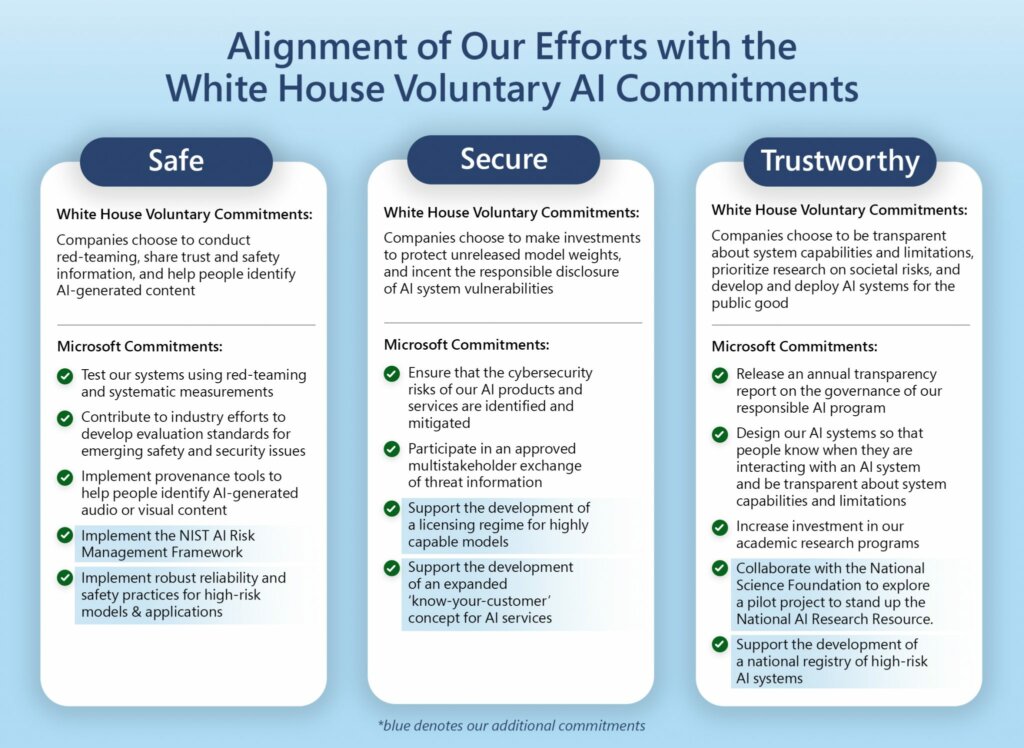

“Guided by the enduring principles of safety, security, and trust, the voluntary commitments address the risks presented by advanced AI models and promote the adoption of specific practices – such as red-team testing and the publication of transparency reports – that will propel the whole ecosystem forward. The commitments build upon strong pre-existing work by the U.S. Government (such as the NIST AI Risk Management Framework and the Blueprint for an AI Bill of Rights) and are a natural complement to the measures that have been developed for high-risk applications in Europe and elsewhere,” explained Brad Smith, Vice Chair and President of Microsoft in a blog post after the discussion with the President.

Microsoft’s additional commitments focus on how it will further strengthen the ecosystem and operationalize the principles of safety, security, and trust. (Source – Microsoft)

Meanwhile, the European Parliament’s recent vote on the European Union’s AI Act, together with the ongoing trilogue discussions, puts the EU at the forefront of establishing a model for guiding and regulating AI technology.

AWS has also stated that these commitments build on the company’s approach to responsible and secure AI development, and they will help pave the way for a future that enhances the benefits of AI and minimizes its risks.

Anthropic, Google, Microsoft and OpenAI have also announced the formation of the Frontier Model Forum, a new industry body focused on ensuring safe and responsible development of frontier AI models. The Frontier Model Forum will draw on the technical and operational expertise of its member companies to benefit the entire AI ecosystem, such as through advancing technical evaluations and benchmarks, and developing a public library of solutions to support industry best practices and standards.

Concerns over the lack of responsible AI

Concern that imagery or audio created by AI will be used for fraud and misinformation has grown as the technology improves and the 2024 US presidential election gets closer.

Social media is already filled with deepfake content that many find increasingly hard to distinguish from the real thing. As generative AI can mimic voice and even body language, ways to tell when audio or imagery has been generated artificially are being sought to prevent people from being duped by fakes that look or sound real.

Recent examples include a deepfake picture of an explosion at the Pentagon, which went viral and briefly disrupted the stock market. Other examples include videos of President Biden and even former US President Donald Trump making statements they never made.

AFP reported that Common Sense Media commended the White House for its “commitment to establishing critical policies to regulate AI technology,” according to the review and ratings organization’s chief executive James Steyer.

“That said, history would indicate that many tech companies do not actually walk the walk on a voluntary pledge to act responsibly and support strong regulations.”

Watermarks for AI-generated content were among the topics EU commissioner Thierry Breton discussed with OpenAI chief executive Sam Altman during a June visit to San Francisco.

“Looking forward to pursuing our discussions — notably on watermarking,” Breton wrote in a tweet that included a video snippet of him and Altman.

In the video clip, Altman said he “would love to show” what OpenAI is doing with watermarks “very soon.”

The White House said it will also work with allies to establish an international framework to govern the development and use of AI.

CEO of Amazon Web Services Adam Selipsky, President of OpenAI Greg Brockman, President of Meta Nick Clegg, CEO of Inflection AI Mustafa Suleyman, CEO of Anthropic Dario Amodei, President of Google Kent Walker and Microsoft vice chair and president Brad Smith, listen as U.S. President Joe Biden gives remarks on Artificial Intelligence in the Roosevelt Room at the White House on July 21, 2023 in Washington, DC. President Biden gave remarks to reporters before attending a meeting with the seven leaders of A.I. companies that Biden said would consist of a discussion of new safeguarding tactics for the developing technology. (Photo by Anna Moneymaker / GETTY IMAGES NORTH AMERICA / Getty Images via AFP)

Watermark, AI and content copyright

“Americans are seeing how advanced artificial intelligence and the pace of innovation have the power to disrupt jobs and industries. These commitments are a promising step, but we have a lot more work to do together. Realizing the promise of AI by managing the risk is going to require some new laws, regulations, and oversight,” said Biden.

In the US, the implementation of generative AI could mean sweeping changes for the entertainment industry. This is one of the reasons why more than 11,000 film and television writers remain on strike and are now joined by thousands of film and television actors as well.

Apart from low salaries and the risk of losing their jobs to AI, many in the industry also feel that generative AI is merely picking up content that was originally created by them. Put simply, the underlying material may still be a writer’s work, only modified and regenerated by the AI.

There are already films whose visuals have been generated entirely by AI. According to a report by MIT Technology Review, The Frost is a 12-minute movie in which every shot is generated by an image-making AI. Waymark, the Detroit-based video creation company behind The Frost, took a script written by an executive producer at the company, who also directed the film, and fed it to OpenAI’s DALL-E 2. DALL-E 2 is a deep learning model developed by OpenAI to generate digital images from natural language descriptions.

The report stated that after some trial and error to get the model to produce images in a style the filmmakers were happy with, they used DALL-E 2 to generate every single shot. Then they used D-ID, an AI tool that can add movement to still images, to animate these shots, making eyes blink and lips move.

This is where the concerns of AI taking over jobs come in. If the technology is able to generate an entire film without the need of a film crew, there is no stopping it from even creating characters based on real-life actors and using them in the film without having to pay them.

For example, a film studio planning a sequel does not need to bring the same actor back. All it needs to do is generate the actor’s character digitally, from style to voice, and insert it into the film. The rights to that character belong to the film studio and not the actor.

So where do watermarks fit into this? How can individuals, be they scriptwriters or even actors, watermark their work, voice or appearance?

In a report by The Guardian, the National Association of Voice Actors, an advocacy group, insists that it is not anti-technology or anti-AI but is calling for stronger regulation. It warns that it is increasingly hard to know when and where synthesized voices are replacing humans in audiobooks, video games and other media.

Examples include the voices of celebrity chef Anthony Bourdain and artist Andy Warhol, which were posthumously recreated by AI in the documentaries Roadrunner and The Andy Warhol Diaries.

At the same time, more than 8,500 authors have signed a letter submitted by the Authors Guild urging technology companies not to use their copyrighted work to train artificial intelligence systems unless those companies are willing to pay them for the usage. US comedian Sarah Silverman and two other authors have already sued OpenAI over copyright infringement.

Should written content in books be used freely by AI? (Photo by JOE RAEDLE / GETTY IMAGES NORTH AMERICA / Getty Images via AFP)

Responsible AI for developers

In the open-source community, trust that work will be used responsibly is part of what encourages developers to share their ideas and programs. However, many are concerned about how generative AI tools are picking up code and using it without acknowledging the source or ownership.

In fact, concerns have been growing about GitHub Copilot, the Microsoft-owned coding assistant, amid claims that it strips code of its licenses. This means developers who use it run the risk of unwittingly violating copyright. It also creates a problem for companies, which could end up exposed to lawsuits, particularly from open-source advocacy groups.

So how can a watermark be added to code? It is not as simple as it sounds. As long as there is no proper way of acknowledging or crediting the source, AI is pretty much just recycling ideas creatively for now. The technology will only get better, and everyone will eventually need to decide how much AI they really want to use.
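One partial, low-tech answer to the attribution problem is a machine-readable license tag kept in each source file, so that tooling can at least flag when that tag goes missing from a copy. The sketch below is illustrative only and is not how any particular AI coding tool works. It assumes the `SPDX-License-Identifier` comment convention, and the function names `find_license_tag` and `license_was_stripped` are hypothetical.

```python
import re

# Assumed convention: a comment near the top of the file of the form
# "SPDX-License-Identifier: <license expression>".
SPDX_TAG = re.compile(
    r"SPDX-License-Identifier:\s*"
    r"([A-Za-z0-9.+\-]+(?:\s+(?:AND|OR|WITH)\s+[A-Za-z0-9.+\-]+)*)"
)


def find_license_tag(source: str, max_lines: int = 20):
    """Return the license expression found in the first lines, or None."""
    for line in source.splitlines()[:max_lines]:
        match = SPDX_TAG.search(line)
        if match:
            return match.group(1)
    return None


def license_was_stripped(original: str, copy: str) -> bool:
    """True if the original carried a tag and the copy lacks that same tag."""
    original_tag = find_license_tag(original)
    return original_tag is not None and find_license_tag(copy) != original_tag


if __name__ == "__main__":
    original = "# SPDX-License-Identifier: MIT\nprint('hello')\n"
    copy = "print('hello')\n"
    print(find_license_tag(original))
    print(license_was_stripped(original, copy))
```

A check like this only detects a missing tag when the original file is known. It does nothing to prove where a snippet came from, which is why watermarking code remains an open problem.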

For now, while the suggestions made by the tech companies are definitely in the right direction, the reality is, there is still a lot to be done when it comes to the responsible use of AI.
