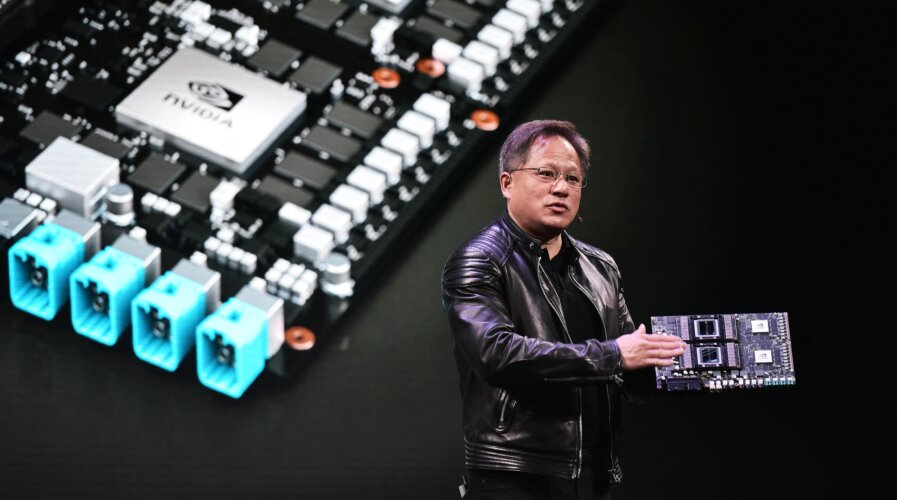

Buyers in China are resisting Nvidia’s shift to less powerful AI chips, a move made in response to export restrictions imposed by the US. (Photo by MANDEL NGAN / AFP)

Spiking demand for AI chips could lead to global revenue of US$53bn this year

- Chips designed to execute AI workloads will represent a US$53.4 billion revenue opportunity for the semiconductor industry in 2023.

- Gartner forecasts that AI chip revenue will continue to grow at double-digit rates, increasing 25.6% in 2024.

- By the end of 2023, the value of AI-enabled application processors used in devices will amount to US$1.2 billion, up from US$558 million in 2022.

Earlier this week, we wrote about Nvidia Corp and its role in supplying most of the AI chips in use today. What is clear is that AI requires a lot of computing power, so demand for semiconductors is surging with the rise of ChatGPT and other generative AI services. Fortunately for Nvidia, that means the world needs more of its AI chips.

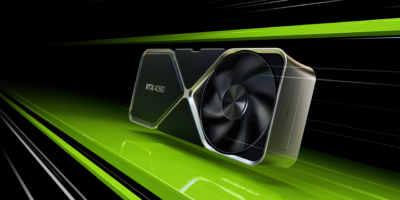

Nvidia’s AI chips, commonly known as graphics processing units (GPUs), have powered both the training of AI models such as the one behind ChatGPT and inference, the stage at which a trained model generates answers to user queries. Those high-end GPUs face a massive supply crunch amid skyrocketing demand, even as shortages across most other chip categories begin to ease.

How ChatGPT runs.

But others want a slice of the pie, and a growing number of companies such as AMD are attempting to gain a foothold and “chip away” at Nvidia’s dominance. Over the years, start-ups have also claimed to outperform certain Nvidia products on particular kinds of workloads, including training the large language models that power chatbots such as ChatGPT and other generative AI systems capable of producing humanlike text and realistic imagery.

Still, AI researchers, and the start-ups that are turning their research into commercial products, overwhelmingly prefer Nvidia’s technology. Meanwhile, AI chips have become one of the hottest sectors in the technology industry: Gartner forecasts that semiconductors designed to execute AI workloads will represent a US$53.4 billion revenue opportunity for the entire semiconductor industry this year.

That’s a 20.9% increase from 2022, according to the latest forecast from Gartner. “The developments in generative AI and the increasing use of a wide range [of] AI-based applications in data centers, edge infrastructure, and endpoint devices require the deployment of high-performance graphics processing units (GPUs) and optimized semiconductor devices,” said Alan Priestley, VP Analyst at Gartner.

By 2027, AI chips revenue is forecast to more than double.

“This is driving the production and deployment of AI chips,” Priestley added. Gartner expects AI chip revenue to keep growing at double-digit rates throughout the forecast period, rising 25.6% in 2024 to US$67.1 billion. “By 2027, AI chips revenue is expected to be more than double the size of the market in 2023, reaching US$119.4 billion,” Gartner noted.
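A few lines of arithmetic show that Gartner’s figures hang together. The sketch below uses only the numbers reported above (US$53.4 billion for 2023, 20.9% growth over 2022, 25.6% growth in 2024, US$67.1 billion for 2024 and US$119.4 billion for 2027). The implied 2022 base and the implied compound annual growth rate from 2023 to 2027 are our own derived calculations, not figures published by Gartner.

```python
# Figures as reported by Gartner (US$ billions); everything else below is derived.
revenue_2023 = 53.4      # 2023 forecast
growth_2023 = 0.209      # 20.9% increase over 2022
growth_2024 = 0.256      # 25.6% increase in 2024
reported_2024 = 67.1     # reported 2024 forecast
reported_2027 = 119.4    # reported 2027 forecast

# Derived: the 2022 base implied by the 2023 figure and its growth rate.
implied_2022 = revenue_2023 / (1 + growth_2023)

# Check: applying 25.6% growth to the 2023 figure should reproduce the 2024 forecast.
computed_2024 = revenue_2023 * (1 + growth_2024)

# Derived: how many times larger 2027 is than 2023, and the compound
# annual growth rate (CAGR) over those four years.
multiple_2027 = reported_2027 / revenue_2023
implied_cagr = multiple_2027 ** (1 / 4) - 1

print(f"Implied 2022 revenue: US${implied_2022:.1f}bn")
print(f"Computed 2024 revenue: US${computed_2024:.1f}bn (reported: US${reported_2024}bn)")
print(f"2027 vs 2023: {multiple_2027:.2f}x")
print(f"Implied CAGR 2023-2027: {implied_cagr:.1%}")
```

On these numbers, the 2027 forecast works out to about 2.2 times the 2023 market, which corresponds to compound growth of roughly 22% a year.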

What will push the AI chips market higher?

As AI-based workloads in the enterprise mature, many more industries and IT organizations are likely to deploy systems that include AI GPUs. In the consumer electronics market, Gartner analysts estimate that by the end of 2023, the value of AI-enabled application processors used in devices will amount to US$1.2 billion, up from US$558 million in 2022.

Gartner predicts strong revenue in the sector for this year – and next.

There will also be a growing need for efficient, optimized designs that support the cost-effective execution of AI-based workloads, which will increase deployments of custom-designed AI chips. Business appetite for AI is rising too: in a recent Gartner poll of more than 2,500 executive leaders, 45% said the publicity surrounding ChatGPT had prompted them to increase their AI investments.

“For many organizations, large-scale deployments of custom AI chips will replace the current predominant chip architecture – discrete GPUs – for a wide range of AI-based workloads, especially those based on generative AI techniques,” Priestley said.

The AI community just loves Nvidia’s GPUs.

Generative AI in particular is driving demand for high-performance computing systems for development and deployment, and many vendors that offer high-performance GPU-based systems and networking equipment are seeing significant near-term benefits. In the long term, as hyperscalers look for efficient and cost-effective ways to deploy these applications, Gartner expects an increase in their use of custom-designed AI chips.
