VMware and NVIDIA extend partnership to unveil a new generative AI solution.

VMware Explore: VMware and NVIDIA to offer Private AI for enterprises

- VMware unveils the VMware Private AI Foundation with NVIDIA at VMware Explore.

- The platform will enable enterprises to customize models and run generative AI applications, such as intelligent chatbots, assistants, search, and summarization.

- The platform will feature NVIDIA NeMo, an end-to-end, cloud-native framework included in NVIDIA AI Enterprise.

With generative AI on the agenda for almost every business today, it’s hardly surprising that VMware has decided to join the trend. After all, even before the rise of generative AI, VMware was already a leader in AI technology.

Virtual machines (VMs) and AI are distinct technologies. VMs run multiple operating systems simultaneously on the same hardware, whereas AI processes and interprets vast amounts of data. Over the years, VMware has found ways to introduce and integrate various AI solutions into its offerings.

For example, in 2021, VMware and NVIDIA partnered to unlock the power of AI for all businesses by delivering an end-to-end enterprise platform optimized for AI workloads. The integrated platform provided the NVIDIA AI Enterprise suite, optimized and exclusively certified to run on VMware vSphere.

This platform not only accelerated the rate at which developers could create AI and high-performance data analytics for businesses, but also allowed organizations to scale modern workloads on the existing VMware vSphere infrastructure. Additionally, it ensured enterprise-level manageability, security, and availability.

A Tweet of the launch of VMware Private AI Foundation with NVIDIA.

Introducing VMware Private AI Foundation with NVIDIA

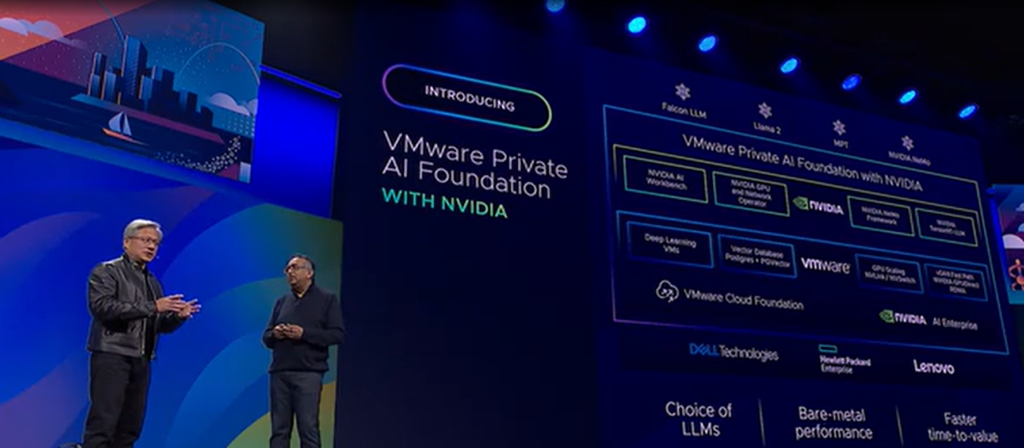

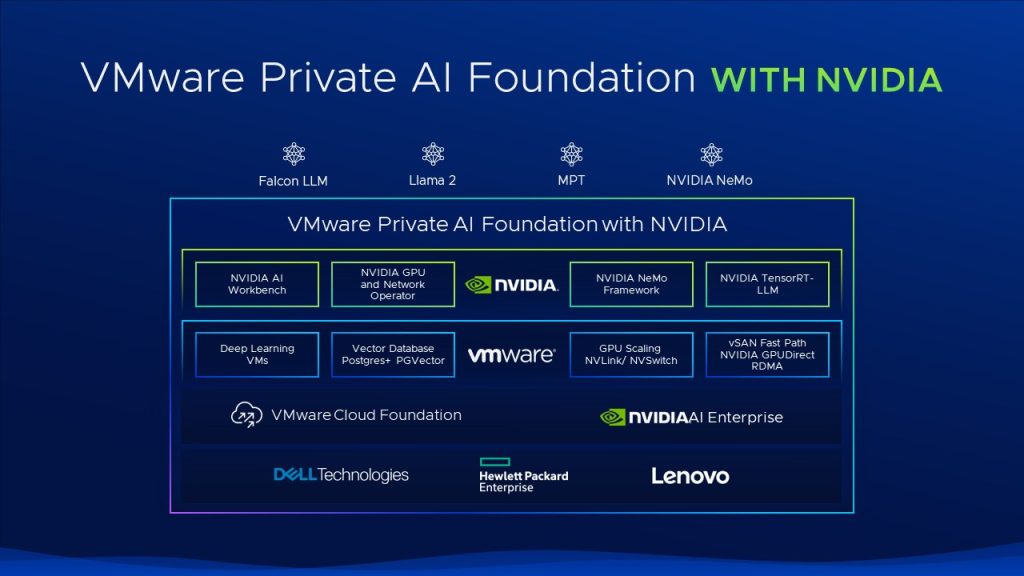

Today, VMware and NVIDIA are expanding their strategic partnership for the era of generative AI. Announced at VMware Explore 2023, the VMware Private AI Foundation with NVIDIA will allow enterprises to customize models and run generative AI applications, such as intelligent chatbots, assistants, search, and summarization. The platform will be a comprehensive solution, featuring generative AI software and accelerated computing from NVIDIA, all built on the VMware Cloud Foundation and optimized for AI.

VMware also unveiled the VMware Private AI Reference Architecture for Open Source, designed to support customers in achieving their desired AI outcomes by using the best available open-source software (OSS) technologies, both now and in the future.

Raghu Raghuram, CEO of VMware, believes that the multi-cloud environment lays the foundation for this new class of AI-powered applications, as it makes it easier to harness private yet widely distributed data. Many enterprises wish to utilize generative AI but are concerned about the potential challenges and risks associated with using public AI models.

While several tech companies offer businesses opportunities to develop their own AI applications, many still face uncertainties about the process. Some have even attempted to create their own models, only to incur higher costs and lose time.

The CEOs of NVIDIA and VMware discuss the new generative AI offering.

To address these concerns, VMware AI Labs developed VMware Private AI. This solution brings compute capacity and AI models directly to where enterprise data is created, processed, and consumed, whether that’s in a public cloud, an enterprise data center, or at the edge.

Put simply, with these new offerings, VMware aims to help customers combine the flexibility and control required to power a new generation of AI-enabled applications that will help dramatically increase worker productivity, ignite transformation across major business functions, and drive economic impact.

“The remarkable potential of generative AI cannot be unlocked unless enterprises are able to maintain the privacy of their data and minimize IP risk while training, customizing, and serving their AI models. With VMware Private AI, we are empowering our customers to tap into their trusted data so they can build and run AI models quickly and more securely in their multi-cloud environment,” said Raghuram.

Chris Wolf, vice president of VMware AI Labs, emphasized that the new VMware Private AI offerings aim to democratize the future of AI for everyone in the enterprise, bringing the choice of compute and AI models closer to the data.

“Our Private AI approach benefits enterprise use cases ranging from software development and marketing content generation to customer service tasks and pulling insights from legal documents,” he added.

Jensen Huang, founder and CEO of NVIDIA, commented that the company’s expanded collaboration with VMware would equip countless customers across various sectors, like financial services, healthcare, and manufacturing, with the comprehensive software and computing resources needed to fully make use of generative AI through custom applications developed with their proprietary data.

The platform will include the NVIDIA NeMo™ framework, NVIDIA LLMs, and other community models (such as Hugging Face models) running on VMware Cloud Foundation. (Source – VMware)

The need for Private AI

As the VMware Private AI Foundation with NVIDIA is set to provide AI tools that allow enterprises to operate models on their private data, expected benefits include:

- Privacy – Will enable customers to easily run AI services adjacent to wherever they have their data, with an architecture that preserves data privacy and enables secure access.

- Choice – Enterprises will have a wide choice in where to build and run their models, from NVIDIA NeMo to Llama 2 and beyond, including leading OEM hardware configurations and, in the future, on public cloud and service provider offerings. NVIDIA NeMo is an end-to-end, cloud-native framework included in NVIDIA AI Enterprise that allows enterprises to build, customize and deploy generative AI models virtually anywhere.

- Performance – Running on NVIDIA accelerated infrastructure will deliver performance equal to and even exceeding bare metal in some use cases, as proven in recent industry benchmarks.

- Data center scale – GPU scaling optimizations in virtualized environments will enable AI workloads to scale across up to 16 vGPUs/GPUs in a single virtual machine and across multiple nodes to speed generative AI model fine-tuning and deployment.

- Lower cost – Will maximize usage of all compute resources across GPUs, DPUs and CPUs to lower overall costs, and create a pooled resource environment that can be shared efficiently across teams.

- Accelerated storage – VMware vSAN Express Storage Architecture will provide performance-optimized NVMe storage and support GPUDirect® Storage over RDMA, allowing for direct I/O transfer from storage to GPUs without CPU involvement.

- Accelerated networking – Deep integration between vSphere and NVIDIA NVSwitch technology will further enable multi-GPU models to execute without inter-GPU bottlenecks.

- Rapid deployment and time to value – vSphere Deep Learning VM images and image repository will enable fast prototyping capabilities by offering a stable turnkey solution image that includes frameworks and performance-optimized libraries pre-installed.

VMware Private AI Foundation with NVIDIA will receive support from tech giants like Dell Technologies, Hewlett Packard Enterprise, and Lenovo. These companies will be among the first to offer systems that power enterprise LLM customization and inference workloads using NVIDIA L40S GPUs, NVIDIA BlueField-3 DPUs and NVIDIA ConnectX®-7 SmartNICs. The solution is slated for release in early 2024.

Explaining the ongoing importance of AI for everyone.
