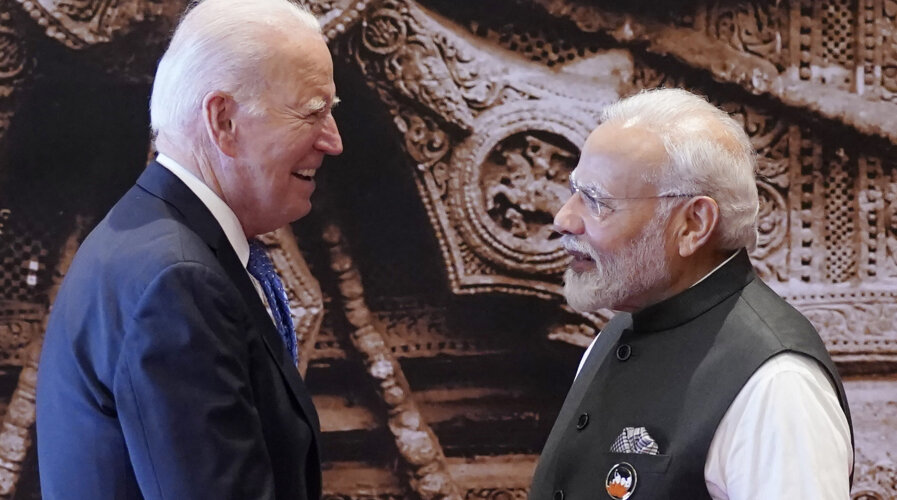

India’s Prime Minister Narendra Modi (R) greets US President Joe Biden upon his arrival ahead of the G20 Leaders’ Summit in New Delhi on September 9, 2023. (Photo by Evan Vucci / POOL / AFP)

Can G20 leaders dictate the future of AI?

• The future of AI depends on many factors, including regulation.

• Can the G20 agree on how the future of AI should look?

• The final communiqué was business-positive, but underlined the need for regulation.

Who will determine the future of AI?

That’s a more complex question than it might seem. For a start, it is definitely not solely in the hands of tech companies or businesses. While tech companies and businesses drive the demand for AI, it will be up to regulators set up by the world’s governments to dictate how the technology is ultimately used.

2023 has already witnessed significant hype surrounding generative AI. But if we go back a few years, to when AI was making its mark mainly through facial recognition solutions, its future already looked uncertain. In fact, the bias produced by those AI algorithms ended up creating more problems than the technology solved.

Today, generative AI is beginning to change that. Not only can the technology solve far more problems than earlier forms of AI, but it can also be readily adapted to many use cases across different industries around the world.

Initially, this AI revolution was welcomed with open arms by businesses, who continue to see the potential in generative AI. Studies by research companies like Gartner, McKinsey, and IDC suggest that the benefits of generative AI outweigh its potential drawbacks. Business leaders themselves are amazed at the technology and have channeled more funds toward implementing generative AI solutions at work.

While some companies have voiced concerns about the security of generative AI, especially how it sources and uses data to generate its output, more companies are still pressing full steam ahead with it.

That is, until governments stepped in.

Globally, governments are increasingly taking a cautious approach to letting businesses use AI. In addition to concerns about data and information security, they see the need for adequate regulations to limit AI’s negative impact on society and the workforce.

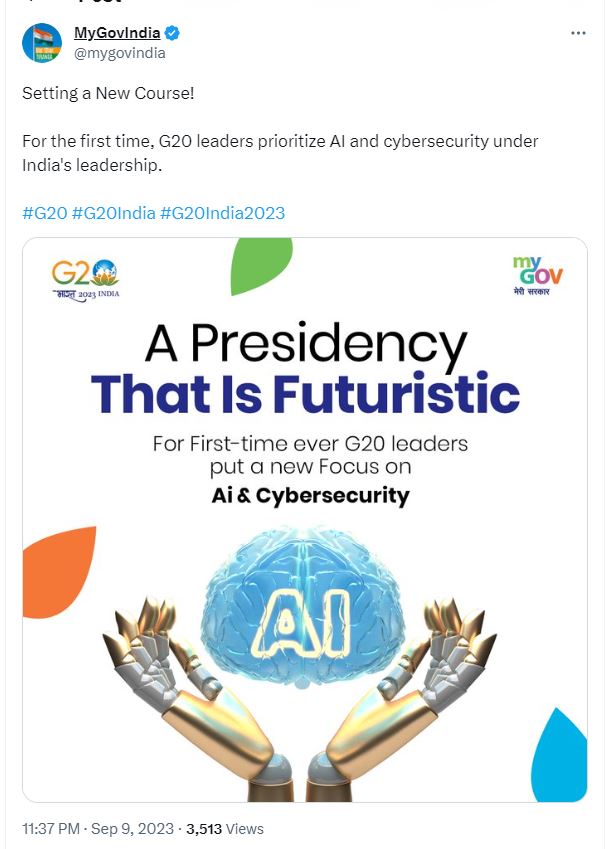

G20 leaders to focus on the future of AI and cybersecurity at the Summit.

It’s all about regulations

In the US, tech companies have agreed to work together to develop AI responsibly, following growing calls for regulation. Global tech leaders have held ongoing discussions with the White House on how they plan to ensure that AI does not become a problem for society or harm the workforce.

Recently, tech companies agreed to add digital watermarks to AI-generated content to inform users of its source and prevent copyright infringement.

In China, the government already regulates most of the country’s tech sector, so it comes as no surprise that AI developments must also meet regulatory requirements before release. China has already banned the use of OpenAI’s ChatGPT, while local tech companies such as Tencent and Baidu have been developing their own generative AI models in line with the regulations in place.

Meanwhile in Southeast Asia, Singapore has created the world’s first AI testing toolkit called AI Verify. AI Verify allows users to conduct technical tests on their AI models and record process checks. The aim of AI Verify is to promote the responsible use of AI.

In Europe, the European Union’s AI Act is set to become the first comprehensive regulatory framework for AI. Acknowledging the need to regulate the future of AI, the proposed law would require generative AI systems to be reviewed before commercial release.

India’s Prime Minister Narendra Modi (center R) addresses the second session ‘One Family’ of the G20 summit in New Delhi on September 9, 2023. (Photo by EVELYN HOCKSTEIN / POOL / AFP)

G20 leaders discuss the future of AI

Despite the number of regulations already in place or in the pipeline, concerns about the future of AI remain, especially when it comes to producing unbiased results. Because generative AI is only as good as the data it is trained on and exposed to, there are concerns that some use cases could end up causing more harm than good.

At the recent G20 Summit in India, world leaders discussed both the economic potential of AI and the importance of safeguarding human rights. Some leaders have even called for global oversight of the technology.

According to a report by The Times of India, Indian Prime Minister Narendra Modi highlighted the need for AI regulation to go beyond the G20 AI Principles adopted in 2019.

“I suggest we establish a framework for responsible human-centric AI governance. India will also give its suggestions. It will be our effort that all countries get the benefit of AI in areas like socio-economic development, global workforce and research and development,” said the Prime Minister at the summit.

European Commission President Ursula von der Leyen echoed Modi’s remarks, suggesting an oversight body similar to the Intergovernmental Panel on Climate Change.

“It is telling that even the makers and inventors of AI are calling on political leaders to regulate,” she said, as reported by Bloomberg.


Here is what the G20 leaders concluded on AI in their final communiqué:

“The rapid progress of AI promises prosperity and expansion of the global digital economy. It is our endeavor to leverage AI for the public good by solving challenges in a responsible, inclusive and human-centric manner while protecting people’s rights and safety.

To ensure responsible AI development, deployment and use, the protection of human rights, transparency and explainability, fairness, accountability, regulation, safety, appropriate human oversight, ethics, biases, privacy, and data protection must be addressed.

To unlock the full potential of AI, equitably share its benefits and mitigate risks, we will work together to promote international cooperation and further discussions on international governance for AI. To this end, we:

- Reaffirm our commitment to G20 AI Principles (2019) and endeavor to share information on approaches to using AI to support solutions in the digital economy.

- Will pursue a pro-innovation regulatory/governance approach that maximizes the benefits and takes into account the risks associated with the use of AI.

- Will promote responsible AI for achieving SDGs.”

This statement aligns with the agreement reached by the Group of Seven (G7) leaders in May 2023. Further discussions on AI’s future and regulation are anticipated at the UK’s first global AI summit, which is expected to be attended by the US President and global tech leaders.
