The goal for Snowflake is to make advanced AI accessible to a broader range of customers, including small and medium enterprises without deep expertise in the subject. (Image – Shutterstock).

Snowflake has the perfect data platform for AI

• The Snowflake AI approach opens up generative AI to companies with all levels of understanding.

• By democratizing technology, the company hopes to move the industry along.

• Snowflake has always taken a problem-solving approach to the application of technology, and generative AI is no different.

Exponential data growth requires a data platform capable of not only understanding the data but also maximizing its potential. Given today’s importance of data-driven insights, businesses need to ensure they have access to the right type of data to inform their decisions.

But managing all this data is a challenging and complex process. Challenges include not only regulatory and compliance requirements but also the difficulty of accessing all the necessary data.

According to Sanjay Deshmukh, senior regional vice president of ASEAN and India at Snowflake, the company’s founders were motivated to create a modern data platform due to the inadequacies of existing technologies at the time. Legacy data platforms, especially big data solutions like Hadoop, failed to fulfill their promises, primarily due to their complexity in both construction and maintenance. This complexity hindered customers from becoming truly data-driven and democratizing access to data.

Snowflake identified and aimed to address the top challenges in data management, including legacy systems, silos, cost, and complexity. Data in silos remained a significant problem as it continued to be segregated despite the promises of big data. This segregation was partly due to technological limitations and internal organizational controls. The costs and complexities associated with traditional systems led to delays in obtaining insights. The lack of innovation in AI, ML, data collaboration, and monetization called for a solution.

To address these issues, Snowflake made two crucial decisions. Firstly, its platform had to be a cloud service to tackle scale, complexity, and cost issues. Secondly, it had to be a fully managed service to reduce the time to insights. This approach led to the creation of a platform that disrupted the entire data warehousing industry. By separating compute and storage and offering a consumption model, Snowflake’s platform allows users to store and access vast amounts of data at high speed. It’s a platform and an approach that transformed the industry.

Data silos remained a significant problem, as data stayed segregated despite the promises of big data. (Image generated by AI).

Snowflake then recognized the growing need for external data and the limitations of existing methods. The company introduced a marketplace within its cloud architecture to address this, enabling secure and efficient data collaboration. This development marked the second phase of the company’s evolution, addressing the demand for external data and enhancing collaboration across different platforms globally.

The third phase of Snowflake’s growth, which aligns with the expansion of data and the need for a modern data platform, involved empowering customers to build analytical applications. This phase focused on creating an environment conducive to the seamless development of insight-driven applications. The native app framework and other capabilities facilitated the efficient delivery of insights to end-users.

The fourth and current phase centers on disrupting the generative AI and large language model (LLM) space to democratize access to these technologies. This phase aims to make advanced AI accessible to a broader range of customers, including small and medium enterprises lacking deep expertise. It represents a commitment to making LLMs genuinely accessible and beneficial to companies globally.

Deshmukh highlighted the company’s expansion over the last five years in the Asia-Pacific region, beginning with Australia, and then moving to Japan and Singapore. The demand for its platform has surged across all sectors in many countries, leading to a strong regional presence. Snowflake’s recent conference in Malaysia underscored the increasing demand for data solutions and the company’s dedication to meeting the diverse needs of different industries.

Snowflake’s recent conference in Malaysia.

Snowflake and the perfect data platform for AI

In 2023, the spotlight is on generative AI, a trend triggered by the launch of ChatGPT. Unlike previous innovations, generative AI has quickly become a topic in boardrooms, prompting CEOs and CIOs to strategize how their companies can benefit from this new technology. While individual consumers may not require a specific generative AI strategy, enterprises must consider potential risks associated with customer data, intellectual property, and competitive markets.

For Deshmukh, the advice to enterprise customers is clear: before jumping on the generative AI bandwagon, establish a robust data strategy as a foundational step. The company recognizes that data powers AI and emphasizes the need for a well-thought-out data platform and governance framework. This two-step approach involves creating a solid data foundation and enabling generative AI.

Sanjay Deshmukh, senior regional vice president, ASEAN and India at Snowflake.

The initial step in building this foundation involves consolidating data in one place, creating a single source of truth. Here, Deshmukh highlights Snowflake’s capability to support any scale and data type, allowing customers to store structured, semi-structured, and unstructured data in one location. This seemingly simple task is challenging for customers accustomed to data spread across multiple systems.

The second crucial aspect involves implementing a governance framework, which includes identifying personally identifiable information (PII) and determining how to protect this sensitive data. Depending on its sensitivity, protection methods for PII may include complete encryption, tokenization, or role-based access.
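As a rough illustration of how such a framework might assign a protection method per column, here is a minimal Python sketch. It is not Snowflake’s implementation: the policy table, role names, key, and helper functions are all hypothetical, and only tokenization and role-based masking are shown (encryption is left out because it needs proper key management).

```python
import hashlib
import hmac

# Hypothetical policy: which protection method applies to which column.
POLICY = {"email": "tokenize", "phone": "role_based", "name": "none"}

# Hypothetical roles allowed to see role-protected columns in the clear.
PRIVILEGED_ROLES = {"compliance_officer"}

# Demo key only; a real deployment would fetch this from a key manager.
SECRET_KEY = b"demo-key-not-for-production"


def tokenize(value: str) -> str:
    """Return a deterministic keyed token so joins still work on the column."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def protect_row(row: dict, role: str) -> dict:
    """Apply the policy to every column of a row for the given role."""
    protected = {}
    for column, value in row.items():
        method = POLICY.get(column, "none")
        if method == "tokenize":
            protected[column] = tokenize(value)
        elif method == "role_based":
            visible = role in PRIVILEGED_ROLES
            protected[column] = value if visible else "***MASKED***"
        else:
            protected[column] = value
    return protected


if __name__ == "__main__":
    customer = {"name": "Aisha", "email": "aisha@example.com", "phone": "012-3456789"}
    print(protect_row(customer, role="analyst"))
    print(protect_row(customer, role="compliance_officer"))
```

Because every read goes through the same `protect_row` function, the rule is applied identically to each user and use case, which is the consistency the framework depends on.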

The third and most vital step is ensuring that the governance framework is applied consistently to every user and use case. Deshmukh pointed out that data is often moved out of the governed environment for specific tasks, putting it at risk of compromise.

“It is extremely important that you not just put in the governance framework, but you ensure that you do not silo the data again, because the moment you silo it, your governance rule framework is going to get defeated. This is our recommendation to our clients, in terms of building a comprehensive data strategy and a governance framework for AI,” said Deshmukh.

Deshmukh presented Snowflake as a solution that supports all workloads. He explained that with Snowflake, clients do not need to extract data for various processes such as data warehousing, data lakes, data engineering, and AI/ML model building. Snowflake’s approach ensures that data stays secure within its governed environment. In this setup, data models and applications are brought to the data, a significant departure from traditional models where data is typically moved towards the applications.

“Our approach resonates well with customers, emphasizing the significance of a comprehensive data strategy and governance framework before delving into generative AI. By prioritizing data organization, security, and accessibility, enterprises can lay a strong foundation for leveraging the capabilities of generative AI technologies,” Deshmukh added.

Snowflake eliminates the need for clients to pull data out for various processes like data warehousing, data lakes, data engineering, and AI/ML model building. (Image – Shutterstock).

The three principles for a generative AI strategy

Deshmukh outlined three key principles for implementing generative AI effectively. The first is that data should not be sent to the model; instead, the model and AI applications should be brought to the data. This is crucial to maintaining customer trust: if a foundation model is hosted externally and data is sent to it, the model could end up being trained on sensitive information, benefiting others and eroding that trust.

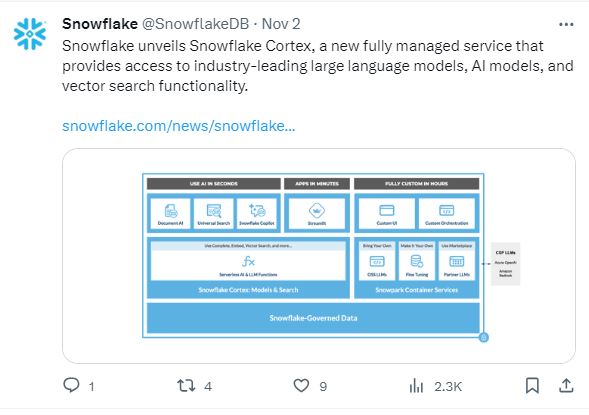

The second principle underscores the importance of training Large Language Models (LLMs) on data relevant to the business. The model might provide inaccurate or irrelevant answers without proper context and training on the company’s data. Snowflake’s recently launched AI platform, Cortex, adheres to this principle by letting customers bring their LLMs and run them alongside their data within the secure Snowflake perimeter.

The third and critical principle emphasizes a business-centric approach to generative AI. Instead of succumbing to the hype and seeking problems to solve with the technology, businesses should identify existing challenges they must address.

For instance, a manufacturing company aiming to enhance production efficiency should pinpoint specific business problems, such as analyzing data related to plant maintenance and servicing documented by engineers. This approach ensures that generative AI is applied purposefully to solve real business issues, rather than being driven solely by technological trends.

“These are the three principles that we recommend our customers to follow, as they are implementing a generative AI and LLM strategy. If you look at our Cortex platform, it is built with this single-minded objective or single-minded focus that we want to democratize access to AI to pretty much everyone,” said Deshmukh.

Snowflake Cortex brings powerful AI and semantic search capabilities to the Snowflake platform.

A hypothetical scenario for AI

The approach to implementing generative AI begins with identifying a business problem and determining the necessary data to solve it. Deshmukh shared a hypothetical yet realistic example, focusing on unstructured documents, specifically service agreements signed by engineers and service professionals, which are not currently integrated into the analytical process. The initial step involves digitizing these physical papers into the data platform by scanning and loading them into the Snowflake system.

Next, Deshmukh pointed out, a large language model (LLM) comes into play to extract intelligence from the scanned documents. In 2022, Snowflake acquired Applica, whose purpose-built LLMs convert unstructured documents into structured content; this capability is known as Document AI. Running Document AI on PDF documents involves training the model with sample data and creating a pipeline to analyze failing parts, identify the responsible suppliers, and send the relevant information to the right person for resolution.

Document AI – a workflow to easily extract content from PDF documents using a built-in #LLM developed by Snowflake. pic.twitter.com/5z51p5Q4qP

— Snowflake (@SnowflakeDB) November 3, 2023

According to Deshmukh, this purpose-built model is not a foundational model; instead, it serves the specific task of converting unstructured documents into structured data, letting users pose queries in natural language. For instance, users can inquire about the status of service reports from the last three months, identify the number of failures, pinpoint the most problematic part (e.g., an injection molding machine), and inquire about the supplier of the faulty component. The LLM facilitates asking questions in English and extracting meaningful insights.
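Once the reports have been turned into structured records, the questions in this example reduce to ordinary filtering and counting. The Python sketch below shows that downstream step only; the `ServiceReport` fields, the sample data, and the 90-day reading of "the last three months" are assumptions made for illustration, and no Snowflake or Document AI API is called.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ServiceReport:
    """Hypothetical structured record extracted from one scanned report."""
    report_date: date
    failed_part: str
    supplier: str


def most_problematic_part(reports, today, window_days=90):
    """Summarize failures in the recent window and find the worst part."""
    cutoff = today - timedelta(days=window_days)
    recent = [r for r in reports if r.report_date >= cutoff]
    if not recent:
        return None
    counts = Counter(r.failed_part for r in recent)
    part, failures = counts.most_common(1)[0]
    suppliers = sorted({r.supplier for r in recent if r.failed_part == part})
    return {
        "total_failures": len(recent),
        "part": part,
        "part_failures": failures,
        "suppliers": suppliers,
    }


if __name__ == "__main__":
    sample = [
        ServiceReport(date(2023, 11, 1), "injection molding machine", "Supplier A"),
        ServiceReport(date(2023, 10, 15), "injection molding machine", "Supplier A"),
        ServiceReport(date(2023, 9, 20), "conveyor belt", "Supplier B"),
        ServiceReport(date(2023, 5, 1), "conveyor belt", "Supplier B"),
    ]
    print(most_problematic_part(sample, today=date(2023, 11, 30)))
```

In the scenario Deshmukh describes, the natural-language layer would translate a question in English into a query of this kind rather than requiring the user to write it.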

For Deshmukh, this example emphasizes the importance of starting with a business problem, determining the required data, and selecting an appropriate model. It contrasts with a common pitfall where businesses choose models before identifying the specific issues they aim to solve.

The initial step in building the AI foundation involves consolidating data in one place, creating a single source of truth. (Image – Shutterstock).

Snowflake aims to democratize access to AI

While generative AI is currently generating hype, AI has been around for several years, initially dominated by a few companies with data scientists and machine learning experts. However, the landscape has since changed significantly, with AI becoming more commoditized due to the availability of open-source language models. While companies can leverage these models, Deshmukh pointed out that the challenge lies in the need for skilled individuals who understand and can effectively work with large language models.

Recognizing that many companies, particularly SMEs, lack the resources to hire data scientists, Snowflake has built its AI approach to close this gap. For customers without that proficiency, the company has onboarded LLMs such as Meta’s Llama 2. These pre-trained models are exposed as functions, so people without data science expertise can call them from short Python scripts or use them to build AI applications. SMEs can thus harness AI without specialized skills in handling large language models.

In another hypothetical scenario that closely resembles reality, Deshmukh considered a B2C company with a customer service focus. The customer service leader, tasked with understanding customer complaints and challenges, can utilize the Snowflake platform. The company can gain insights into customer concerns by loading call transcripts into the system and using a function called ‘summarize,’ which uses an LLM hosted in Snowflake. For example, it might reveal that 30% of customers complain about network issues, while 40% express concerns about other issues.
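To make the arithmetic behind figures like these concrete, the sketch below tallies complaint topics across a batch of transcripts and reports each topic’s share. It is a sketch under stated assumptions: the keyword lookup in `classify` is a crude, hypothetical stand-in for the LLM step, the topic names and sample transcripts are invented, and no Snowflake function is called.

```python
from collections import Counter

# Hypothetical keyword map standing in for an LLM-based categorization step.
TOPIC_KEYWORDS = {
    "network": ("network", "signal", "coverage"),
    "billing": ("bill", "charge", "refund"),
}


def classify(transcript: str) -> str:
    """Assign a transcript to the first topic whose keyword it mentions."""
    text = transcript.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return topic
    return "other"


def complaint_share(transcripts) -> dict:
    """Return the percentage of transcripts that fall under each topic."""
    total = len(transcripts)
    if total == 0:
        return {}
    counts = Counter(classify(t) for t in transcripts)
    return {topic: round(100 * n / total, 1) for topic, n in counts.items()}


if __name__ == "__main__":
    calls = [
        "My network drops every evening",
        "No signal at home",
        "Coverage is poor in my area",
        "I was charged twice",
        "My bill is wrong",
        "Where is my refund",
        "Unexpected charge on my account",
        "The app keeps crashing",
        "I want to change my plan",
        "Delivery of my SIM card was late",
    ]
    print(complaint_share(calls))
```

The value of a hosted LLM in this scenario is that it replaces the brittle keyword map: the customer service leader gets the same kind of breakdown without anyone maintaining rules by hand.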

Deshmukh stated that the key advantage here is that enterprises lacking data scientists or individuals with LLM proficiency can simplify their processes using Snowflake. Those familiar with Python and SQL can easily use these skills to access and analyze data.

Snowflake’s goal is to cater to a spectrum of users. Those with LLM proficiency can bring their own language models and build applications; those with SQL and Python skills can call functions; and those with limited expertise can use a copilot feature, powered by an LLM, to ask questions in English and receive relevant insights. The platform thus aims to accommodate users at every proficiency level, making AI applications more accessible and user-friendly.

“That’s our goal, to democratize the access to generative AI, to a broad set of companies and not let it be limited to a handful of large companies who have the skills. That’s the approach that we’re taking with Cortex,” he concluded.