Google Gemini to give ChatGPT a run for its money

- Google launches Gemini, its most capable and general model yet.

- The first version, Gemini 1.0, is optimized for three different sizes.

- Gemini can understand, explain, and generate high-quality code in the world’s most popular programming languages.

The generative AI hype is still going strong, and Google is making sure it remains a crucial player in the industry. While OpenAI and Microsoft have dominated most of this year's AI headlines, Google has been pursuing its own innovations, planning and testing products that could give OpenAI a run for its money.

At the Google I/O conference earlier this year, the company previewed Gemini, a next-generation foundation model designed to be highly efficient at enabling future innovations. Despite reports that Google was planning to delay Gemini's launch until next year, the company has kept its word and announced the availability of the new model.

“Nearly eight years into our journey as an AI-first company, the pace of progress is only accelerating. Millions of people are now using generative AI across our products to do things they couldn’t even a year ago, from finding answers to more complex questions to using new tools to collaborate and create. At the same time, developers are using our models and infrastructure to build new generative AI applications, and startups and enterprises worldwide are growing with our AI tools,” said Google and Alphabet CEO Sundar Pichai.

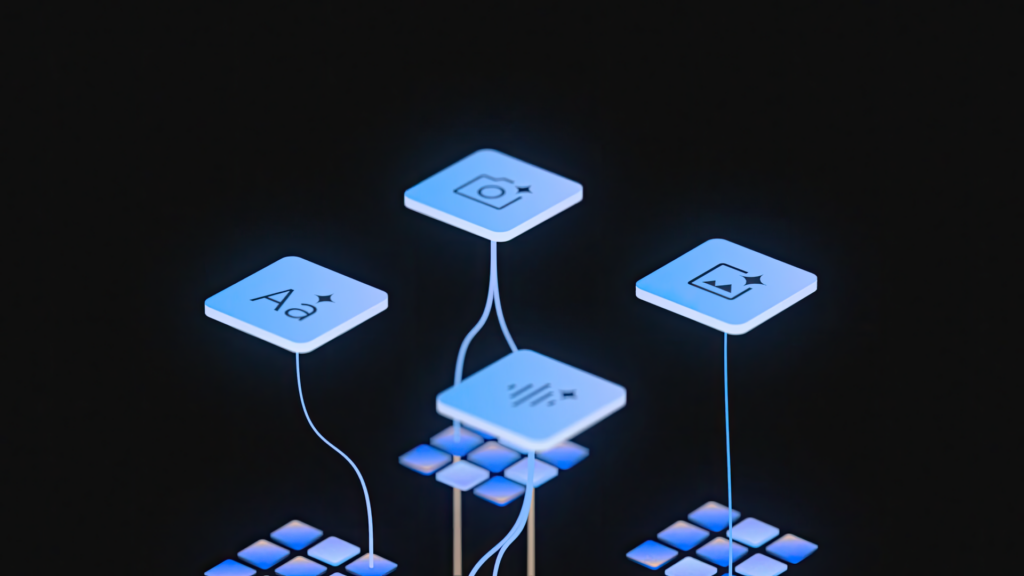

Gemini is Google’s most capable and general model yet, with state-of-the-art performance across many leading benchmarks. According to Demis Hassabis, CEO of Google DeepMind, Gemini was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across, and combine different types of information, including text, code, audio, image, and video.

Google Gemini could be a game changer in AI.

“Gemini is also our most flexible model yet — able to run on everything efficiently, from data centers to mobile devices. Its state-of-the-art capabilities will significantly enhance how developers and enterprise customers build and scale with AI,” said Hassabis.

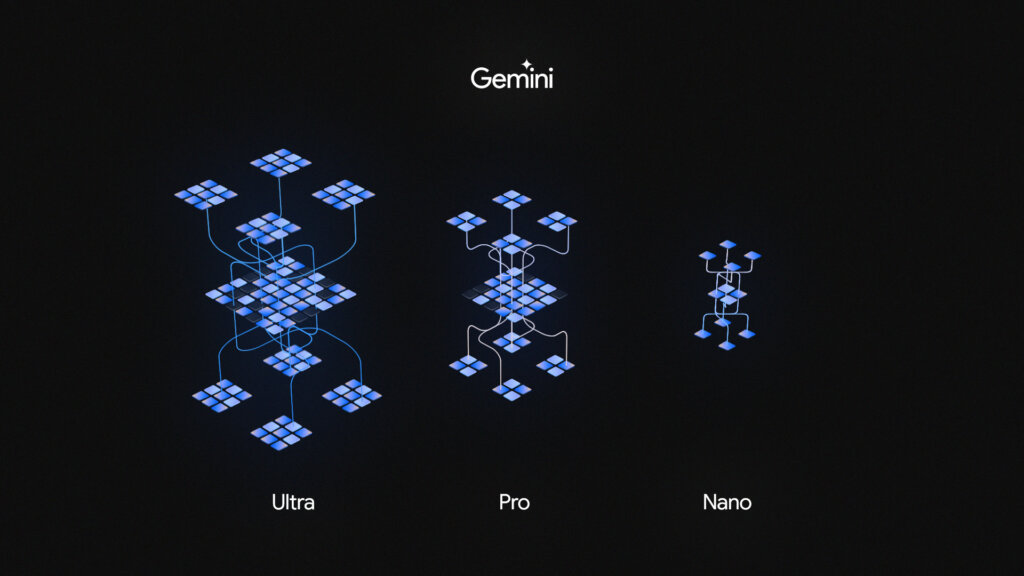

The first version, Gemini 1.0, is optimized for three different sizes:

- Gemini Ultra is the largest and most capable model for highly complex tasks.

- Gemini Pro is the best model for scaling across various tasks.

- Gemini Nano is the most efficient model for on-device tasks.

The first version, Gemini 1.0, is optimized for three different sizes. (Source – Google)

Taking a deeper look into Google Gemini Ultra

Gemini Ultra has been designed to be natively multimodal, pre-trained from the start on different modalities. It was then fine-tuned with additional multimodal data to further refine its effectiveness. According to Google, this lets Gemini understand and reason about all kinds of inputs from the ground up far better than existing multimodal models.

In fact, Gemini Ultra's performance exceeds current state-of-the-art results on 30 of the 32 widely used academic benchmarks in large language model (LLM) research and development. It is also the first model to outperform human experts on MMLU (massive multitask language understanding), which uses a combination of 57 subjects such as math, physics, history, law, medicine, and ethics to test world knowledge and problem-solving abilities.

In terms of use cases, Gemini Ultra can make sense of complex written and visual information. For example, a user can scribble a picture, and the tool can recognize it and provide feedback on the image. This makes Gemini uniquely skilled at uncovering knowledge that can be difficult to discern amid vast amounts of data.

During a media briefing, Google showcased the tool answering questions on complicated topics. For example, it can solve math problems and explain how it arrived at the answer. This is possible because Gemini is trained to recognize and understand text, images, audio, and more simultaneously, which helps it grasp nuanced information.

Another innovative capability of Gemini is its ability to understand, explain, and generate high-quality code in the world’s most popular programming languages. Gemini can work across languages like Python, Java, C++, and Go, and its ability to reason about complex information makes it one of the world’s leading foundation models for coding.

According to Hassabis, Gemini Ultra excels in several coding benchmarks, including HumanEval, an important industry standard for evaluating performance on coding tasks, and Natural2Code, Google's internal held-out dataset, which uses author-generated sources instead of web-based information. Gemini can also be used as the engine for more advanced coding systems.

With a score of 90.0%, Gemini Ultra is the first model to outperform human experts on MMLU. (Source – Google).

Gemini Pro and Gemini Nano

Gemini is expected to debut in Bard in two phases, starting with Gemini Pro. Gemini Pro in Bard, available in English, will answer users' queries with more advanced reasoning, planning, and understanding.

“We’ve specifically tuned Gemini Pro in Bard to be far more capable of understanding, summarizing, reasoning, coding, and planning. And we’re seeing great results: in blind evaluations with our third-party raters, Bard is now the most preferred free chatbot compared to leading alternatives,” commented Sissie Hsiao, VP and GM of Google Assistant and Bard.

Like OpenAI's ChatGPT, Gemini Pro in Bard aims to give users a better way of finding answers to their queries. In one highlighted example, Google teamed up with YouTuber and educator Mark Rober to put Bard with Gemini Pro to the ultimate test: crafting the most accurate paper airplane.

In the video, Bard suggests how to build the best paper airplane, including which materials to use and where to test it. With Gemini Pro, Bard can also recognize the airplane design and point out areas for improvement.

Meanwhile, Gemini Nano could reshape the mobile phone industry. As anticipated, it will run on Google's Pixel 8 Pro, the first smartphone engineered for Gemini Nano. The phone uses the power of Google Tensor G3 to deliver two expanded features: Summarize in Recorder and Smart Reply in Gboard.

“Gemini Nano running on the Pixel 8 Pro offers several advantages by design, helping prevent sensitive data from leaving the phone and offering the ability to use features without a network connection. In addition to Gemini Nano now running on-device, the broader family of Gemini models will unlock new capabilities for the Assistant with Bard experience early next year on Pixel,” said Brian Rakowski, VP of product management at Google Pixel.

For Android developers, there is AICore, a new system service in Android 14 that provides easy access to Gemini Nano. AICore handles model management, runtimes, safety features, and more, simplifying the work of incorporating AI into apps.

“AICore is private by design, following the example of Android’s Private Compute Core with isolation from the network via open source APIs, providing transparency and auditability. As part of our efforts to build and deploy AI responsibly, we also built dedicated safety features to make it safer and more inclusive for everyone,” explained Dave Burke, VP of engineering at Google.

Gemini Ultra has been designed to be natively multimodal, pre-trained from the start on different modalities. (Source – Google).

Google Gemini is built with responsible AI at its core

As with any innovation, there will be concerns about how much the technology will affect society. Given growing regulatory and privacy concerns, especially around generative AI, Google says it addressed these issues while developing the technology, guided by the company's AI principles.

Because Gemini can recognize movie scenes, famous people, and products, Google has stated that the model was trained on data that is publicly available on the web. Google will also add new protections to account for Gemini's multimodal capabilities.

“Gemini has the most comprehensive safety evaluations of any Google AI model to date, including for bias and toxicity. We’ve conducted novel research into potential risk areas like cyber-offense, persuasion, and autonomy. We have applied Google Research’s best-in-class adversarial testing techniques to help identify critical safety issues before Gemini’s deployment,” said Hassabis.

Hassabis also pointed out that, to identify blind spots in Google's internal evaluation approach, the Gemini teams are working with a diverse group of external experts and partners to stress-test their models across various issues.

To diagnose content safety issues during Gemini's training phases and ensure its output follows policies, Google uses benchmarks such as Real Toxicity Prompts, a set of 100,000 prompts with varying degrees of toxicity pulled from the web, developed by experts at the Allen Institute for AI.
