
Researchers teach AI to explain itself

ALONGSIDE the hype and excitement surrounding the fast-developing applications of artificial intelligence (AI), there is a fear about how this tech actually works.

A recent MIT Technology Review article titled “The Dark Secret at the Heart of AI” warned: “No one really knows how the most advanced algorithms do what they do. That could be a problem”.

This uncertainty and lack of accountability surrounding AI have led many to question the uses of the technology. A report by the AI Now Institute has even recommended that public agencies responsible for criminal justice, health care, welfare, and education should avoid using AI technology.

The unseeable space between where data goes in and answers come out is often referred to as a “black box”, and is what is keeping us humans from trusting AI systems.

But now, a team of international researchers is on the way to making the “black box” transparent, having recently taught AI to justify its reasoning and point to evidence when making a decision.

The team comprises researchers from UC Berkeley, the University of Amsterdam, MPI for Informatics, and Facebook AI Research. The recently published research builds on the group’s previous work, but this time around they’ve taught the AI some new tricks.

Much like humans, it can “point” to the evidence it used to answer a question as well as describe how it interpreted that evidence through text. It has been developed to answer questions that require the typical intellect of a nine-year-old child.

According to the group’s recently published whitepaper, this marks the first time anyone has created a system that can explain itself in two different ways:

“Our model is the first to be capable of providing natural language justifications of decisions as well as pointing to the evidence in an image.”

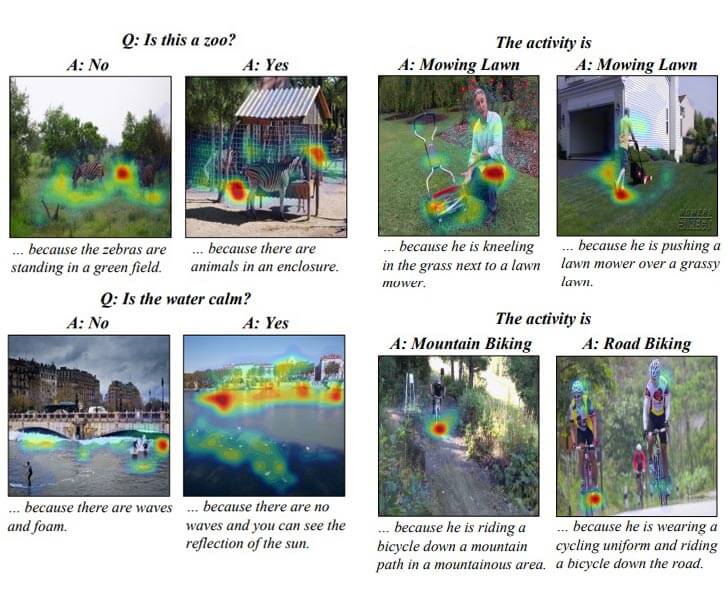

In the examples shown in the whitepaper, the researchers show that the AI can answer questions about objects as well as their actions. It can also explain its answers by describing what it saw in the images and highlighting the relevant parts.

Source: ArXiv

It should be noted that the AI did not get everything right. For instance, it got confused when determining whether a person was smiling or not, and it had difficulty telling the difference between a person painting a room and someone using a vacuum cleaner.

While it may have made some mistakes, exposing them was part of the point of the research: understanding what the AI sees and why it makes its decisions.

As the neural networks of AI advance and become our primary source of data analysis, it is important for researchers to discover methods to debug, error-check, and understand the decision-making process of the technology.

Creating a way for AI to show its work and explain itself is a massive step towards better understanding how this “much-feared” piece of technology works.
