(Source – Shutterstock)

Deepfakes get harder to detect

- Generative AI makes deepfake detection harder.

- Study shows consumers are aware of deepfakes and are concerned about identity theft.

Deepfake detection continues to be a problem all over the world. Deepfakes manipulate media to create misleading representations of politicians, celebrities and ordinary people. Now, generative AI is enabling deepfakes to wreak more havoc.

Recently, an AI-generated photo of a fake explosion at the Pentagon caused widespread confusion online and even led to a brief dip in the US stock market. According to a Bloomberg report, the fake photo, which first appeared on Facebook, showed a large plume of smoke that a Facebook user claimed was near the US military headquarters in Virginia. It soon spread on Twitter accounts that reach millions of followers, including the Russian state-controlled news network RT and the financial news site ZeroHedge, a participant in the social-media company’s new Twitter Blue verification system.

The Pentagon deepfake image is just one of many examples of deepfakes misleading users. But the bigger concern is the fraud and scams that undetected deepfakes make possible. For instance, cybercriminals can create deepfake audio or video impersonations to deceive individuals into making fund transfers or revealing sensitive information.

Confident that this picture claiming to show an “explosion near the pentagon” is AI generated.

Check out the frontage of the building, and the way the fence melds into the crowd barriers. There’s also no other images, videos or people posting as first hand witnesses. pic.twitter.com/t1YKQabuNL

— Nick Waters (@N_Waters89) May 22, 2023

There are also concerns about how deepfakes infringe on privacy by manipulating images and videos of individuals without their consent. As more users share personal images and videos on social media, that material can be used to create explicit or compromising content, causing harm to reputations, relationships, or personal lives.

Thanks to generative AI, it is becoming increasingly challenging to distinguish between deepfake and authentic content, which can undermine public trust in the veracity of information and evidence.

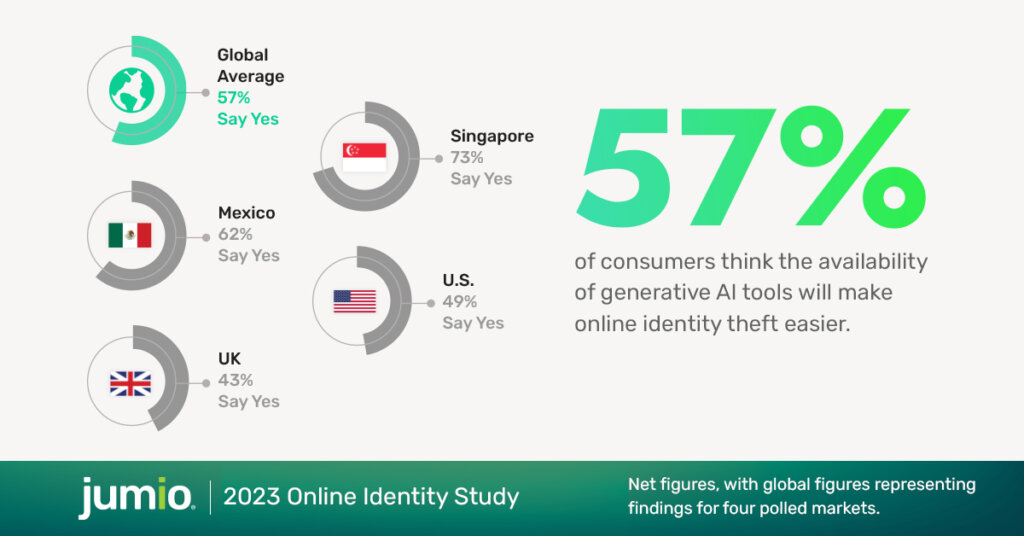

According to Jumio’s 2023 Online Identity Study, a survey of 8,055 adult consumers split evenly across the United Kingdom, United States, Singapore and Mexico, awareness of generative AI and deepfakes among consumers is high, with 52% of respondents believing they could detect a deepfake video. This sentiment reflects overconfidence on the part of consumers, given that deepfakes have reached a level of sophistication that prevents detection by the naked eye.

Over two-thirds (67%) claim to be aware of generative AI tools, such as ChatGPT, DALL-E and Lensa AI, which can produce fabricated content, including videos, images and audio. Awareness was highest among consumers in Singapore (87%) and lowest among those in the UK (56%).

“A lot of people seem to think they can spot a deepfake. While there are certain tell-tale signs to look for, deepfakes are getting exponentially better all the time and are becoming increasingly difficult to detect without the aid of AI,” said Stuart Wells, Jumio’s chief technology officer.

While businesses will increasingly need AI-powered technology to spot deepfakes and protect their networks and customers from them, Wells believes that consumers can protect themselves by treating provocative images, videos and audio with skepticism.

“Some quick research will usually uncover whether it’s a fake or not.”

To test your ability to tell authentic content from fake, try out this challenge by the Massachusetts Institute of Technology.

Deepfakes are fueling identity theft

Identity theft can lead to bigger problems for organizations. Not only can companies lose funds, but cybercriminals can also use deepfakes built on stolen identities to launch smear campaigns or make statements that could destroy a company’s reputation.

In fact, Jumio’s survey indicates that as consumers become more aware of deepfakes, there is also an emerging understanding of how they could be used to fuel identity theft. For example, 73% of consumers in Singapore believe that identity theft will be a big problem compared to just 42% of consumers in the UK.

Despite concerns about identity theft and deepfakes, the survey also found that over two-thirds (68%) of consumers are open to using a digital identity to verify themselves online. The top sectors where they would prefer a digital identity over a physical ID (like a driver’s license or passport) are financial services (43%), government (38%) and healthcare (35%).

(Source – Jumio)

For Philipp Pointner, Jumio’s chief of digital identity, organizations have a duty to educate their customers on the nuances of generative AI technologies to help them be more realistic about their ability to detect deepfakes.

“At the same time, even the best education will never be able to completely stop a fraudster’s use of evolving technologies. Online organizations must look to implement multimodal, biometric-based verification systems that can detect deepfakes and prevent stolen personal information from being used. Encouragingly, our research indicated strong consumer appetite for this form of identity verification, which businesses should act on fast,” added Pointner.

With tech companies developing more ways to use generative AI, deepfakes are only going to become more menacing and harder to detect. Organizations will need to take a multifaceted approach to the problem, one that combines technological advancements, policy and legal frameworks, media literacy and public awareness.

While tools are being developed to detect and combat deepfakes, it may be a while before this problem is solved. Until then, consumers and businesses will need to be vigilant, especially in verifying the authenticity of the media content they encounter.
