The impact of AI-generated deepfakes on biometric authentication. (Generated with AI)

Are face biometrics enough to stop AI-generated deepfakes?

- By 2026, 30% of enterprises may distrust facial biometric systems due to AI-generated deepfakes.

- Deepfakes exploit static biometric data and can convincingly replicate human voices.

- Deepfakes not only threaten security systems but also have the potential to influence elections and public opinion.

The advent of AI-generated deepfakes targeting facial biometric systems presents a formidable challenge to the integrity of identity verification and authentication solutions. Gartner, Inc.’s projection that 30% of enterprises will question the reliability of these technologies by 2026 underscores the urgency of addressing this emerging threat.

The rise of AI-generated deepfakes

As AI advances, deepfakes (synthetic images that convincingly mimic real human faces) have become a sophisticated tool in cybercriminals’ arsenal. Attackers use them to exploit the static nature of the physical characteristics used for authentication, such as eye size, face shape, and fingerprints, to bypass security measures. Deepfakes can also replicate human voices with high accuracy, which adds another layer of complexity and can potentially circumvent voice recognition systems. This shifting landscape exposes a critical vulnerability in biometric security technologies and underscores the need for organizations to re-evaluate the efficacy of their current security protocols.

Akif Khan, a VP analyst at Gartner, notes that the last decade has seen pivotal advancements in AI technology, enabling the creation of synthetic images that closely resemble real human faces. These deepfakes present a new frontier in cyberthreats, with the potential to bypass biometric authentication systems by imitating the facial features of legitimate users.

The implications of these advancements are profound, as Khan explains. Organizations may soon question the integrity of their identity verification processes, unable to discern whether the individual attempting access is genuine or merely a sophisticated deepfake representation. This uncertainty poses a significant risk to the security protocols that many rely on.

Deepfakes introduce complex challenges to biometric security measures by exploiting static data—unchanging physical characteristics such as eye size, face shape, or fingerprints—that authentication devices use to recognize individuals. The static nature of these attributes makes them vulnerable to replication by deepfakes, allowing unauthorized access to sensitive systems and data.
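The weakness of static data is easier to see once you look at how a face matcher typically makes its decision. The sketch below is minimal and assumes the common design in which a face image is converted into a numeric embedding and then compared against an enrolled template using cosine similarity. Because the matcher sees only numbers, a faithful enough deepfake that yields a nearby embedding passes exactly as the genuine user would. The three-dimensional vectors, the 0.8 threshold, and the function names are all hypothetical. Real systems use learned embeddings with hundreds of dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def matches_template(probe: Sequence[float], template: Sequence[float],
                     threshold: float = 0.8) -> bool:
    """Hypothetical verifier: accept any probe whose embedding is close enough.

    Nothing here asks where the probe came from, which is the gap
    deepfakes exploit.
    """
    return cosine_similarity(probe, template) >= threshold


# Toy embeddings (hypothetical values, not output from a real model).
enrolled = [0.20, 0.90, 0.40]   # stored at enrollment; never changes
genuine = [0.21, 0.88, 0.41]    # live capture of the real user
deepfake = [0.20, 0.90, 0.39]   # synthetic face crafted to mimic the user
stranger = [0.90, -0.10, 0.00]  # unrelated face

print(matches_template(genuine, enrolled))   # True
print(matches_template(deepfake, enrolled))  # True
print(matches_template(stranger, enrolled))  # False
```

Since the enrolled template never changes, an attacker can keep refining a synthetic face until it clears the threshold. Closing that gap requires signals about the capture itself, not just the similarity score.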

Additionally, the technology underpinning deepfakes has evolved to replicate human voices with remarkable accuracy. By dissecting audio recordings of speech into smaller fragments, AI systems can recreate a person’s vocal characteristics, enabling deepfakes to convincingly mimic someone’s voice for use in scripted or impromptu dialogue.

Because a cloned voice can be stored and reused over the long term, cybercriminals can produce deepfakes that respond to verbal security prompts, effectively circumventing voice recognition-based security measures. The uneven effectiveness of biometric security systems against deepfakes highlights the pressing need for stronger protection mechanisms.

The role of multi-factor authentication and PAD

Facial recognition technologies, in particular, are susceptible to manipulation by deepfakes, which can fool systems into granting access when the artificial likeness matches stored data. This vulnerability underscores the critical importance of multi-factor authentication (MFA) as a defense against such threats, along with the need for ongoing advances in biometric security to keep pace with evolving cyberthreats.
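This minimal sketch shows why MFA helps. It assumes the second factor is a time-based one-time password (TOTP, as specified in RFC 6238). The code is derived from a shared secret and the current time, so a convincing face or voice deepfake cannot produce it on its own. The `mfa_passes` policy and its parameter names are hypothetical. Only the `totp` computation follows the RFC.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1 (the RFC's default hash)."""
    counter = int(for_time // step)  # number of time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def mfa_passes(face_match: bool, submitted_code: str, secret: bytes,
               now: Optional[float] = None) -> bool:
    """Hypothetical policy: a face match is necessary but never sufficient."""
    if now is None:
        now = time.time()
    # Constant-time comparison avoids leaking how many digits matched.
    code_ok = hmac.compare_digest(submitted_code, totp(secret, now))
    return face_match and code_ok
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` returns `"94287082"`, which matches the RFC's published test vector. A production deployment would typically also accept codes from adjacent time steps to tolerate clock drift.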

Current identity verification methods employing face biometrics rely on presentation attack detection (PAD) to determine the authenticity of the user. However, Khan points out that existing standards and evaluation methods for PAD do not account for the sophisticated digital injection attacks made possible by today’s AI-generated deepfakes.

According to Gartner’s research, presentation attacks remain the most common, but injection attacks increased by 200% in 2023, signaling a shift toward more sophisticated attack methods. Addressing these threats requires a comprehensive approach that combines PAD, injection attack detection (IAD), and image inspection techniques.
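The layered approach above can be sketched as a check that accepts a session only when every detector passes. This is a minimal sketch under stated assumptions: the `DetectorScores` fields, the [0, 1] score scale, the 0.5 threshold, and the function names are hypothetical stand-ins. Real PAD, IAD, and image-inspection products each expose their own outputs.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DetectorScores:
    """Hypothetical per-check scores in [0, 1]; higher means more likely genuine."""
    pad: float         # presentation attack detection: photo, screen, or mask held to the camera
    iad: float         # injection attack detection: virtual camera or tampered video stream
    inspection: float  # image inspection: artifacts typical of synthetic imagery


def layered_check(scores: DetectorScores, threshold: float = 0.5) -> Tuple[bool, List[str]]:
    """Accept only if every layer passes; report which layers failed."""
    checks = [("pad", scores.pad), ("iad", scores.iad), ("inspection", scores.inspection)]
    failed = [name for name, value in checks if value < threshold]
    return (not failed, failed)


# A deepfake injected straight into the video feed never passes in front of
# the camera, so PAD alone may rate it highly; the other layers can still catch it.
injected = DetectorScores(pad=0.95, iad=0.10, inspection=0.40)
print(layered_check(injected))  # (False, ['iad', 'inspection'])

genuine = DetectorScores(pad=0.90, iad=0.85, inspection=0.80)
print(layered_check(genuine))   # (True, [])
```

Requiring every layer to pass trades some convenience for coverage. A product might instead weight the scores, but any single-detector policy leaves the other attack class unaddressed.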

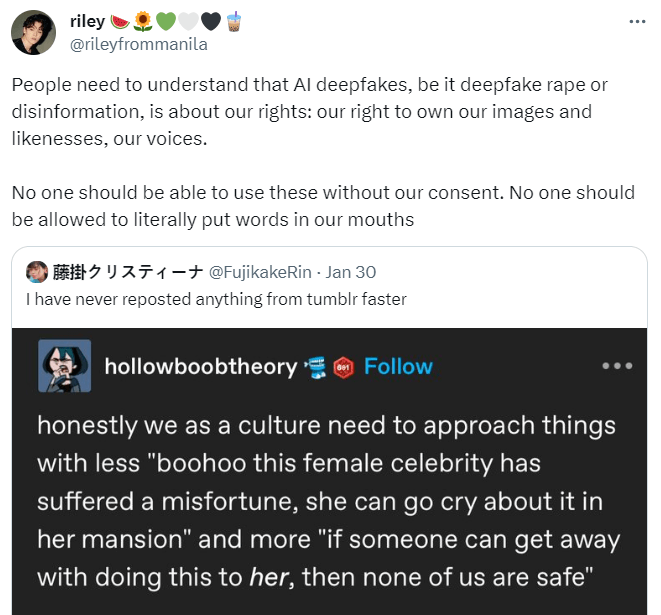

AI-generated deepfakes in action. (Source – X).

Elections in the age of AI

The influence of deepfakes extends beyond security concerns. Especially during election periods, they stir controversy because of their potential to sway public opinion. In Indonesia, ahead of its general election, there has been a surge in activity by buzzers: individuals paid to disseminate large quantities of online content to influence the electorate. Amid this digital cacophony, Nature reported that researchers such as Ika Idris of Monash University’s Jakarta campus are closely monitoring the spread of hate speech and misinformation, including deepfake videos that could falsely imply candidates’ political alliances or stances.

However, research into the impact of deepfakes and misinformation is hampered by restrictions that social media platforms place on data access, which makes it harder for researchers like Idris to analyze these trends. Similarly, as the United States approaches its presidential election, researchers such as Kate Starbird and her team at the University of Washington are examining the spread of rumors on social media, noting a reduction in collaborative efforts compared with the previous election cycle.

The year 2024 marks a critical juncture. A substantial portion of the world’s population is taking part in elections just as the reach of social media expands and generative AI tools capable of producing deepfakes become increasingly accessible. Joshua Tucker of New York University emphasizes the challenges researchers face in assessing these technologies’ impact on electoral processes, as well as the inventive strategies they are using to overcome these obstacles.

How can organizations protect themselves from AI-generated deepfakes?

To bolster defenses against the threats posed by AI-generated deepfakes, organizations are urged to collaborate with vendors that demonstrate a commitment to advancing beyond current security standards, focusing on the detection, classification, and quantification of these emerging threats. Khan advises that organizations establish a foundational set of controls by partnering with vendors to combat the latest deepfake-related security challenges through IAD and image inspection.

CISOs and risk management leaders are encouraged to incorporate additional layers of security, including device identification and behavioral analytics, to enhance the detection capabilities of their identity verification systems. Above all, it is imperative for those responsible for identity and access management to adopt technologies and strategies that ensure the verification of genuine human presence, thereby mitigating the risks associated with account takeover.
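The additional layers recommended above can be sketched as a simple risk policy. Even after a face check passes, an unfamiliar device or unusual session behavior can trigger a step-up challenge or a denial. This is a minimal sketch under assumptions: the `Decision` values, the device and behavior signals, and every weight and cut-off are hypothetical. Commercial identity verification products supply their own signals and scoring.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # ask for an additional factor before granting access
    DENY = "deny"


def decide(biometric_passed: bool, known_device: bool, behavior_anomaly: float) -> Decision:
    """Hypothetical risk policy that layers context on top of the biometric result.

    behavior_anomaly is assumed to lie in [0, 1], where higher means the
    session's typing, navigation, or timing looks less like the account owner.
    The weights and cut-offs are illustrative, not recommended values.
    """
    if not biometric_passed:
        return Decision.DENY
    risk = 0.0
    if not known_device:
        risk += 0.4                 # unfamiliar device identifier
    risk += 0.6 * behavior_anomaly  # weighted behavioral-analytics signal
    if risk < 0.3:
        return Decision.ALLOW
    if risk < 0.7:
        return Decision.STEP_UP
    return Decision.DENY


print(decide(True, True, 0.1))   # Decision.ALLOW
print(decide(True, False, 0.2))  # Decision.STEP_UP
print(decide(True, False, 0.9))  # Decision.DENY
```

The biometric result acts as a gate rather than a score, so a deepfake that fools the face matcher still has to look like the account owner's usual device and behavior.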
