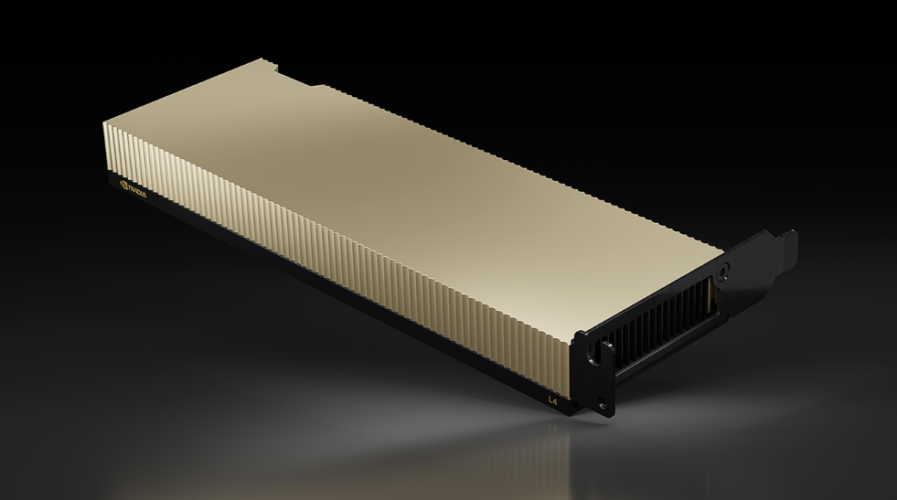

NVIDIA L4 – (Source – NVIDIA)

NVIDIA brings generative AI to almost everything, everywhere, all at once

- NVIDIA has launched four inference platforms, each optimized for a different set of emerging generative AI applications.

- Google Cloud is adopting the new platforms for a broad spectrum of generative AI services, such as chatbots, text-to-image content, AI video, and more.

Generative AI has transformed many industries, driven by advances in machine learning, computational power, and data availability. Leading companies like Microsoft and Google have built generative AI into their products. Microsoft has integrated it into Bing, the Edge mobile apps, and Skype.

Meanwhile, Google’s recent generative AI features appear in services such as Google Cloud, MakerSuite, and Google Workspace, giving developers and businesses state-of-the-art AI-driven tools. Now NVIDIA has laid out its own generative AI initiatives.

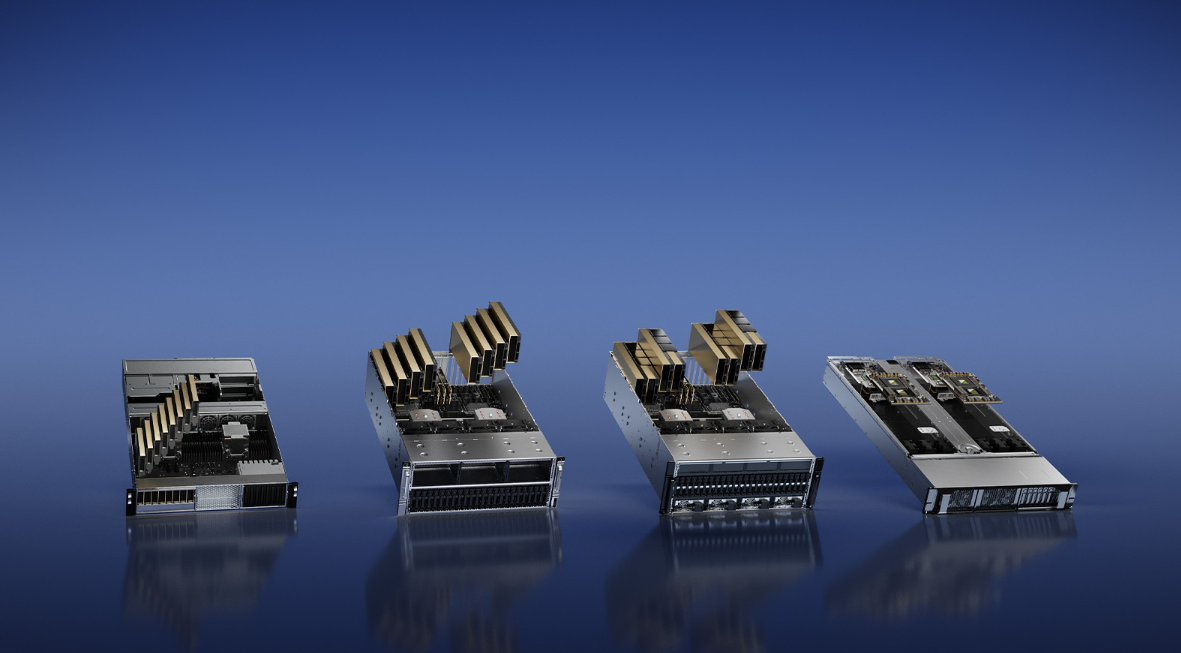

As generative AI expands, its potential applications and its influence across many fields are growing rapidly. At GTC, NVIDIA introduced four inference platforms tailored to a range of emerging generative AI applications, enabling developers to quickly build specialized AI-powered applications that deliver new services and insights.

These platforms combine NVIDIA’s full inference software stack with its latest NVIDIA Ada, Hopper, and Grace Hopper processors, including the NVIDIA L4 Tensor Core GPU and the NVIDIA H100 NVL GPU. Each platform is optimized for one demanding workload: AI video processing, image generation, large language model deployment, or recommender inference.

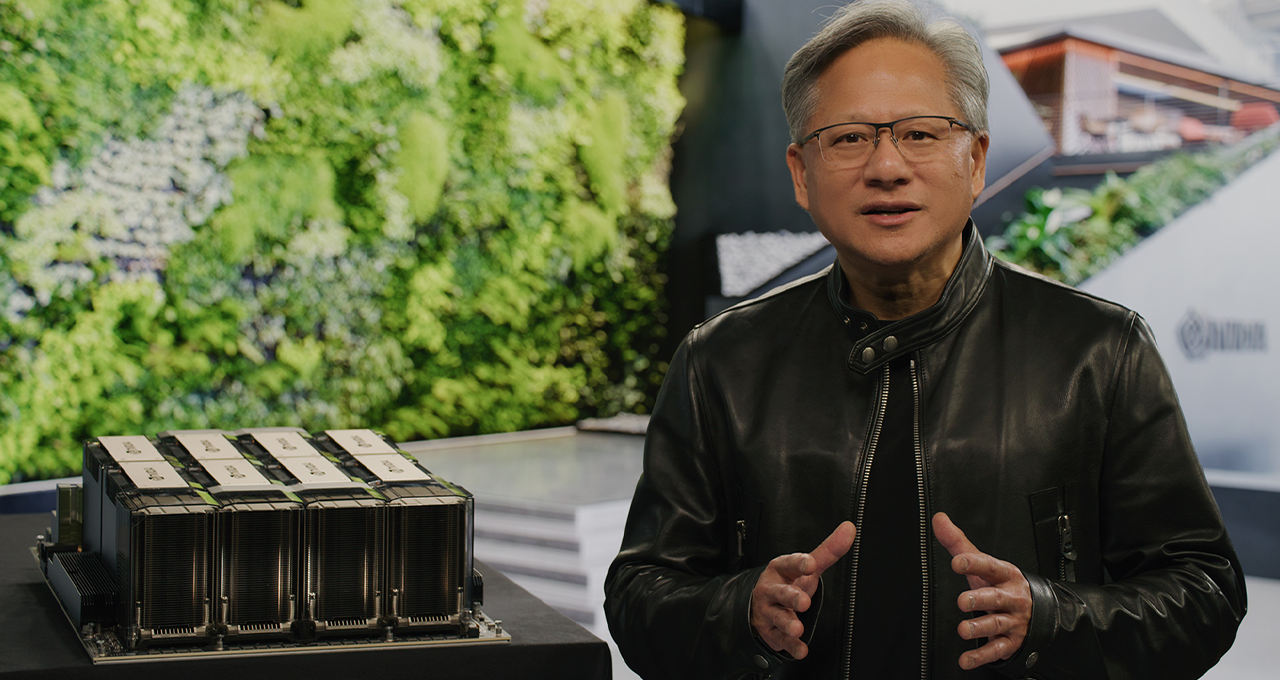

Jensen Huang, founder and CEO of NVIDIA, said the growing prominence of generative AI calls for more powerful inference computing platforms. “The number of applications for generative AI is infinite, limited only by human imagination. Arming developers with the most powerful and flexible inference computing platform will accelerate the creation of new services that will improve our lives in ways not yet imaginable,” he said.

NVIDIA founder and CEO Jensen Huang with the NVIDIA DGX H100 during his GTC keynote.

Accelerating generative AI’s diverse set of inference workloads

Each platform pairs an NVIDIA GPU optimized for a specific generative AI inference workload with specialized software. The first, NVIDIA L4 for AI Video, stands out for its compact form factor, as Ian Buck, VP of Hyperscale and HPC at NVIDIA, highlighted. This small, low-profile, single-slot GPU fits into any server and excels at AI video processing, running 120 times faster than conventional CPU servers. That translates into roughly 1/120th of the hardware for the same workload and 99% less energy consumption.

The versatile NVIDIA L4 GPU suits various workloads, such as enhanced video decoding and transcoding, video streaming, augmented reality, and generative AI video.

The second platform, NVIDIA L40 for Image Generation, is optimized for graphics and AI-enabled 2D, video, and 3D image generation. It powers NVIDIA Omniverse, a platform for building and operating metaverse applications in data centers, and delivers 7x the Stable Diffusion inference performance and 12x the Omniverse performance of its predecessor.

The third platform, NVIDIA H100 NVL for Large Language Model Deployment, is built for deploying massive LLMs like ChatGPT at scale. With 94GB of memory and Transformer Engine acceleration, the H100 NVL delivers up to 12x faster GPT-3 inference than the previous-generation A100 at data center scale.

“This amazing GPU will considerably lower the total cost of ownership (TCO) for running and performing inference on GPT-based and large language models. It will play a crucial role in democratizing GPT and large language models for widespread use,” said Buck.

Accelerating generative AI collaboration

Google Cloud, a key cloud partner and early customer of NVIDIA’s inference platforms, is integrating the L4 platform into Vertex AI, its machine learning platform. It is the first cloud service provider to offer L4 instances, through a private preview of its G2 virtual machines.

NVIDIA and Google separately revealed that Descript and WOMBO are among the first organizations to gain early access to L4 on Google Cloud. Descript uses generative AI to help creators produce videos and podcasts, while WOMBO offers Dream, an AI-powered app that turns text into digital art.

NVIDIA has also partnered with Microsoft to give enterprise users access to industrial metaverse and AI supercomputing resources through Microsoft Azure. The collaboration includes NVIDIA Omniverse Cloud and NVIDIA DGX Cloud, and connects Microsoft 365 applications with NVIDIA Omniverse.

NVIDIA’s four inference platforms optimized for a diverse set of rapidly emerging generative AI applications, left to right: L4 for AI video, L40 for image generation, H100 NVL for LLMs and Grace Hopper for recommendation models.

These partnerships are meant to speed up enterprises’ digitalization, their adoption of the industrial metaverse, and the training of advanced models for generative AI and other applications.

“The world’s largest companies are racing to digitalize every aspect of their business and reinvent themselves as software-defined technology companies,” said Jensen Huang, founder and CEO of NVIDIA. “NVIDIA AI and Omniverse supercharge industrial digitalization. Building NVIDIA Omniverse Cloud within Microsoft Azure brings customers the best of our combined capabilities.”

Bringing generative AI to the world’s enterprises with cloud services

NVIDIA is introducing NVIDIA AI Foundations, a set of cloud services that let businesses build, refine, and operate custom large language models and generative AI models trained on their proprietary data for domain-specific tasks. Companies including Adobe, Getty Images, Morningstar, Quantiphi, and Shutterstock will use these model-making services, which span language, images, video, and 3D.

Enterprises can build customized, domain-specific generative AI applications using the NVIDIA NeMo language service and the NVIDIA Picasso image, video, and 3D service. NVIDIA has also unveiled new models for NVIDIA BioNeMo, its cloud service for biology.

The NeMo and Picasso services are accessible through a browser on NVIDIA DGX Cloud and offer pre-trained models, data processing frameworks, vector databases, optimized inference engines, APIs, and support from NVIDIA experts. NeMo lets developers tailor large language models to business needs, while Picasso streamlines simulation and creative design across image, video, and 3D.

NVIDIA and Adobe are expanding their R&D partnership to co-develop next-generation generative AI models with a focus on transparency and Content Credentials. Some of these models will be incorporated into Adobe Creative Cloud products and NVIDIA Picasso.

NVIDIA is also collaborating with Getty Images to build responsible text-to-image and text-to-video foundation models using fully licensed assets. In addition, it is partnering with Shutterstock on a generative text-to-3D foundation model, built with the NVIDIA Picasso service, to simplify 3D model creation. Shutterstock will make the model available on its platform, speeding up 3D asset creation and the development of industrial digital twins and 3D virtual worlds in NVIDIA Omniverse.
