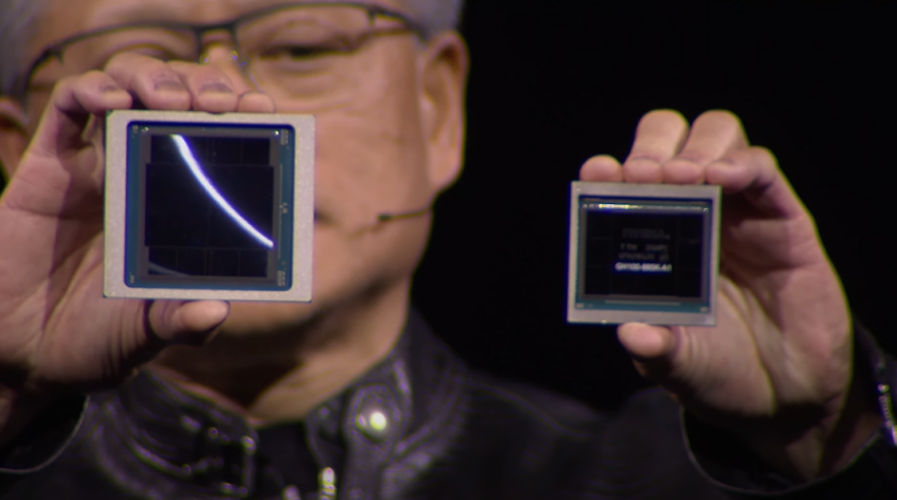

NVIDIA CEO Jensen Huang presents his GTC keynote (Source – YouTube)

Nvidia GTC 2024 showcases the ‘world’s most powerful chip’ for AI and more!

- The Nvidia GTC 2024 reveals the ‘world’s most powerful chip’ for AI, set to transform AI model accessibility and efficiency.

- Nvidia also announced significant partnerships and software tools.

- Nvidia also deepened its foray into the automotive industry, partnering with leading Chinese electric vehicle makers.

This year’s Nvidia GTC is packed with noteworthy revelations, including the introduction of the Blackwell B200 GPU. Branded as the ‘world’s most powerful chip’ for AI, it is designed to make AI models with trillions of parameters accessible to a wider audience.

Jensen Huang, the CEO of Nvidia, inaugurated the company’s annual developer conference with a series of strategic announcements aimed at solidifying Nvidia’s supremacy in the AI sector.

Revolutionizing AI with the new Nvidia chip

Nvidia introduced the B200 GPU, which packs 208 billion transistors and delivers up to 20 petaflops of FP4 computing power. Additionally, Nvidia unveiled the GB200, which combines two B200 GPUs with a single Grace CPU. Nvidia claims it can improve LLM inference performance by 30 times while cutting cost and energy consumption by as much as 25 times compared to the H100.

Previously, training a model with 1.8 trillion parameters required 8,000 Hopper GPUs and 15 megawatts of power. Now, Nvidia asserts that only 2,000 Blackwell GPUs are needed to achieve the same feat, reducing power consumption to just four megawatts.
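The reduction implied by those figures can be checked with simple arithmetic. The following is a minimal Python sketch that uses only the numbers quoted above. The function and variable names are illustrative, and the per-GPU figure is a naive average (total quoted power divided by GPU count), not an Nvidia specification.

```python
# Figures as quoted by Nvidia for training a 1.8-trillion-parameter model.
HOPPER_GPUS = 8_000
HOPPER_MEGAWATTS = 15.0
BLACKWELL_GPUS = 2_000
BLACKWELL_MEGAWATTS = 4.0


def reduction_factor(before: float, after: float) -> float:
    """Return how many times smaller `after` is than `before`."""
    return before / after


def kilowatts_per_gpu(megawatts: float, gpus: int) -> float:
    """Naive average: total quoted power divided by GPU count."""
    return megawatts * 1_000 / gpus


gpu_reduction = reduction_factor(HOPPER_GPUS, BLACKWELL_GPUS)
power_reduction = reduction_factor(HOPPER_MEGAWATTS, BLACKWELL_MEGAWATTS)
hopper_kw = kilowatts_per_gpu(HOPPER_MEGAWATTS, HOPPER_GPUS)
blackwell_kw = kilowatts_per_gpu(BLACKWELL_MEGAWATTS, BLACKWELL_GPUS)

print(f"GPU count reduction:  {gpu_reduction:.2f}x")
print(f"Power reduction:      {power_reduction:.2f}x")
print(f"Hopper kW per GPU:    {hopper_kw:.3f}")
print(f"Blackwell kW per GPU: {blackwell_kw:.3f}")
```

On these numbers, the GPU count falls fourfold and total power falls 3.75-fold. The saving comes from needing fewer GPUs: the naive per-GPU average actually rises slightly, from 1.875 kW to 2 kW.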

The introduction of the GB200 “superchip” alongside the Blackwell B200 GPU marks a significant milestone. Nvidia reports that, in tests using the GPT-3 LLM with 175 billion parameters, the GB200 delivered seven times the performance and quadrupled the training speed of the H100.

A notable upgrade The Verge highlights is the second-generation transformer engine, which increases compute, bandwidth, and model capacity by using four bits per neuron instead of the previous eight. Another key feature is the next-generation NVLink switch, which lets up to 576 GPUs communicate with one another and provides 1.8 terabytes per second of bidirectional bandwidth. This required Nvidia to build a new network switch chip with 50 billion transistors and 3.6 teraflops of FP8 compute of its own.
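To see why halving the bits per value matters, consider the memory needed just to store a model’s weights. The following is a rough Python sketch, assuming one stored value per parameter and ignoring activations, optimizer state, and any scaling metadata. The 1.8-trillion-parameter figure is borrowed from Nvidia’s training example, and the function name is illustrative.

```python
def weight_storage_terabytes(num_parameters: int, bits_per_value: int) -> float:
    """Storage for the weights alone, in terabytes (1 TB = 10**12 bytes)."""
    total_bits = num_parameters * bits_per_value
    return total_bits / 8 / 10**12


PARAMETERS = 1_800_000_000_000  # 1.8 trillion, from the training example

eight_bit_tb = weight_storage_terabytes(PARAMETERS, 8)
four_bit_tb = weight_storage_terabytes(PARAMETERS, 4)

print(f"8-bit weights: {eight_bit_tb:.1f} TB")
print(f"4-bit weights: {four_bit_tb:.1f} TB")
```

Under these assumptions, the same weights take about 1.8 TB at eight bits and about 0.9 TB at four bits, so a fixed amount of memory can hold a model roughly twice as large.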

Bridging the gap: Nvidia’s new suite of software tools

Nvidia has also introduced a suite of software tools designed to streamline the sale and deployment of AI models for businesses, catering to a clientele that includes the globe’s tech behemoths.

These developments underscore Nvidia’s ambition to broaden its influence in the AI inference market, a segment where its chips are not yet predominant, as noted by Joel Hellermark, CEO of Sana.

Nvidia is renowned for its foundational role in training AI models such as OpenAI’s GPT-4, a process that requires digesting vast quantities of data and is undertaken predominantly by AI-focused and large tech companies.

However, as businesses of various sizes strive to integrate these foundational models into their operations, Nvidia’s newly released tools aim to simplify the adaptation and execution of diverse AI models on Nvidia hardware.

According to Ben Metcalfe, a venture capitalist and founder of Monochrome Capital, Nvidia’s approach is akin to offering “ready-made meals” instead of gathering ingredients from scratch. This strategy is particularly advantageous for companies that may lack the technical prowess of giants like Google or Uber, enabling them to quickly deploy sophisticated systems.

For instance, ServiceNow used Nvidia’s toolkit to develop a “copilot” for addressing corporate IT challenges, demonstrating the practical applications of Nvidia’s innovations.

Noteworthy is Nvidia’s collaboration with major tech entities such as Microsoft, Google, and Amazon, which will incorporate Nvidia’s tools into their cloud services. However, prominent AI model providers like OpenAI and Anthropic are conspicuously absent from Nvidia’s partnership roster.

Nvidia’s toolkit could significantly bolster its revenue, as part of a software suite priced at US$4,500 annually per Nvidia chip in private data centers or US$1 per hour in cloud data centers.
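The two price points can be compared with a back-of-the-envelope calculation. This Python sketch assumes the US$1 hourly rate applies per chip and is billed only for hours actually used, neither of which is specified above. It covers the software fee only, not hardware or cloud instance costs, and the names are illustrative.

```python
ANNUAL_LICENSE_USD = 4_500      # per chip per year, private data center
CLOUD_RATE_USD_PER_HOUR = 1.0   # assumption: charged per chip
HOURS_PER_YEAR = 24 * 365       # 8,760 hours, ignoring leap years


def cloud_cost_usd(hours_used: float) -> float:
    """Software cost in the cloud for a given number of chip-hours."""
    return hours_used * CLOUD_RATE_USD_PER_HOUR


full_year_cloud_cost = cloud_cost_usd(HOURS_PER_YEAR)
break_even_hours = ANNUAL_LICENSE_USD / CLOUD_RATE_USD_PER_HOUR
break_even_utilization = break_even_hours / HOURS_PER_YEAR

print(f"Cloud cost at full utilization: US${full_year_cloud_cost:,.0f} per year")
print(f"Break-even usage: {break_even_hours:,.0f} hours "
      f"({break_even_utilization:.0%} of the year)")
```

Under these assumptions, a chip running around the clock would incur US$8,760 a year in hourly fees, and the annual license becomes the cheaper option once usage exceeds 4,500 hours, or roughly half the year.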

Reuters suggests that the announcements made at GTC 2024 are pivotal in determining whether Nvidia can sustain its commanding 80% share in the AI chip marketplace.

These developments reflect Nvidia’s evolution from a brand favored by gaming enthusiasts to a tech titan on par with Microsoft, boasting a staggering sales increase to over US$60 billion in its latest fiscal year.

While the B200 chip promises a thirtyfold performance increase for tasks like serving chatbot responses, Huang gave few specifics about its performance when training on large volumes of data and did not disclose pricing details.

Although Nvidia’s stock has surged 240% over the past year, Huang’s announcements did not ignite further enthusiasm in the market, and the stock slipped slightly following the presentation.

Tom Plumb, CEO and portfolio manager at Plumb Funds, a significant investor in Nvidia, remarked that the Blackwell chip’s unveiling was anticipated but reaffirmed Nvidia’s leading edge in graphics processing technology.

Nvidia has revealed that key clients, including Amazon, Google, Microsoft, OpenAI, and Oracle, are expected to incorporate the new chip into their cloud services and AI solutions.

The company is transitioning from selling individual chips to offering complete systems, with its latest model housing 72 AI chips and 36 central processors, exemplifying Nvidia’s comprehensive approach to AI technology deployment.

Analysts predict a slight dip in Nvidia’s market share in 2024 as competition intensifies and major customers develop their own chips, posing challenges to Nvidia’s dominance, especially among budget-conscious enterprise clients.

Despite these challenges, Nvidia’s extensive software offerings, particularly the new microservices, are poised to enhance operational efficiency across various applications, reinforcing its position in the tech industry.

Moreover, Nvidia is expanding into software for simulating the physical world with 3-D models, announcing collaborations with leading design software companies. This move, together with Nvidia’s capability to stream 3-D worlds to Apple’s Vision Pro headset, marks a significant leap forward in immersive technology.

Nvidia’s automotive and industrial ventures

Nvidia also unveiled an innovative line of chips tailored for automotive use, introducing capabilities that enable chatbots to operate within vehicles. The tech giant has further solidified its partnerships with Chinese car manufacturers, announcing that electric vehicle leaders BYD and Xpeng will incorporate its latest chip technology.

Last year, BYD surpassed Tesla, becoming the top electric vehicle producer worldwide. The company plans to adopt Nvidia’s cutting-edge Drive Thor chips, which promise to enhance autonomous driving capabilities and digital functionalities. Nvidia also highlighted that BYD intends to leverage its technology to optimize manufacturing processes and supply chain efficiency.

The collaboration will also facilitate the creation of virtual showrooms, Danny Shapiro, Nvidia’s Vice President for Automotive, said during a conference call. Shapiro indicated that BYD vehicles would integrate Drive Thor chips starting next year.

The announcement was part of a broader revelation of partnerships during Nvidia’s GTC developer conference in San Jose, California. Notably, Chinese automakers, including BYD, Xpeng, GAC Aion’s Hyper brand, and autonomous truck developers, have declared their expanded cooperation with Nvidia. Other Chinese brands like Zeekr, a subsidiary of Geely, and Li Auto have also committed to using the Drive Thor technology.

Nvidia extends partnership with BYD (Source – X)

These partnerships reflect a strategic move by Chinese auto brands to leverage advanced technology to offset their relatively low global brand recognition. BYD and its competitors are keen to expand in Europe, Southeast Asia, and other regions outside China, even as they compete with Tesla and other established Western brands in their home market.

Shapiro emphasized the considerable number of Chinese automakers and highlighted the supportive regulatory environment and incentives that foster innovation and the development of advanced automated driving technologies.

Nvidia also announced several other key partnerships in the automotive and industrial sectors, including a collaboration with U.S. software firm Cerence to adapt large language model AI systems for automotive computing needs. Chinese computer manufacturer Lenovo is also working with Nvidia to deploy large language model technologies.

Another development is SoundHound’s use of Nvidia’s technology to create a voice command system for vehicles. The system lets users retrieve information from a virtual owner’s manual by voice, a step forward in how drivers interact with vehicle technology.
