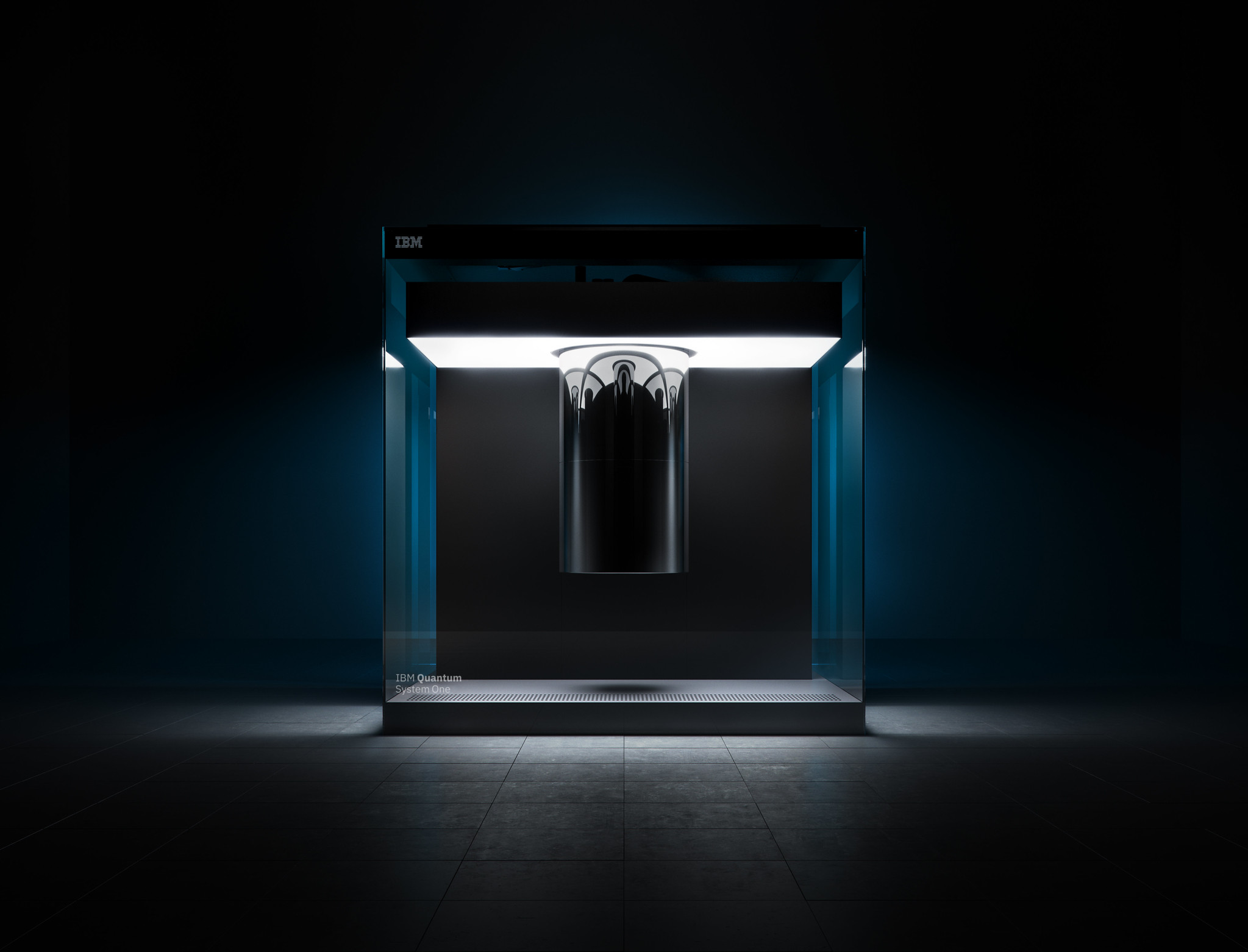

IBM Quantum Lab (Source – IBM Research)

IBM redefines quantum computing with unprecedented computational capabilities

- IBM surpasses classical approaches with 100+ qubit quantum computers.

- IBM to enhance IBM Quantum systems with a minimum of 127 qubits within a year.

Quantum computing holds the potential to achieve significant improvements in computational speed compared to classical computing. However, the main obstacle to realizing its full capabilities is the inherent noise of quantum systems. Despite this challenge, IBM recently reported a quantum computing breakthrough that was published in the scientific journal Nature.

In their study, IBM demonstrated, for the first time, that quantum computers can deliver precise and reliable outcomes on a scale exceeding 100 qubits, surpassing the capabilities of leading classical approaches.

Quantum computing aims to tackle simulations of material components that classical computers have struggled to handle efficiently. However, existing quantum systems suffer from inherent noise and a high error rate due to the delicate nature of qubits and environmental disturbances.

In an experiment, the IBM team demonstrated that quantum computers could surpass leading classical simulations by learning from and mitigating errors within the system. Using the IBM Quantum ‘Eagle’ quantum processor, consisting of 127 superconducting qubits on a chip, they successfully generated large, entangled states that simulate the spin dynamics of a material model. The quantum computer accurately predicted properties such as magnetization.

To validate the accuracy of these simulations, a group of scientists at UC Berkeley performed identical simulations on advanced classical computers at Lawrence Berkeley National Lab’s National Energy Research Scientific Computing Center (NERSC) and Purdue University. As the complexity of the model increased, the quantum computer consistently produced precise results, thanks to advanced error mitigation techniques. In contrast, the classical computing methods eventually faltered and failed to match the performance of the IBM Quantum system.
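The article does not say which error mitigation method the team used, so the following is only an illustrative sketch of one widely used idea, zero-noise extrapolation: run the same circuit at deliberately amplified noise levels, then extrapolate the measured values back to the zero-noise limit. The exponential decay model, the function name `zero_noise_estimate`, and the synthetic numbers are all assumptions made for illustration, not IBM's implementation.

```python
import math


def zero_noise_estimate(noise_gains, noisy_values):
    """Extrapolate an expectation value to zero noise.

    Assumes (for illustration only) that the measured value decays as
    E(g) = E0 * exp(-c * g), where g is the noise amplification factor.
    Fits ln E against g by least squares and returns E0, the value at g = 0.
    """
    if len(noise_gains) != len(noisy_values) or len(noise_gains) < 2:
        raise ValueError("need at least two (gain, value) pairs")
    if any(v <= 0 for v in noisy_values):
        raise ValueError("this simple model requires positive values")

    logs = [math.log(v) for v in noisy_values]
    n = len(noise_gains)
    mean_g = sum(noise_gains) / n
    mean_l = sum(logs) / n

    sxx = sum((g - mean_g) ** 2 for g in noise_gains)
    if sxx == 0:
        raise ValueError("noise gains must not all be equal")
    sxy = sum((g - mean_g) * (l - mean_l) for g, l in zip(noise_gains, logs))

    slope = sxy / sxx
    intercept = mean_l - slope * mean_g  # ln E0
    return math.exp(intercept)


# Synthetic data: a "true" magnetization of 0.8 damped by noise.
true_value = 0.8
decay_rate = 0.3
gains = [1.0, 1.5, 2.0]  # gain 1.0 is the hardware's native noise level
measured = [true_value * math.exp(-decay_rate * g) for g in gains]

print("raw value at native noise:", round(measured[0], 4))
print("mitigated estimate:", round(zero_noise_estimate(gains, measured), 4))
```

On real hardware the measured values also carry statistical noise and the decay model is only approximate, so the extrapolated estimate comes with its own uncertainty.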

A video from the collaboration between IBM Quantum and UC Berkeley shows how quantum computers can achieve this level of reliability and accuracy, solving challenging simulation problems at a scale of 127 qubits.

According to Darío Gil, Senior Vice President and Director of IBM Research, this recent achievement is the first instance where quantum computers have successfully modeled a natural physical system with accuracy surpassing that of leading classical approaches.

“To us, this milestone is a significant step in proving that today’s quantum computers are capable, scientific tools that can be used to model problems that are extremely difficult – and perhaps impossible – for classical systems, signaling that we are now entering a new era of utility for quantum computing,” he added.

Committing to power up the IBM Quantum systems

IBM also plans to upgrade its IBM Quantum systems so that each has a minimum of 127 qubits, a rollout it expects to complete within the next year. These processors will provide access to computational capabilities that surpass classical methods for specific applications. They will also offer improved coherence times and reduced error rates compared to previous IBM quantum systems.

By combining these enhanced features with continuously evolving error mitigation techniques, IBM Quantum systems aim to reach a new industry threshold termed ‘utility-scale.’ At this point, quantum computers can serve as scientific tools to address a wide range of problems on a scale that classical systems may never be able to solve.

IBM Quantum System One (Source – IBM Research)

IBM Quantum is making utility-scale processors with more than 100 qubits available to all its users. This has already allowed over 2,000 IBM Quantum Spring Challenge participants to access these processors. Throughout the challenge, participants explored dynamic circuits, a technology that facilitates the execution of more advanced quantum algorithms.

It’s a busy year for IBM

IBM has been actively engaged in quantum computing, achieving notable milestones. In late 2022, the company set a new record by building the largest quantum computing system, featuring a processor with 433 qubits, the fundamental units of quantum information processing. Building on this accomplishment, IBM has now set its sights on an even more ambitious goal: constructing a 100,000-qubit machine within the next decade.

The announcement was made by IBM during the G7 summit held in Hiroshima, Japan, on May 22. To accomplish this ambitious objective, IBM will collaborate with the University of Tokyo and the University of Chicago through a US$100 million initiative. The aim is to propel quantum computing towards full-scale operation, enabling the technology to tackle complex problems beyond conventional supercomputers’ capabilities.

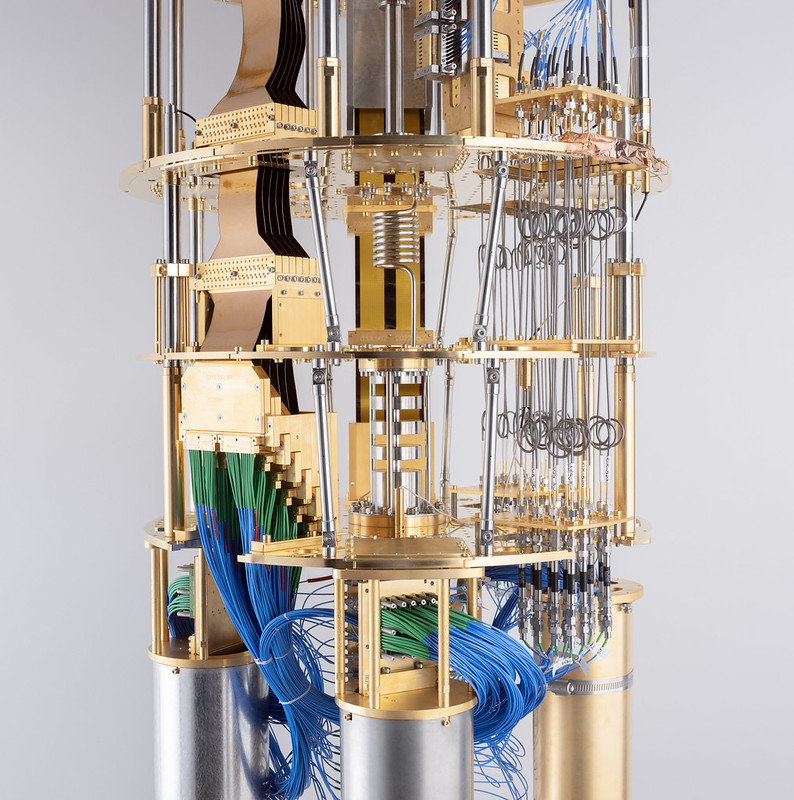

IBM Quantum cryostat (Source – IBM Research)

IBM’s aspirations for a 100,000-qubit machine are not unique, as other companies are aiming just as high. Google, for instance, has expressed its intention to reach a million qubits by the end of the decade, although only a fraction of those qubits will be available for computations because the rest are needed for error correction. IonQ, based in Maryland, plans to achieve 1,024 “logical qubits” by 2028, with each logical qubit comprising a circuit of 13 physical qubits for error correction. Meanwhile, PsiQuantum, located in Palo Alto, shares Google’s million-qubit objective but has not disclosed its timeline or error-correction requirements.
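To put the logical-versus-physical distinction in concrete terms, the short sketch below works through the IonQ figures quoted above. The function name `physical_qubits_needed` is hypothetical, and the calculation assumes every logical qubit uses exactly the stated 13 physical qubits with no additional overhead.

```python
def physical_qubits_needed(logical_qubits: int, physical_per_logical: int) -> int:
    """Total physical qubits if each logical qubit uses a fixed-size block."""
    if logical_qubits < 0 or physical_per_logical < 1:
        raise ValueError("counts must be non-negative and the ratio at least 1")
    return logical_qubits * physical_per_logical


# Figures quoted in the article for IonQ's 2028 target.
total = physical_qubits_needed(1024, 13)
print(total)  # 13312
```

By this arithmetic, 1,024 logical qubits correspond to 13,312 physical qubits, which illustrates why headline physical-qubit counts from different companies are hard to compare directly.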

However, focusing solely on the number of physical qubits can be misleading, as the construction details significantly impact factors such as noise resilience and operational ease. Companies involved in quantum computing often provide alternative performance metrics, such as “quantum volume” and the count of “algorithmic qubits.” Over the next decade, advancements in error correction techniques, qubit performance, software-based error mitigation, and the differentiation between various qubit types will make this competition particularly challenging to track and assess.

In summary, quantum computing is at a pivotal stage with IBM’s groundbreaking achievements in accurately modeling physical systems beyond classical approaches. The advancements in quantum processors and error mitigation techniques bring everyone closer to the realization of quantum computers as powerful scientific tools.
