IBM is contemplating using a chip called the AI Unit as part of its new “Watsonx” cloud service. Source: Shutterstock

IBM considers in-house AI chip to cut the cost of operating Watsonx

- IBM is contemplating using a chip called the AI Unit as part of its new “Watsonx” cloud service.

- IBM Watsonx is the company’s latest enterprise-ready AI and data platform, replacing Watson, its first major AI system, which failed to gain market traction.

When IBM made its bold foray into the artificial intelligence space over a decade ago with Watson, the effort failed badly, mainly because of the high costs involved. Now, the tech giant has started rolling out IBM Watsonx, its new enterprise-ready AI and data platform. Learning from previous mistakes, IBM said it is considering using its in-house AI chip this time around, which could help lower the platform’s operating cost.

The platform, built to cater to the boom in generative AI technologies among enterprises, was unveiled in May this year. On July 11, IBM revealed that it had been shaped by more than 150 users across industries participating in its beta and tech preview programs.

Clients using Watsonx. Source: IBM

“Previewed at IBM THINK in May, Watsonx comprises three products to help organizations accelerate and scale AI – the watsonx.ai studio for new foundation models, generative AI, and machine learning; the watsonx.data fit-for-purpose data store, built on an open lakehouse architecture; and the watsonx.governance toolkit to help enable AI workflows to be built with responsibility, transparency, and explainability (coming later this year),” IBM said in a statement.

Simply put, Watsonx allows clients and partners to specialize and deploy models for various enterprise use cases or build their own. The models, according to the tech giant, are pre-trained to support a range of Natural Language Processing (NLP) tasks, including question answering, content generation and summarization, text classification, and extraction.

A déjà vu?

In February 2011, the world was introduced to Watson, IBM’s cognitive computing system, which defeated Ken Jennings and Brad Rutter on the game show Jeopardy! It was the first widely seen demonstration of cognitive computing, and Watson’s ability to answer subtle, complex, pun-laden questions made clear that a new era of computing was at hand.

On the back of that very public success, IBM turned Watson in 2011 toward one of the most lucrative but untapped industries for AI: healthcare. What followed over the next decade was a series of ups and downs, primarily downs, that exemplified both the promise and the numerous shortcomings of applying AI to healthcare. The Watson Health odyssey finally ended in 2022 when it was sold off “for parts.”

In retrospect, IBM described Watson as a learning journey for the company. “There have been wrong turns and setbacks,” IBM says, “but that comes with trying to commercialize pioneering technology.”

Fast forward to today: more than a decade after Watson failed to gain market traction, IBM is hoping to take advantage of the boom in generative AI technologies that can write human-like text. Mukesh Khare, general manager of IBM Semiconductors, told Reuters recently that one of the barriers the old Watson system faced was high costs, which IBM hopes to address now.

Khare said using IBM’s own chips could lower cloud service costs because they are power efficient.

The AI chip

IBM announced the chip’s existence in October 2022 but did not disclose the manufacturer or how it would be used. “It’s our first complete system-on-chip designed to run and train deep learning models faster and more efficiently than a general-purpose CPU,” IBM said in a release dated October 13, 2022.

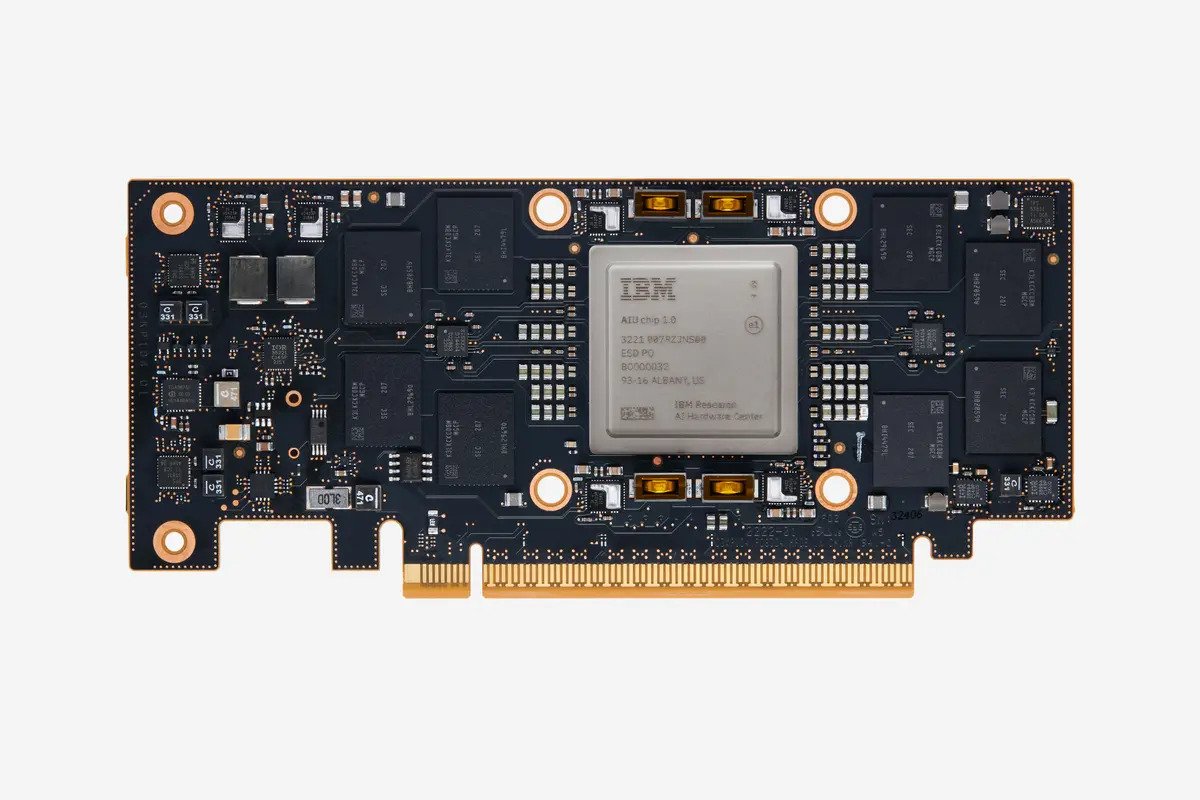

IBM Research AI Hardware Center created a specialized computer chip for AI – calling it an Artificial Intelligence Unit, or AIU. Source: IBM

IBM also shared how, for the last decade, it had run deep learning models on CPUs and GPUs (graphics processors designed to render images for video games) when what it needed was an all-purpose chip optimized for the types of matrix and vector multiplication operations used for deep learning. “At IBM, we’ve spent the last five years figuring out how to design a chip customized for the statistics of modern AI,” it said.

IBM’s point was that AI models are growing exponentially, but the hardware to train these behemoths and run them on servers in the cloud or on edge devices like smartphones and sensors hasn’t advanced as quickly. “That’s why the IBM Research AI Hardware Center created a specialized computer chip for AI. We’re calling it an Artificial Intelligence Unit, or AIU,” the tech giant said.

Basically, the workhorse of traditional computing — standard chips known as CPUs — was designed before the revolution in deep learning, a form of machine learning that makes predictions based on statistical patterns in big data sets. “CPUs’ flexibility and high precision suit general-purpose software applications. But those winning qualities put them at a disadvantage when training and running deep learning models, which require massively parallel AI operations,” IBM added.
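To illustrate why this kind of workload parallelizes so well, here is a minimal Python sketch of a single neural-network layer written as a matrix-vector multiplication. The function names and the numbers are hypothetical and purely illustrative; nothing here reflects how IBM's chip is actually programmed. The point is that every output value is its own independent dot product, so specialized hardware can, in principle, compute many of them at once.

```python
def dense_layer(weights, inputs):
    """Multiply a weight matrix by an input vector.

    Each output is a separate dot product of one weight row with the
    inputs, so no output depends on any other output.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def count_multiply_accumulates(weights):
    """Count the multiply-accumulate operations one layer pass requires."""
    return sum(len(row) for row in weights)


# Hypothetical toy layer: 2 outputs, 3 inputs.
weights = [
    [0.5, -1.0, 2.0],
    [1.5, 0.0, -0.5],
]
inputs = [2.0, 4.0, 1.0]

outputs = dense_layer(weights, inputs)
macs = count_multiply_accumulates(weights)

print(outputs)  # [-1.0, 2.5]
print(macs)     # 6
```

A real model repeats this pattern across layers with millions or billions of weights, which is why the number of independent multiply-accumulate operations matters far more than the general-purpose flexibility of a CPU.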

The AI chip by the tech giant uses a range of smaller bit formats, including both floating point and integer representations, to make running an AI model far less memory intensive. “We leverage key IBM breakthroughs from the last five years to find the best tradeoff between speed and accuracy,” the company said.
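As a rough illustration of why smaller bit formats reduce memory use, the following Python sketch stores the same handful of weights as 32-bit floats, 16-bit floats, and 8-bit integers. It uses a generic symmetric quantization scheme chosen for simplicity; it is an assumption for illustration, not IBM's actual number format, and the weight values and function names are made up.

```python
import struct


def quantize_int8(values):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical model weights.
weights = [0.82, -1.27, 0.05, 0.4, -0.66, 1.01]
n = len(weights)

fp32_bytes = len(struct.pack(f"<{n}f", *weights))  # 4 bytes per value
fp16_bytes = len(struct.pack(f"<{n}e", *weights))  # 2 bytes per value

quantized, scale = quantize_int8(weights)
int8_bytes = len(struct.pack(f"<{n}b", *quantized))  # 1 byte per value

# The price of the smaller format is a small rounding error per weight.
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(fp32_bytes, fp16_bytes, int8_bytes)  # 24 12 6
print(max_error)
```

The 8-bit version needs a quarter of the memory of the 32-bit one, at the cost of a rounding error bounded by half the quantization step. That is the speed-versus-accuracy tradeoff the company refers to.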

By using its own chips, IBM aims to improve cost efficiency, which could make its cloud service more competitive in the market. Khare also told Reuters that IBM has collaborated with Samsung Electronics on semiconductor research and has selected the company to manufacture the AI chips.

The approach is similar to those adopted by other tech giants such as Google and Amazon.com: by developing proprietary chips, IBM can differentiate its cloud computing service in the market. But Khare said IBM was not trying to design a direct replacement for semiconductors from Nvidia, whose chips lead the market in training AI systems with vast amounts of data.
