Meta is opening up the AI model, Llama 2, to major cloud providers, including Microsoft Corp. Source: Shutterstock

Meta is taking on ChatGPT by making its latest AI model free for all

- Meta is opening up the AI model, Llama 2, to major cloud providers, including Microsoft Corp.

- Qualcomm also announced it is working with Meta to bring Llama 2 to laptops, phones, and headsets starting in 2024, for AI-powered apps that work without relying on cloud services.

In February, when tech giants like Microsoft and Google announced their AI chatbots, Meta also rolled out a new large language model. The first, smaller version of LLaMA was restricted only to researchers – all while the underlying models for Google’s LaMDA and OpenAI’s ChatGPT are not public.

Five months later, Meta announced the availability of Llama 2, the next generation of its open-source large language model. Meta is still sticking to its long-held belief that allowing all sorts of programmers to tinker with technology is the best way to improve it. This time, however, Llama 2 will not be limited to researchers. Meta said it is open-sourcing the AI model for commercial use through partnerships with major cloud providers, including Microsoft Corp.

“We believe an open approach is the right one for the development of today’s AI models, especially those in the generative space where the technology is rapidly advancing,” Meta said in a blog post on Tuesday (July 18). The Facebook parent company believes making its large language model open source is the safer option.

“Opening access to today’s AI models means a generation of developers and researchers can stress test them, identifying and solving problems fast, as a community. By seeing how others use these tools, our teams can learn from them, improve those tools, and fix vulnerabilities,” the company stated.

Separately, Mark Zuckerberg, in a post on his personal Facebook page, noted that Meta has a long history of open-sourcing its infrastructure and AI work. “From PyTorch, the leading machine learning framework, to models like Segment Anything, ImageBind, and Dino, to basic infrastructure as part of the Open Compute Project. This has helped us build better products by driving progress across the industry,” he wrote.

Mark Zuckerberg’s Facebook post.

The move would also position Meta alongside other tech giants as a pivotal contributor to the AI arms race. For context, Chief Executive Officer Mark Zuckerberg has said that incorporating AI improvements into all the company’s products and algorithms is a priority and that Meta is spending record amounts on AI infrastructure.

According to Meta, there has been massive demand for Llama 1 from researchers, with more than 100,000 requests for access to the large language model.

What’s new with the latest AI model by Meta

The commercial rollout of Llama 2 is the first project to debut out of the company’s generative AI group, a new team assembled in February. According to Zuckerberg, Llama 2 comes as pre-trained and fine-tuned models with 7 billion, 13 billion, and 70 billion parameters. “Llama 2 was pre-trained on 40% more data than Llama 1 and had improvements to its architecture,” he said.

For the fine-tuned models, Zuckerberg said Meta had collected more than one million human annotations and applied supervised fine-tuning and reinforcement learning with human feedback (RLHF) with leading results on safety and quality.

Microsoft will distribute the new version of the AI model through its Azure cloud service, and the model will also run on the Windows operating system, Meta said in its blog post, referring to Microsoft as “our preferred partner” for the release. In the generative AI race, Microsoft has emerged as the clear leader through its investment and technology partnership with ChatGPT creator OpenAI, which charges for access to its model.

“Starting today, Llama 2 is available in the Azure AI model catalog, enabling developers using Microsoft Azure to build with it and leverage their cloud-native tools for content filtering and safety features. It is also optimized to run locally on Windows, giving developers a seamless workflow as they bring generative AI experiences to customers across different platforms,” the tech giant said in its blog post.

Meta and Microsoft’s collaboration gives researchers and businesses access to build with our next generation large language model as the foundation of their work. Source: Mark Zuckerberg’s Instagram

Meta said Llama 2 is available through Amazon Web Services (AWS), Hugging Face, and other providers.

Qualcomm partners with Meta to run Llama 2 on phones

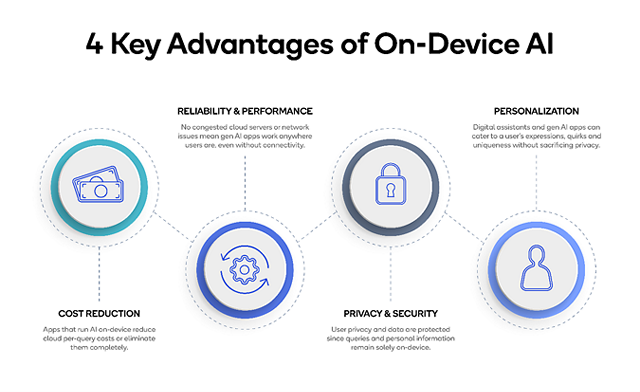

Shortly after Meta unveiled Llama 2, Qualcomm announced that it is partnering with the tech giant for the new large language model. “Qualcomm Technologies Inc. and Meta are working to optimize the execution of Meta’s Llama 2 large language models directly on-device – without relying on the sole use of cloud services,” Qualcomm said.

Meta and Qualcomm Technologies have a longstanding history of working together to drive technology innovation and deliver the next generation of premium device experiences. Source: Qualcomm

For the US chip designer, the ability to run generative AI models like Llama 2 on devices such as smartphones, PCs, VR/AR headsets, and vehicles allows developers to save on cloud costs and provide users with private, more reliable, personalized experiences. Qualcomm is scheduled to make Llama 2-based AI implementations available on Snapdragon-powered devices from 2024 onwards.

“We applaud Meta’s approach to open and responsible AI and are committed to driving innovation and reducing barriers-to-entry for developers of any size by bringing generative AI on-device,” said Durga Malladi, senior vice president and general manager of technology, planning, and edge solutions businesses, Qualcomm Technologies, Inc.

Malladi reckons that to scale generative AI into the mainstream effectively, AI will need to run both in the cloud and on devices at the edge, such as smartphones, laptops, vehicles, and IoT devices.
