Jensen Huang, NVIDIA’s founder and CEO, announced the advanced NVIDIA GH200 Grace Hopper platform – built for the AI era. (Source – YouTube)

From superchips to supercomputing: NVIDIA charts the future of AI


- NVIDIA unveils the GH200 Grace Hopper platform, featuring HBM3e memory.

- With the NVIDIA AI Workbench and the AI Enterprise 4.0 software, the company is shaping the AI revolution.

NVIDIA is making headlines again, this time with announcements at the SIGGRAPH conference in Los Angeles. The company unveiled its latest leap in AI hardware: an upgraded processor platform with more speed and memory capacity. The move reinforces NVIDIA’s position at the forefront of the rapidly evolving AI market. Let’s dive into the details.

The company introduced the next-generation NVIDIA GH200 Grace Hopper platform. It is based on a new Grace Hopper Superchip with what NVIDIA calls the world’s first HBM3e processor, and it is built for the era of accelerated computing and generative AI.

The new platform will be available in a range of configurations and is designed to handle some of the most complex generative AI workloads, including large language models, recommender systems, and vector databases.

The dual configuration delivers up to 3.5 times more memory capacity and three times more bandwidth than the current-generation offering. It comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance, and 282GB of the latest HBM3e memory.

HBM3e memory, which is 50% faster than current HBM3, delivers a total of 10TB/sec of combined bandwidth. This lets the new platform run models 3.5 times larger than the previous version, while three times the memory bandwidth improves performance.
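The headline figures become more concrete with a little back-of-the-envelope arithmetic. The Python sketch below works from the article’s totals (282GB, 10TB/sec, 144 cores) and adds some illustrative assumptions that are not NVIDIA figures. It assumes the "dual configuration" means two GH200 superchips that split the totals evenly. It assumes model weights are stored at 2 bytes per parameter (FP16/BF16). It also ignores activations, KV cache, and runtime overhead.

```python
# Back-of-the-envelope arithmetic for the dual GH200 configuration.
# Totals come from the article; everything else is an illustrative assumption.

HBM3E_CAPACITY_GB = 282        # total HBM3e memory in the dual configuration
COMBINED_BANDWIDTH_TBS = 10.0  # combined HBM3e bandwidth, TB/s
ARM_CORES = 144                # Arm Neoverse cores in the server
SUPERCHIPS = 2                 # assumption: "dual" = two GH200 superchips, split evenly


def per_superchip(total: float, n: int = SUPERCHIPS) -> float:
    """Split a configuration-wide total evenly across n superchips (assumed even split)."""
    return total / n


def max_params_billions(capacity_gb: float, bytes_per_param: float) -> float:
    """Upper bound on model size (billions of parameters) whose weights fit in memory.

    Ignores activations, KV cache, and runtime overhead, so real limits are lower.
    GB / (bytes per parameter) = billions of parameters (decimal units).
    """
    return capacity_gb / bytes_per_param


def full_sweep_ms(capacity_gb: float, bandwidth_tbs: float) -> float:
    """Time to read every byte of memory once at peak bandwidth, in milliseconds.

    1 GB / (1 TB/s) = 1 ms in decimal units, so the ratio is already in ms.
    """
    return capacity_gb / bandwidth_tbs


if __name__ == "__main__":
    print(f"Per superchip: {per_superchip(ARM_CORES):.0f} cores, "
          f"{per_superchip(HBM3E_CAPACITY_GB):.0f} GB HBM3e, "
          f"{per_superchip(COMBINED_BANDWIDTH_TBS):.1f} TB/s")
    print(f"FP16/BF16 weights that fit: ~{max_params_billions(HBM3E_CAPACITY_GB, 2):.0f}B parameters")
    print(f"Reading all memory once: ~{full_sweep_ms(HBM3E_CAPACITY_GB, COMBINED_BANDWIDTH_TBS):.1f} ms")
```

Under these assumptions, the script reports 72 cores, 141GB and 5.0TB/sec per superchip. It estimates that the configuration could hold the weights of roughly a 141-billion-parameter FP16 model. It also shows that reading all 282GB once takes about 28.2 ms. That last figure hints at why bandwidth matters for memory-bound workloads that touch all of a model’s weights on every step.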

Leading system manufacturers are already delivering systems based on the previously announced Grace Hopper Superchip. The next-generation platform with HBM3e is fully compatible with the NVIDIA MGX server specification unveiled at COMPUTEX. This means any system manufacturer can quickly add Grace Hopper to more than 100 server variations.

Empowering developers with NVIDIA AI Workbench

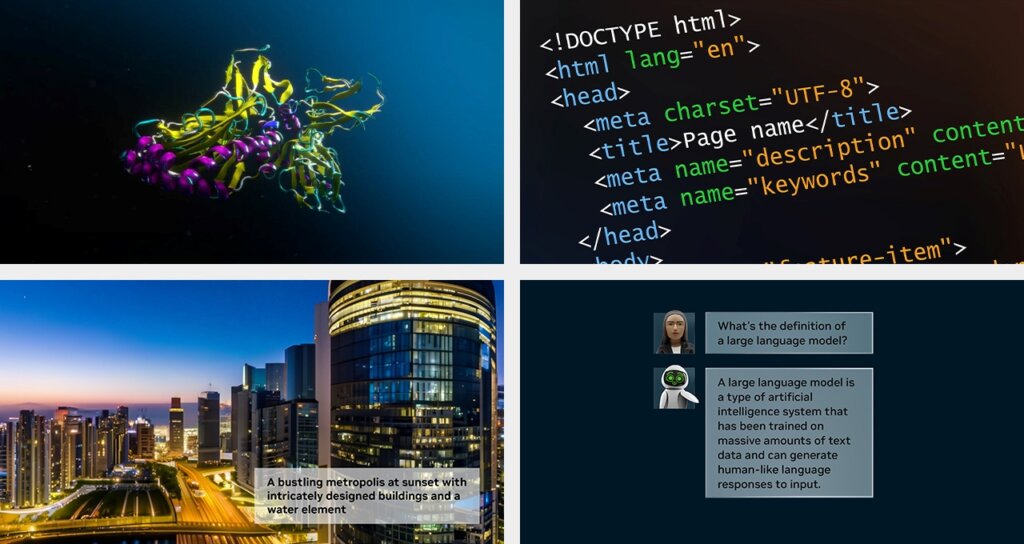

For developers, NVIDIA has launched NVIDIA AI Workbench. This unified toolkit lets developers create, test, and customize pretrained generative AI models on a PC or workstation. They can then scale those models to virtually any data center, public cloud, or NVIDIA DGX Cloud.

AI Workbench aims to make it easier to start enterprise AI projects. Its simplified interface runs on a local system. From there, developers can customize models from popular repositories such as Hugging Face, GitHub, and NVIDIA NGC using their own data. The resulting models can then be shared easily across multiple platforms.

NVIDIA AI Workbench simplifies the lives of developers. (Source – NVIDIA)

NVIDIA also announced a partnership with Hugging Face. The goal is to bring generative AI supercomputing to the large community of developers building large language models and other advanced AI applications.

According to Jensen Huang, NVIDIA’s founder and CEO, researchers and developers working with generative AI are transforming industries. The partnership aims to connect the vast Hugging Face AI community with NVIDIA’s AI computing platform on the world’s leading clouds. In essence, it makes NVIDIA AI computing readily accessible to Hugging Face users.

As part of the partnership, Hugging Face will launch a new service called "Training Cluster as a Service." The service is designed to simplify the creation of new and custom generative AI models for enterprises. It will be powered by NVIDIA DGX Cloud and is expected to become available in the near future.

Hugging Face provides a platform where developers build, train, and deploy state-of-the-art AI models using open-source tools. More than 15,000 organizations use it, and its community has shared over 250,000 models and 50,000 datasets.

Integrating DGX Cloud with Hugging Face gives users easy access to NVIDIA’s AI supercomputing platform. Hugging Face users will be able to train and tune foundation models more easily, which could accelerate enterprise adoption of large language models. The new DGX Cloud-backed service is meant to let companies build models quickly and efficiently using their own proprietary data.

With so many pretrained models available, customizing them with open-source tools can be daunting. Developers often have to hunt through numerous online repositories to find the right frameworks and tools.

NVIDIA AI Workbench is designed to simplify this process. Developers can pull all the enterprise-grade tools they need into a single developer toolkit, drawing on both open-source repositories and the NVIDIA AI platform.

Leading AI infrastructure providers, including Dell Technologies, Hewlett Packard Enterprise, HP Inc., Lambda, Lenovo, and Supermicro, are adopting AI Workbench. They see it as a way to augment their latest multi-GPU desktop workstations, high-end mobile workstations, and virtual workstations.

More toys for generative AI

To broaden the reach of generative AI, NVIDIA has also released the latest version of its enterprise software platform, NVIDIA AI Enterprise 4.0. It gives businesses the tools to adopt generative AI, along with the security and API stability needed for reliable production deployments.

New tools in NVIDIA AI Enterprise that aim to streamline generative AI deployment include:

- NVIDIA NeMo, a cloud-native framework for building, customizing, and deploying large language models. With NeMo, NVIDIA AI Enterprise provides end-to-end support for creating and customizing LLM applications.

- NVIDIA Triton Management Service, which helps automate and optimize production deployments. It can automatically deploy multiple NVIDIA Triton Inference Server instances in Kubernetes, with model orchestration to keep scalable AI running efficiently.

- NVIDIA Base Command Manager Essentials, cluster management software that helps enterprises maximize the performance and utilization of AI servers across data center, multi-cloud, and hybrid-cloud environments.
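The Triton item describes automated deployment of many Triton Inference Server instances on Kubernetes. To illustrate what such automation manages, the Python sketch below builds an ordinary Kubernetes Deployment manifest for Triton. It is not the Triton Management Service API, which the article does not describe.

A few details are assumptions for illustration only:

- The helper name `triton_deployment`, the `/models` repository path, and the replica and GPU counts are hypothetical.
- The image tag is left as the `<xx.yy>` placeholder used in Triton’s own documentation.
- The model repository is assumed to be mounted into the pod separately, so volume definitions are omitted.

```python
import json


def triton_deployment(name: str, image: str, replicas: int,
                      model_repo: str = "/models", gpus_per_pod: int = 1) -> dict:
    """Build a plain Kubernetes Deployment manifest for Triton Inference Server.

    Hypothetical helper for illustration: this is not the Triton Management
    Service API, only the kind of manifest such automation manages.
    Assumes the model repository is mounted at `model_repo` (volumes omitted).
    """
    labels = {"app": name}
    container = {
        "name": "tritonserver",
        "image": image,
        "command": ["tritonserver"],
        "args": [f"--model-repository={model_repo}"],
        # Triton's default ports: HTTP/REST, gRPC, and Prometheus metrics.
        "ports": [
            {"name": "http", "containerPort": 8000},
            {"name": "grpc", "containerPort": 8001},
            {"name": "metrics", "containerPort": 8002},
        ],
        # GPU resource exposed by the NVIDIA Kubernetes device plugin.
        "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # Placeholder tag as in Triton's docs; substitute a real release before applying.
    manifest = triton_deployment(
        "triton-llm", "nvcr.io/nvidia/tritonserver:<xx.yy>-py3", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Kubernetes accepts JSON manifests as well as YAML, so once the placeholder tag is replaced, the printed output could be applied with `kubectl apply -f`. Scaling out then comes down to changing `replicas`, the kind of bookkeeping a management service is meant to automate.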

NVIDIA AI Enterprise software lets users build and run AI solutions across the cloud, data center, and edge. It is certified to run on mainstream NVIDIA-Certified Systems, NVIDIA DGX systems, all major cloud platforms, and the newly announced NVIDIA RTX workstations.

As the world stands on the cusp of an AI revolution, NVIDIA’s latest offerings underscore its commitment to making advanced AI accessible to industries, developers, and end users. With new hardware, software, and partnerships arriving together, NVIDIA looks set to remain at the forefront of what comes next in AI.
