The rise of generative AI and what to expect from Google in the future. (Source – Shutterstock)

Understanding Google and its AI tools

- At its Gen AI SEA media summit, Google underscored generative AI’s industry impact through innovative AI tools and unique data strategies.

- Google previewed new AI-driven features for Google Meet.

- With Google’s new “Attend for me” feature, you can now miss meetings… without really missing meetings.

Google Cloud recently held its Generative AI SEA media summit in Singapore. Although that’s not a new Google event, those who attended had the opportunity to delve deeper into the company’s new offerings – we even got some hands-on experience with some Google AI tools.

In terms of generative AI, Mitesh Agarwal, managing director of solutions and technology for Asia Pacific at Google Cloud, quoted former Google research engineer Andrew Ng, saying “AI is the new electricity.” Agarwal believes this technology needs a supporting ‘grid’ of infrastructure, which he identifies as the cloud. That grid in turn needs ‘fuel’ to keep it constantly supplied, which comes in the form of accumulated data.

He raised some intriguing questions: ‘Which industry will be first? There’s a lot of discussion about what will happen, in fact, to each of us as individuals here.’

“I don’t think there is any predictive model or AI that can foresee this; we believe that some industries will be transformed completely, while others will find ways to adapt,” said Agarwal. “What we certainly know is that productivity will massively improve. We know that any experience you’ve had today is subject to change.”

And this is something that can be easily seen in the gaming industry.

Evolving gaming with Google and its AI tools

Jack Buser, director of game industry solutions at Google Cloud, commented on what distinguishes generative AI from its predecessors. How does it evolve beyond previous technologies? On one level, it’s evolutionary. “To think back, games have long used the term ‘AI,’ but our definition was a constrained set of rules, decision trees, and behaviors. Players engaged with ‘bots,’ but compared to a human, bots often weren’t as engaging.”

That evolution has accelerated recently, as game companies have begun using true AI and ML frameworks (built on data and analytics) to understand players, manage churn, and increase monetization. Some have even integrated their frameworks with advanced AI models such as large language models (LLMs), bringing us to generative AI.

But generative AI represents more than just an evolutionary step forward. It’s a whole new dimension for innovation, and, Buser posits, the most significant change to the industry of games since the introduction of real-time 3D graphics.

Generative AI in gaming was demonstrated live at the Summit by Ethlas, a Southeast Asian Web3 games software company that now lets game artists generate game assets on the fly, such as hyper-personalized game weapons, to improve the player experience. This was possible because Ethlas could quickly launch enterprise-grade generative AI applications, using Google Cloud’s AI-optimized infrastructure and capabilities on Vertex AI to tune and deploy open-source models such as Stable Diffusion.

Elston Sam, co-founder of Ethlas, showcasing how generative AI is being used in gaming.

Due to popular demand, Ethlas’ flagship multiplayer shooter game, Battle Showdown, will also open up its “weapon AI system” to players in the next few weeks, allowing them to customize their in-game characters using generative AI.

What differentiates Google from the rest?

A question every company faces, and one that Google addressed at the Summit, was “Why is Google’s AI model better than its competitors’?” Agarwal’s personal view is that foundation models, whether developed by Google, OpenAI, Meta, or anyone else, will, over time, become accessible to everyone.

“Everyone will have access to foundational models. You all have access to these foundation models, except that you might not be aware of it,” he said. “For instance, when using Bard, you are essentially accessing Google’s PaLM model. Consequently, companies are asking, ‘What is the differentiator if everyone has access to the same models, assuming they can afford them?'”

Agarwal emphasizes that while access to foundational AI models is vital, the unique data used to train these models is the real differentiator. Using standard applications like Bard isn’t enough; organizations must leverage their proprietary data for true value. This principle is evident in how BloombergGPT operates, utilizing its extensive proprietary trading data. Success hinges on training these sophisticated models with specialized data, turning information gathered from interactions — like those a bank has with its customers — into actionable insights and effective responses.

Google is integrating this philosophy into its AI offerings. The tech giant has launched Vertex AI Search and Conversation, formerly known as Generative AI App Builder. This platform lets developers quickly build AI-driven chat interfaces and digital assistants on top of their own data with minimal coding, while ensuring robust management and security.

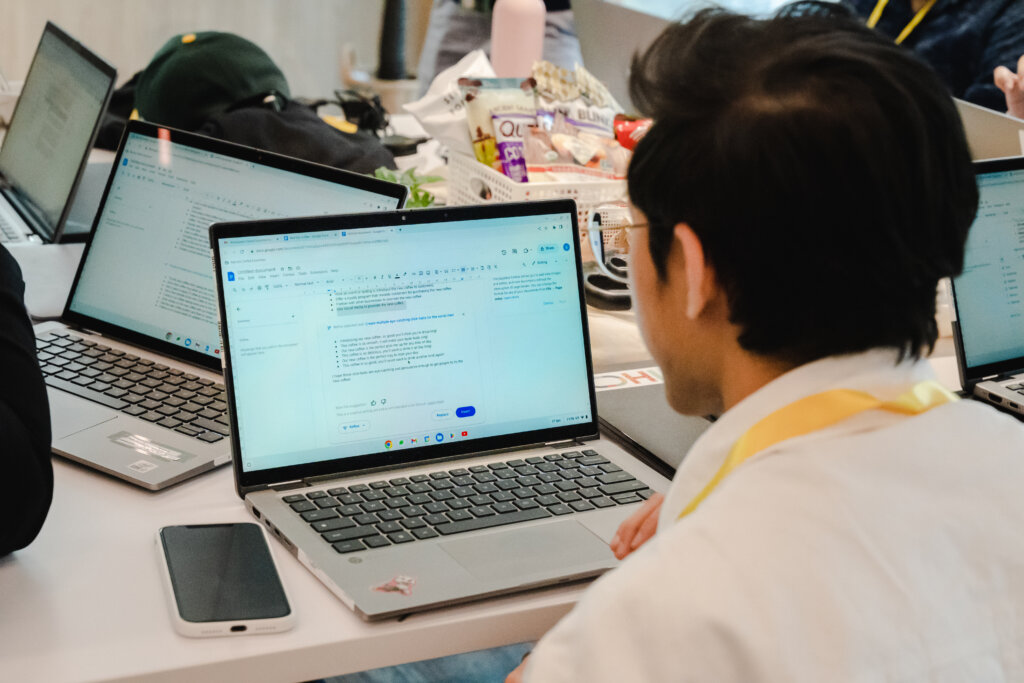

Zul working on a demo of Vertex AI in action.

Vertex AI Search lets organizations set up search of a quality comparable to Google’s own, with multimodal, iterative capabilities powered by foundation models. It can ground results exclusively in an organization’s own data, or use that data to supplement the model’s initial training.

Vertex AI Conversation, by contrast, is designed for building chat and voice bots that mimic human interaction, leveraging foundation models and supporting both audio and text. It streamlines development, enabling the creation of chatbots from existing websites or document collections with minimal coding.

To help AI practitioners build generative AI apps with Vertex AI, Google offers the Model Garden, a platform where developers can select from more than 100 large foundation models to suit their use cases. These include Google’s first-party models such as Imagen, its text-to-image foundation model, which lets organizations generate and customize studio-grade images at scale for any business need. Most importantly, according to Google, users are completely protected from all copyright issues.

Ownership in the AI era

In light of this protection, there arises a question about ownership specifics: how can users trace these elements back to themselves, since they maintain ownership? And what exactly do they possess when they generate these components?

According to Agarwal, Google employs a feature known as SynthID (described at the summit as ‘Synthetic ID’), which is embedded as part of users’ image metadata. The concept is akin to how NFTs operate within blockchain technology, where the same questions arise: how do you trace NFTs? How do you establish ownership?

“Google has incorporated Synthetic ID into imaging technology, enabling users to substantiate ownership. This integration achieves several objectives: first, it authenticates ownership through metadata unique to the user; second, it ensures that copyright information is included; and third, it verifies whether the content was AI-generated, as distinguishing this is often a requirement,” he added.

In the coming months, Google Meet users can expect an AI-driven improvement in their video feed quality, correcting blurriness possibly caused by older webcam models. Additionally, Google is experimenting with generative AI to create a virtual ‘professional studio’ experience, enhancing your video calls’ lighting and overall quality.

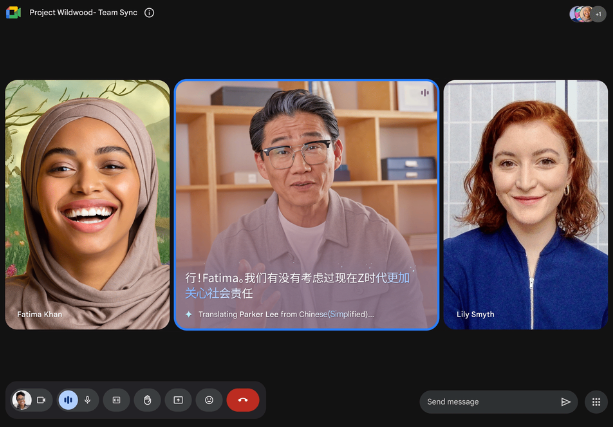

Another development is the introduction of ‘automatic translated captions.’ This feature will automatically detect and translate over 300 language pairs in real time during a Google Meet call, breaking down language barriers in global communication. It will also provide transcripts in both the original language and the translated one, making it easier to follow up post-meeting.

Automatic Translated Captions in action. (Source – Google)

Google Meet is also set to launch a ‘real-time teleprompter’ feature, offering users real-time guidance if they lose track during a presentation, ensuring they convey their points professionally. Also, with the ‘Take Notes for Me’ feature, meeting minutes are actively documented, enabling late participants to seamlessly catch up on the discussion without causing interruptions.

The ‘Take Notes for Me’ feature goes beyond just providing summaries. It catalogs video segments corresponding to different discussion points, marking when new topics are introduced and what was discussed. This functionality allows participants to review specific meeting segments, ensuring comprehensive understanding and alignment among all members.

Google’s new ‘Attend for me’ feature is a game-changer for those juggling packed schedules. If you’re unable to attend a meeting, your avatar can participate in your stead, armed with your preset questions. While it’s not about being in multiple places simultaneously, this feature ensures your essential queries are raised, and the meeting insights are shared with you afterwards. That means Google AI tools can replace you – temporarily, and in a good way!

The Google way in confronting cybersecurity with its AI tools

Tech Wire Asia has previously discussed Google’s use of Duet AI for cybersecurity, aiming to provide deeper insights and enhanced threat management. The constant evolution of AI and the emergence of new threats highlight the importance of maintaining human intervention in decision-making processes.

Mark Johnston, director, Office of the CISO, Asia Pacific, Google Cloud, underscored the necessity of keeping humans at the core of decision-making, as they contribute unique insights not replicable by AI. The system is designed to learn from and adapt to each organization’s needs and operational nuances. Information entered into Google Workspace is safeguarded and not shared across platforms, ensuring organizational specifics don’t become generalized, which could lead to potential operational risks.

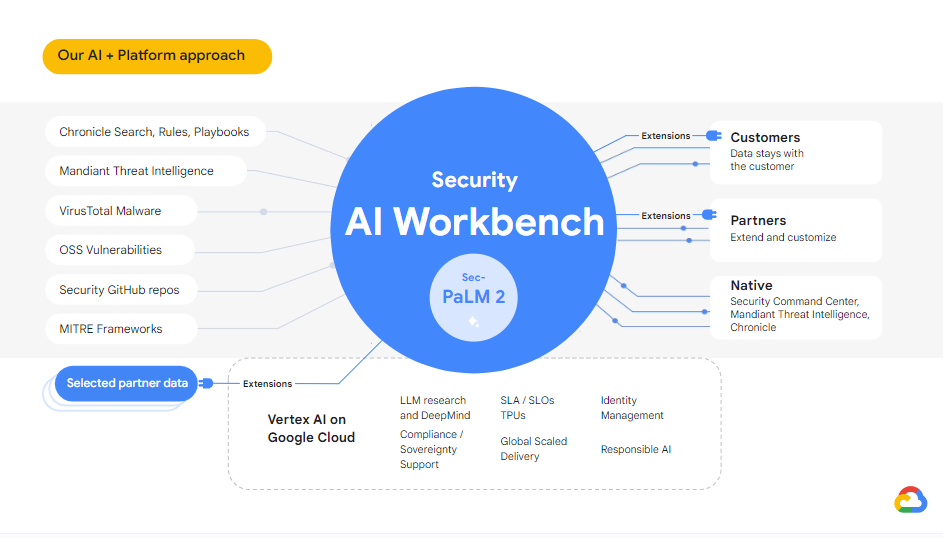

Earlier this year, Google Cloud announced Security AI Workbench, a first-of-its-kind platform powered by a specialized security LLM, Sec-PaLM. This system appears to function as an automated policy enforcer, executing traditionally human-dependent tasks. Enterprises using Google Workspace are backed by a continuously updated platform with critical rules, regulations, and protective policies, all orchestrated by AI to defend against looming threats.

How Google Cloud Security AI Workbench works. (Source – Google)

Johnston confirmed that Google’s capabilities, spanning its extensive research and Threat Intelligence, are fundamental to its AI model. This model is consistently fed the latest data to respond to threats effectively, and includes various compliance measures, policy changes, and standards that refine the AI’s learning process.

The process is ongoing, with the AI system regularly updated and retrained with the most current information. These enhancements are made accessible via products like VirusTotal Code Insight, improving its malware detection and analysis capabilities, and large language model breach analytics, enabling the discovery of issues across novel data sets. Google’s Mandiant team also participates in this process, delving into your security datasets and seeking anomalies.

Additionally, functions undertaken by Duet AI and the security operations suite provide detailed guidance on the appropriate steps to take in various incident scenarios or playbook contexts.

The latest Google AI tools are designed both to help businesses in the ways they need on a day-to-day basis, and to open up creative and productive ways to get the most out of the AI revolution.
