Not everyone is happy with AI regulations. (Image by Shutterstock)

As the US and UK map AI regulations, how will it impact Asia?

- The US and UK are looking to improve AI regulations.

- China has agreed to work with other countries to ensure safe AI development.

- However, some tech developers feel regulations could limit AI capabilities.

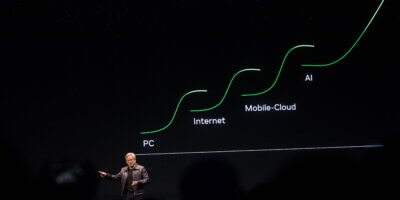

While everyone agrees that AI has been a revolutionary technology, some of its recent uses have made people more cautious in their approach to it. Calls for AI regulations are growing, and they come not just from governments but also from tech companies and experts themselves, especially as adoption of AI increases.

There is no doubt that, when used with positive intent, AI can do more good than harm. But in the wrong hands, AI can do a lot of damage. Cybercriminals are already using AI tools to improve their tactics. This includes using AI to create content such as emails to target and lure a lot more victims. Some cybercriminals are even using AI to detect cybersecurity protections and find ways to bypass them.

There is also a significant rise in AI deepfake content. While deepfakes initially only targeted famous individuals, the tools are now available to anyone, and scammers are using them to run scams and defraud individuals. For example, a scammer could create a deepfake profile of an individual and use it for financial transactions in their name. Another concern is the rise in deepfake porn being generated from any image. Even teenagers are making AI nudes of their fellow students – it’s that straightforward.

But what concerns society the most about AI is how the technology could take over the world someday. Unlike some rogue AI from a Hollywood film, the reality today is that AI is capable of doing almost every task a human can do. Society is concerned that AI will take over more roles at work as companies look to cut costs in the long run. Some companies have already indicated that they will replace some of their workforce with AI and robots.

Then there’s the concern of AI biases. While the technology is still learning for some use cases, the results it produces can often be inaccurate or biased. Examples include how AI recognition tools can stereotype certain races in search results and profiling applications. Some of the information generated by AI search engines is also not as accurate or current as it needs to be if it is to be relied on consistently.

There are also copyright concerns. Generative AI is capable of creating content such as writing stories, movie scripts and even drawing images. But most of the results are based on work created by real people. In the US, there have been protests – and strikes – around how film companies are using AI to write scripts and also recreating the voices and faces of celebrities. Some authors and artists have even sued AI companies for using their content without acknowledging them or paying royalties.

The US and UK recently unveiled more AI regulations. (Image by Shutterstock)

Can regulations fix AI problems?

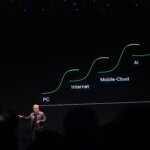

In the US, President Joe Biden has issued a landmark executive order to ensure that the country leads the way in seizing the promise and managing the risks of AI. The executive order establishes new standards for AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more.

As part of the Biden-Harris administration’s comprehensive strategy for responsible innovation, the executive order builds on previous actions the President has taken, including work that led to voluntary commitments from 15 leading companies to drive safe, secure, and trustworthy development of AI.

“AI holds extraordinary potential for both promise and peril. Responsible AI use has the potential to help solve urgent challenges while making our world more prosperous, productive, innovative, and secure. At the same time, irresponsible use could exacerbate societal harms such as fraud, discrimination, bias, and disinformation; displace and disempower workers; stifle competition; and pose risks to national security. Harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks. This endeavor demands a society-wide effort that includes government, the private sector, academia, and civil society,” the President said.

With this executive order, the President has instigated the most sweeping actions yet taken to protect Americans from the potential risks of AI systems. The order will:

- Require that developers of the most powerful AI systems share their safety test results and other critical information with the US government.

- Develop standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy.

- Protect against the risks of using AI to engineer dangerous biological materials by developing strong new standards for biological synthesis screening.

- Protect Americans from AI-enabled fraud and deception by establishing standards and best practices for detecting AI-generated content and authenticating official content.

- Establish an advanced cybersecurity program to develop AI tools to find and fix vulnerabilities in critical software, building on the Biden-Harris administration’s ongoing AI Cyber Challenge.

- Order the development of a National Security Memorandum that directs further actions on AI and security, to be developed by the National Security Council and White House Chief of Staff.

Despite these new regulations, some tech scholars feel that more clarity is needed to fully understand how the order can make a real difference. Some also feel that the regulations could limit researchers’ ability to explore AI’s full potential. More importantly, there are concerns about who will actually police AI models and how it will be done, especially how compliance would be secured without punitive actions.

In fact, some AI developers are hoping the President will revisit the executive order, as they feel it restricts the use of open source AI. There are also concerns that AI will eventually be monopolized by big tech companies, leaving users and developers at their mercy when it comes to pricing and other crucial gateways to access.


Over in the UK, the recently concluded AI Safety Summit resulted in the Bletchley Declaration, which was signed by the participating countries, including the US and China. The declaration calls for an understanding of the safety risks that arise at the frontier of AI. This includes the risks from general-purpose AI models, including foundation models, as well as specific narrow AI models.

“We are especially concerned by such risks in domains such as cybersecurity and biotechnology, as well as where frontier AI systems may amplify risks, such as disinformation. There is potential for serious, even catastrophic, harm, either deliberate or unintentional, stemming from the most significant capabilities of these AI models. Given the rapid and uncertain rate of change of AI, and in the context of the acceleration of investment in technology, we affirm that deepening our understanding of these potential risks and of actions to address them is especially urgent,” the declaration read.

To address the frontier AI risks, the focus will be on:

- identifying AI safety risks of shared concern, building a shared scientific and evidence-based understanding of these risks, and sustaining that understanding as capabilities continue to increase, in the context of a wider global approach to understanding the impact of AI in our societies.

- building respective risk-based policies across countries to ensure safety in light of such risks, collaborating as appropriate while recognizing our approaches may differ based on national circumstances and applicable legal frameworks.

Will AI regulations in Asia take a different approach to the majority of the world? (Image by Shutterstock)

What does this mean for AI in Asia?

Compared to the US and Europe, AI regulations in Asia could be taking a different approach. China, Singapore, Indonesia, the Philippines, India, Japan and the Republic of Korea were among the signatories of the Bletchley Declaration.

Statistics have shown that AI adoption in Southeast Asia in particular is growing much faster than in the rest of the world. Singapore is leading AI adoption in the region, and the use of the technology there is still bound by some regulations, depending on the industry.

Earlier this year, Singapore launched AI Verify, an AI governance testing framework and software toolkit that validates the performance of AI systems against a set of internationally recognized principles through standardized tests. As regulations are developed in the region, the AI Verify toolkit has been made open source to draw in developers, industry and research communities and grow AI governance testing and evaluation.

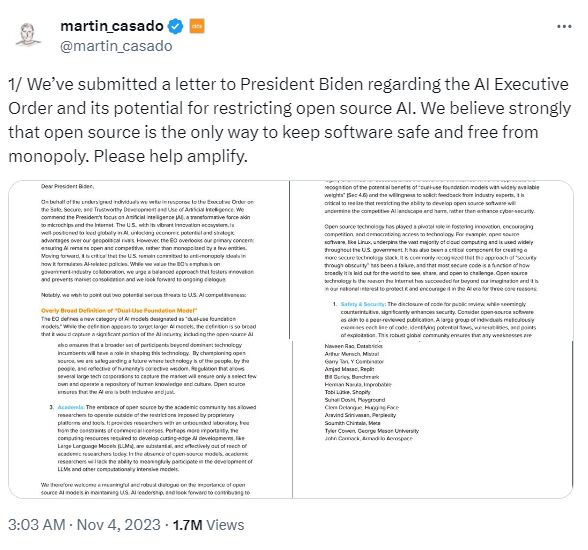

China, which is at the forefront of AI development, has taken an interesting approach to regulations. As most US-based generative AI tools are not available in China, the country has been developing its own AI models. Tech giants like Alibaba, Baidu, Tencent and Huawei, as well as startups, are innovating their own versions of AI, with some apparently as good as OpenAI’s ChatGPT and Google’s Bard.

In fact, China’s regulations are aimed at addressing risks related to AI and introducing compliance obligations on entities engaged in AI-related business. The Algorithm Recommendation Regulation focuses on the use of algorithm recommendation technologies (including, among others, generative and synthetic algorithms) to provide internet information services in China. The Deep Synthesis Regulation focuses on the use of deep synthesis technologies (a subset of generative AI technologies) to provide internet information services in China.

The Draft Ethical Review Measure, on the other hand, focuses on the ethical review of, among other things, the research and development of AI technologies in China. The Generative AI Regulation more broadly regulates the development and use of all generative AI technologies providing services in China.

China and the US will still compete in AI development. (Image by Shutterstock)

Competing to be the best?

While China has agreed to work with the United States, the European Union and other countries to collectively manage the risk from AI, it remains to be seen how this will impact competition between nations in the development of AI. Currently, both the US and China are at the forefront of AI development.

Specifically, whenever an American tech company announces a new AI tool, China is ready to build an alternative to it. Hence, there is really no slowing down the technology from being developed – so who really should be policing it?

Wu Zhaohui, China’s Vice-Minister of Science and Technology, said during the opening session of the AI summit that Beijing was ready to increase collaboration on AI safety to help build an international “governance framework.”

“Countries regardless of their size and scale have equal rights to develop and use AI,” he said.
