Cloudera believes enterprises will intensify their efforts to operationalize generative AI. Photo: Shutterstock

Here’s how enterprises could embrace generative AI and cloud tech in 2024

- Cloudera believes enterprises will intensify efforts to operationalize generative AI while prioritizing cloud.

- Companies could adjust their approach to managing growing volumes of data across environments.

- Cloudera expects a steady migration of data and workloads into open data lakehouse architectures.

We are approaching a year since Microsoft and OpenAI sparked the generative AI revolution. That subset of AI, which focuses on teaching machines to produce original and creative content, has piqued the interest of enterprises worldwide due to its potential to revolutionize industries and the processes they use.

A recent McKinsey report stated that existing generative AI and other technologies, including cloud, can automate tasks that currently occupy 60% to 70% of employees’ time. Those are attractive statistics from a business point of view – though they also fuel employee anxiety over the future of work.

In the last ten months alone, generative AI firms have had substantial success raising venture capital, with many securing significant funding and impressive valuations. At the same time, IDC research reveals that two-thirds of organizations in the Asia Pacific region are either investigating or investing in generative AI this year.

With enterprise adoption of generative AI growing, consumption of ancillary cloud computing, storage, and other services has also been ticking up, boosting the cloud computing sector as a whole. Moving into next year, Daniel Hand, field chief technology officer for APJ at Cloudera, predicted that companies would intensify their efforts to operationalize generative AI.

In sharing his tech predictions for 2024, Hand noted that enterprises are also likely to adjust their approaches to managing growing volumes of data across environments – especially the cloud – to drive flexibility and growth. Hand had a… handful… of other insights to share on how the next year will probably look, especially within the generative AI and cloud space.

The year of prioritizing MLOps and data integration capabilities

Daniel Hand, field CTO, Cloudera APJ

Hand noted that since the release of ChatGPT (3.5) in November 2022, organizations have grappled with harnessing its advantages while ensuring the secure handling of large amounts of contextual data. ChatGPT and other SaaS-based large language models (LLMs) have posed notable data privacy concerns for businesses.

For context, unlike traditional AI, which operates based on predetermined rules, generative AI can learn from data and generate content autonomously. This technology uses complex algorithms and neural networks to understand patterns and produce outputs that mimic human-like creativity.

Often, the content in questions, answers, and contextual data is sensitive, making it incompatible with public multi-tenanted services that use this data for model retraining. Therefore, in 2024, Hand expects organizations “to continue to focus on developing strong machine learning operations (MLOps) and data integration capabilities.”

Doubling down on RAG, fine-tuning to optimize LLMs

Optimizing LLMs involves methods such as prompt engineering, retrieval-augmented generation (RAG), and fine-tuning. “RAG uses content from a knowledge base to enrich the prompt and provide necessary context. A key component of the RAG architecture is a database of knowledge base content, indexed specially. User questions are encoded in a mathematical representation that can be used to search for the content nearest to it in the database,” Hand shared.

The retrieved content and the user’s question are then combined into a prompt and sent to the LLM for inference. Providing both the question and domain context delivers significantly better results than sending the question alone. RAG has proven to be a practical approach to adopting LLMs because it does not require training or tuning of LLMs – but still delivers good results. Hand believes that RAG will continue to be an accessible approach to generative AI for many organizations in 2024.
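The retrieval flow Hand describes can be sketched in a few lines of Python. This is a minimal illustration, not Cloudera's implementation: the knowledge-base snippets are hypothetical, and the `embed` function is a toy bag-of-words stand-in for a real embedding model, chosen only so the example runs with the standard library. The helper names `retrieve` and `build_prompt` are likewise assumptions made for this sketch.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base snippets; a real system would index far more content.
KNOWLEDGE_BASE = [
    "Refunds are processed within five business days of approval.",
    "Support is available by phone on weekdays from 9am to 5pm.",
    "Passwords must be rotated every ninety days.",
]


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents whose vectors are nearest to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Enrich the prompt with retrieved context before it goes to the LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", KNOWLEDGE_BASE))
```

The string returned by `build_prompt` is what would be sent to the LLM. No model weights are changed at any point, which is why RAG needs no training or tuning.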

However, an approach to fine-tuning that gained a lot of interest in 2023 is parameter-efficient fine-tuning (PEFT). “PEFT trains a small neural network on domain-specific data and sits alongside the general purpose LLM. This provides most of the performance benefits of retraining the larger LLM, but at a fraction of the cost and required training data,” he said.
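To give a sense of why the "small neural network" is cheap to train, here is a rough sketch of one widely used PEFT technique, low-rank adaptation (LoRA). The article does not say which PEFT method Hand has in mind, so the choice of LoRA, the class name `LoRALinear`, and the layer sizes are all assumptions made for illustration. The base weights stay frozen and only two small matrices would be trained.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen base weight W plus a trainable low-rank update B @ A (hypothetical sketch)."""

    def __init__(self, d_in, d_out, rank, seed=0):
        rng = random.Random(seed)
        # Frozen weights standing in for one layer of the general-purpose LLM.
        self.W = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable adapter: A starts small and random, B starts at zero,
        # so the adapted layer initially behaves exactly like the base layer.
        self.A = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + u for b, u in zip(base, update)]

    def frozen_params(self):
        return len(self.W) * len(self.W[0])

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


if __name__ == "__main__":
    layer = LoRALinear(d_in=64, d_out=64, rank=4)
    print("frozen:", layer.frozen_params(), "trainable:", layer.trainable_params())
```

With these illustrative sizes the adapter holds 512 trainable values against 4,096 frozen ones. The gap widens sharply at real model dimensions, which is where the cost savings Hand mentions come from. The sketch omits the training loop itself.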

Although fine-tuning LLMs requires stronger ML capabilities, it can lead to greater efficiency, explainability, and more accurate results – especially when training data is limited. “In 2024, I foresee that fine-tuning approaches, like PEFT, will be increasingly used by organizations, both for net-new projects and for replacing some of the earlier RAG architectures. I expect the uptake to be greatest within organizations with larger and more capable data science teams,” he added.

Cloud-first to cloud-considered in the age of generative AI

In 2023, Hand noted some businesses had adjusted their cloud strategy. “They shifted from a cloud-first approach to a considered and balanced stance aligned with the conservative moves made by most large organizations,” he said. The changes were responses to several factors, including the economics of the cloud for many predictable analytical workloads, data management regulations, and organizational fiscal policy.

“These organizations have settled on a cloud-native architecture across both public and private clouds to support their data and cloud strategy – the additional architectural complexity associated with cloud-native being offset with the flexibility, scalability, and cost savings it provides,” Hand said. He believes that cloud computing will continue to be a vital, transformative technology in organizational data strategy in 2024.

Data generation and acquisition are likely to surge in 2024. (Image generated by AI).

Top priorities: data automation, democratization, and zero-trust security

As data generation and acquisition surge, the demand for increased automation and intelligent data platform management grows. Hand foresees observability across infrastructure, platforms, and workloads playing an increasingly important role in 2024. “This is a precursor to automating intelligent platforms that are highly performant, reliable, and efficient,” he said.

Hand added that data practitioners will continue to push for greater democratization of data and more self-service options. “The most innovative organizations empower data scientists, data engineers, and business analysts to get greater insight from data without going through data gatekeepers,” he wrote, adding that removing friction from all stages of the data lifecycle and increasingly providing access to real-time data will be a focus of organizations and technology providers in 2024.

Hand also expects technology to increasingly simplify the implementation and enforcement of zero-trust within organizations and – more so – across them as data federation becomes a growing area of interest in 2024.

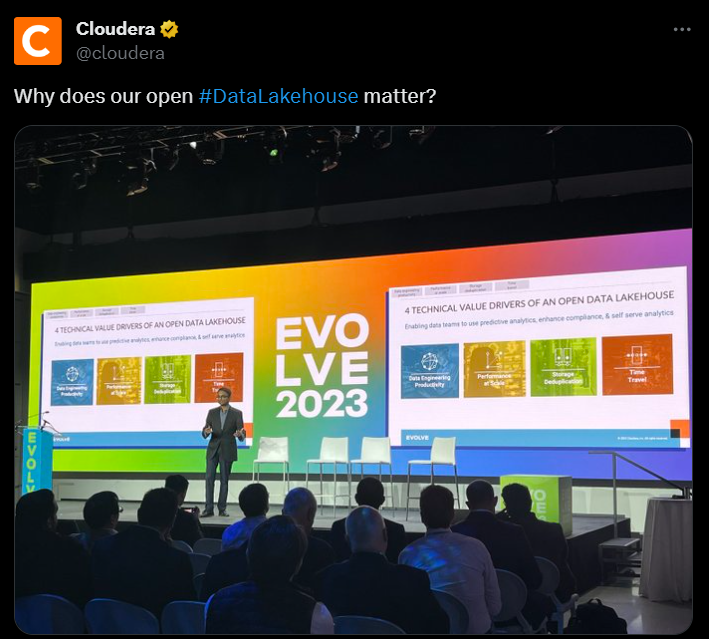

An impending migration to open data lakehouses

Priyank Patel, VP, product management at Cloudera, explaining the company’s data lakehouse architecture.

Hand recalled significant innovation within data lakehouse implementations in 2022, with leading industry data management providers settling on Apache Iceberg as the de facto format. “Iceberg’s rapid adoption as the preferred open technology almost certainly influenced several data management providers to change their open source strategy and build support for it into their products,” he shared.

Therefore, in 2024, Hand anticipates a continuous shift of data and workloads toward open data lakehouse architectures in both public and private cloud environments.
