AMD Instinct MI300 AI chips. Source: AMD’s website.

Can AMD disrupt Nvidia’s AI reign with its latest MI300 chips?

- AMD’s latest AI chip boasts over 150 billion transistors, 2.4 times the memory of Nvidia’s leading H100, and 1.6 times its memory bandwidth.

- AMD CEO Lisa Su expects the data center AI accelerator market to reach US$400 billion or more by 2027.

- Meta, OpenAI, and Microsoft are adopting AMD’s latest AI chip, the Instinct MI300X.

In the fast-paced world of GPUs and semiconductor innovation, the rivalry between AMD and Nvidia has spanned decades. That longstanding competition has transformed gaming and creative workloads, and the emergence of generative AI has added a new dimension to the battle. While Nvidia has traditionally dominated AI, AMD is now fielding strong contenders that challenge Nvidia’s long-held supremacy.

The battle between AMD and Nvidia is defined by a continuous cycle of technological advances. Each company strives to outdo the other in performance, energy efficiency, and features, a race that has produced increasingly powerful GPUs capable of rendering immersive games and handling complex AI workloads.

At a mid-week “Advancing AI” event in San Jose, California, AMD said it will go head to head with Nvidia in the AI chip market by launching a new lineup of accelerator processors. According to CEO Lisa Su, the AMD Instinct™ MI300 series accelerators are designed to outperform rival products in running AI software.


The launch marks a pivotal moment in AMD’s five-decade history, positioning the company for a significant face-off with Nvidia in the booming AI accelerator market. Such chips play a crucial role in AI model development because they process data far more efficiently than conventional computer processors.

“The truth is we’re so early,” Su said, according to Bloomberg’s report. “This is not a fad. I believe it.” The event signals AMD’s growing confidence that its MI300 lineup can attract major tech players and potentially redirect significant investment toward the company. During the Wednesday investor event, Meta, OpenAI, and Microsoft announced that they will adopt AMD’s latest AI chip, the Instinct MI300X.

The move signals a notable shift among tech companies seeking alternatives to Nvidia’s costly graphics processors, which have traditionally been crucial for developing and deploying AI programs like OpenAI’s ChatGPT. Nvidia shares fell 2.3% to US$455.03 in New York the same day, reflecting investor concern about the perceived threat posed by the new chip.

AMD, however, did not see a corresponding share gain: its stock slipped a modest 1.3% to US$116.82 on a day when tech stocks generally underperformed. For context, Nvidia’s market value surpassed US$1.1 trillion this year, fueled mainly by surging demand for its chips from data center operators.

The looming question around Nvidia’s dominance of the accelerator market is how long it can hold its current position without significant competition. The launch of the AMD Instinct MI300 range will put that question to the test.

AMD vs Nvidia: who has better AI chips right now?

The MI300 Series of processors, which AMD calls “accelerators,” was first announced nearly six months ago when Su detailed the chipmaker’s strategy for AI computing in the data center. AMD has built momentum since then.

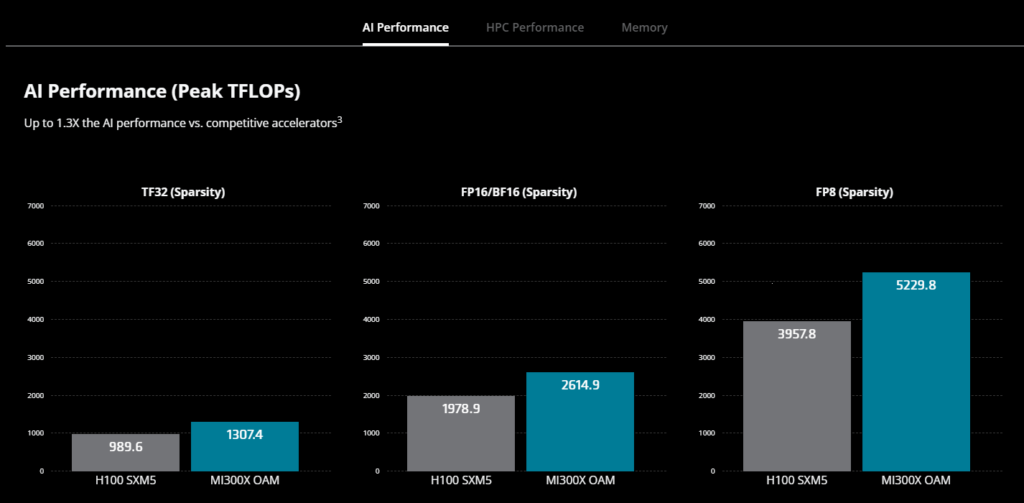

This week’s announcement means that the MI300X and MI300A are now shipping and in production. The MI300X, designed for cloud providers and enterprises, is built for generative AI applications and outperforms Nvidia’s H100 GPU on two key metrics: memory capacity and memory bandwidth. According to AMD, that lets the chip deliver comparable AI training performance and significantly higher AI inference performance.

In concrete terms, the MI300X GPU packs over 150 billion transistors and offers 2.4 times the memory of Nvidia’s H100, the current market leader. It also reaches 5.3 TB/s of peak memory bandwidth, 1.6 times the H100’s 3.3 TB/s.

Spec comparison. Source: AMD’s website.

“AMD sees an opening: large language models — used by AI chatbots such as OpenAI’s ChatGPT — need a huge amount of computer memory, and that’s where the chipmaker believes it has an advantage,” a Bloomberg report reads.

“It’s the highest performance accelerator in the world for generative AI,” Su said at the company’s event. In a briefing beforehand, Brad McCredie, AMD’s corporate vice president of data center GPU, said the MI300X GPU will still have more memory bandwidth and memory capacity than Nvidia’s next-generation H200 GPU, which is expected next year.

In November, when Nvidia revealed its plans for the H200, the company specified that it would incorporate 141 GB of HBM3e memory and offer 4.8 TB/s of memory bandwidth. McCredie nonetheless expects fierce competition between the two companies on the GPU front.
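The ratios quoted above are easy to reproduce. The minimal sketch below divides each MI300X figure by the corresponding Nvidia figure, for both the H100 and the upcoming H200. The bandwidth numbers and the H200’s 141 GB come from this article; the MI300X (192 GB) and H100 (80 GB) memory capacities are not stated here and are assumed from the vendors’ public spec sheets.

```python
# Capacity in GB of HBM, bandwidth in TB/s of peak memory bandwidth.
# Bandwidths and the H200 capacity are from the article; the MI300X (192 GB)
# and H100 (80 GB) capacities are assumed from the vendors' spec sheets.
SPECS = {
    "MI300X": {"memory_gb": 192, "bandwidth_tbs": 5.3},
    "H100": {"memory_gb": 80, "bandwidth_tbs": 3.3},
    "H200": {"memory_gb": 141, "bandwidth_tbs": 4.8},
}


def advantage(chip: str, rival: str) -> dict:
    """Return chip/rival ratios per spec; a value above 1.0 means chip leads."""
    return {key: SPECS[chip][key] / SPECS[rival][key] for key in SPECS[chip]}


for rival in ("H100", "H200"):
    ratios = advantage("MI300X", rival)
    summary = ", ".join(f"{key} {value:.1f}x" for key, value in ratios.items())
    print(f"MI300X vs {rival}: {summary}")

# MI300X vs H100: memory_gb 2.4x, bandwidth_tbs 1.6x
# MI300X vs H200: memory_gb 1.4x, bandwidth_tbs 1.1x
```

On paper, this is consistent with McCredie’s claim that the MI300X stays ahead of the H200 on both metrics, though its lead narrows to roughly 1.4x in capacity and 1.1x in bandwidth. Spec-sheet ratios alone say nothing about real-world training or inference throughput.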

“We do not expect (Nvidia) to stand still. We know how to go fast, too,” he said. “Nvidia has accelerated its roadmap once recently. We fully expect it to keep pushing hard. We will keep going hard, so we fully wish to stay ahead. Nvidia may leapfrog. We’ll leapfrog back.”

The AMD Instinct MI300A APU comes equipped with 128 GB of HBM3 memory. Compared with the prior MI250X processor, the MI300A delivers 1.9 times the performance per watt on HPC and AI workloads, according to the company.

Forrest Norrod, executive vice president and general manager of AMD’s data center solutions business group, said that at the node level the MI300A achieves twice the HPC performance per watt of its closest competitor. This allows customers to fit more nodes within their overall facility power budget, in line with sustainability goals.

Source: Lisa Su’s X

AMD’s outlook

In her address, Su described AI as the most transformative technology of the last 50 years, surpassing even the introduction of the internet. She also highlighted how much faster AI is being adopted than other groundbreaking technologies. Last year, AMD projected that the data center AI accelerator market would grow at a compound annual rate of 50%, from US$30 billion in 2023 to US$150 billion in 2027.

Su has now revised that estimate, predicting annual growth of more than 70% over the next four years, with the market surpassing US$400 billion in 2027. She also underscored the pivotal role of generative AI and the substantial investment in new infrastructure needed for training and inference, describing the market as “tremendously significant.”
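Both forecasts describe compound annual growth, so the arithmetic can be checked directly. In this minimal sketch, compounding US$30 billion at 50% a year over the four years from 2023 to 2027 lands just above the earlier US$150 billion figure, while 70% a year multiplies the market more than eightfold over the same period. The article does not state the 2023 starting value behind the revised forecast, so the sketch reports only the growth multiplier; the `compound` helper is purely illustrative.

```python
def compound(value: float, annual_rate: float, years: int) -> float:
    """Grow `value` at `annual_rate` (0.5 means 50%), compounded once a year."""
    return value * (1 + annual_rate) ** years


YEARS = 4  # 2023 -> 2027

# Earlier forecast: US$30B in 2023 growing at 50% a year.
earlier_2027 = compound(30, 0.50, YEARS)
print(f"US$30B at 50%/yr for {YEARS} years: US${earlier_2027:.1f}B")  # US$151.9B

# Revised forecast: more than 70% a year. The 2023 base is not given in the
# article, so compute the growth multiplier on a base of 1.
multiplier = compound(1, 0.70, YEARS)
print(f"70%/yr for {YEARS} years: {multiplier:.2f}x growth")  # 8.35x growth
```

Because Su gives a floor (“more than 70%”) rather than a point estimate, the 8.35x multiplier is a lower bound on the growth implied by the revised forecast.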
